When you use a large language model (LLM) like GPT-4 or Claude 3 in production, you don’t pay for a license. You pay for what you use. And what you use changes, sometimes wildly, from one minute to the next. That’s why billing for LLMs isn’t like billing for software. It’s more like paying for electricity: you get charged per unit consumed, and your bill can spike if you suddenly run a thousand requests in an hour. This isn’t theoretical. Companies are losing money, overcharging customers, or facing angry support tickets because their billing systems can’t keep up with how their users actually behave.

What Gets Measured? It’s Not Just Words

Most people think LLM billing is about how many words you type. It’s not. It’s about tokens. A token is a chunk of text: a word, part of a word, or even a punctuation mark. The word "unhappiness" might break into three tokens: "un", "happ", "iness". Input tokens (what you send in) and output tokens (what the model generates) are tracked separately because they are priced differently. Output tokens usually cost more because generating text takes more compute.

But tokens aren’t the whole story. Your bill also includes:

- Compute minutes (how long the GPU or TPU ran)

- Model type (a larger model such as GPT-4-turbo can cost many times more per token than GPT-3.5-turbo)

- Image processing (if you’re sending visuals, each image adds cost)

- Storage (if you’re saving prompts, responses, or embeddings)

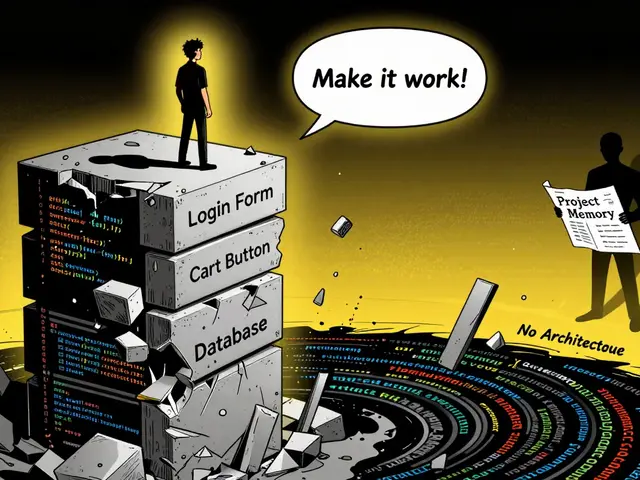

Without accurate metering for all these, your billing system is flying blind. One startup we know didn’t track input vs. output tokens. They thought they were saving money, until they realized customers were asking the model to write 5,000-word reports. The output cost them $1.20 per request. They billed $0.30. They lost $90,000 in three months.
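
The arithmetic behind that anecdote is worth spelling out. The sketch below uses only the three figures quoted above and assumes they are per-request averages; the function name is hypothetical. Amounts are kept in cents so the math stays exact.

```python
# Figures from the anecdote, in cents. Treating them as per-request
# averages is an assumption made for this illustration.
COST_PER_REQUEST = 120      # $1.20 of output cost per request
BILLED_PER_REQUEST = 30     # $0.30 charged to the customer
TOTAL_LOSS = 9_000_000      # $90,000 lost over three months


def margin_per_request(billed_cents: int, cost_cents: int) -> int:
    """Positive means profit; negative means the request was sold at a loss."""
    return billed_cents - cost_cents


margin = margin_per_request(BILLED_PER_REQUEST, COST_PER_REQUEST)

# How many loss-making requests it takes to accumulate the total loss.
implied_requests = TOTAL_LOSS // -margin

print(f"Margin per request: {margin / 100:.2f} USD")
print(f"Requests needed to lose $90,000: {implied_requests:,}")
```

Under these assumptions, a loss of 90 cents per request reaches $90,000 after about 100,000 requests, which is little more than a thousand requests a day over three months.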

Usage Patterns Are Wildly Unpredictable

Traditional SaaS software has predictable usage. A CRM user creates 20 new contacts a week. A project management tool gets 5 logins a day. LLMs? Not even close.

Think about a customer using an AI chatbot for customer service. On Monday, they send 150 messages. Tuesday, a viral post drives traffic. They send 12,000. Wednesday, nothing. Thursday, a bot goes rogue and loops, generating 50,000 tokens in 10 minutes. That’s not a glitch. It’s real life.

These spikes break old billing systems. Most were built for monthly subscriptions. They bill once a month. If your customer’s usage jumps 50x in a week, you won’t know until the invoice drops, and by then, they’re gone. One healthcare AI provider told us a single customer’s spike cost them $12,000 in compute they couldn’t bill because their system only billed monthly. They ate the cost.

Three Pricing Models, and Why Most Fail

There are three main ways companies charge for LLM usage:

- Tiered pricing: First 10,000 tokens at $0.05, next 40,000 at $0.04. This encourages scaling, but if a customer crosses a tier on day 29 of the billing cycle, revenue recognition gets messy under accounting rules like ASC 606. Sixty-three percent of AI companies say this causes internal confusion.

- Volume pricing: Pay less per unit the more you use. Sounds fair, but it’s dangerous. Anthropic reported a 12% revenue shortfall in Q2 2024 because high-volume customers hit premium model tiers they didn’t expect to pay for. They thought they were getting a discount. Instead, they were running a premium model at scale.

- Hybrid models: A monthly fee + usage allowance + overage. This is what 78% of enterprise providers use now. Microsoft’s Azure AI saw 22% churn among customers on pure consumption plans, but only 8% churn with hybrid. Why? People want predictability. They’ll pay $500/month for 100,000 tokens, then $0.001 per token beyond that. It’s easier to budget.

But hybrid models need serious infrastructure. Only 31% of billing platforms can handle the real-time tracking, tier crossings, and usage caps this requires. If your system can’t update balances every 5 seconds, you’re setting yourself up for disputes, refunds, and lost trust.
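
To make the difference between the three models concrete, here is a minimal sketch that prices the same usage under each one. The tier sizes, prices, fee, and allowance are the example figures from the list above. Reading the tier prices as per-token, and billing usage past the last tier at the last tier's rate, are assumptions made for illustration. The function names are hypothetical.

```python
from decimal import Decimal

# (tier size in tokens, price per token). Per-token pricing is an assumption.
TIERS = [(10_000, Decimal("0.05")), (40_000, Decimal("0.04"))]


def tiered_cost(tokens: int) -> Decimal:
    """Tiered pricing: each tier's rate applies only to the tokens inside it."""
    total, remaining = Decimal("0"), tokens
    for size, rate in TIERS:
        used = min(remaining, size)
        total += used * rate
        remaining -= used
    # Assumption: tokens past the last tier are billed at the last tier's rate.
    total += remaining * TIERS[-1][1]
    return total


def volume_cost(tokens: int) -> Decimal:
    """Volume pricing: the rate of the highest tier reached applies to all tokens."""
    rate = TIERS[-1][1]  # assumption: usage past the last tier keeps its rate
    upper_bound = 0
    for size, tier_rate in TIERS:
        upper_bound += size
        if tokens <= upper_bound:
            rate = tier_rate
            break
    return tokens * rate


def hybrid_cost(
    tokens: int,
    base_fee: Decimal = Decimal("500"),
    allowance: int = 100_000,
    overage_rate: Decimal = Decimal("0.001"),
) -> Decimal:
    """Hybrid pricing: fixed fee covers the allowance, overage is billed per token."""
    overage = max(0, tokens - allowance)
    return base_fee + overage * overage_rate


for usage in (10_000, 10_001, 30_000, 120_000):
    print(usage, tiered_cost(usage), volume_cost(usage), hybrid_cost(usage))
```

One thing the sketch makes visible: under volume pricing, a customer at 10,001 tokens pays less than a customer at 10,000, because crossing the threshold reprices every token. That cliff is part of why tier crossings need to be tracked as they happen rather than at invoice time.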

Real-World Pain Points From Users

People aren’t just complaining. They’re leaving.

On Reddit, a user named DataEngineerPro said switching from flat-rate to token-based billing cut customer complaints by 28%. Why? Transparency. People hate bill shock. If they see their usage tick up in real time, they adjust. If they don’t, they get angry.

G2 reviews of Metronome (rated 4.7/5) praise its real-time dashboards. But users also say it took 3-4 months of engineering to set up. Recurly’s LLM module? Rated 4.2/5, but one user said they lost 15% in revenue because it couldn’t tell input from output tokens. That’s not a bug. It’s a design flaw.

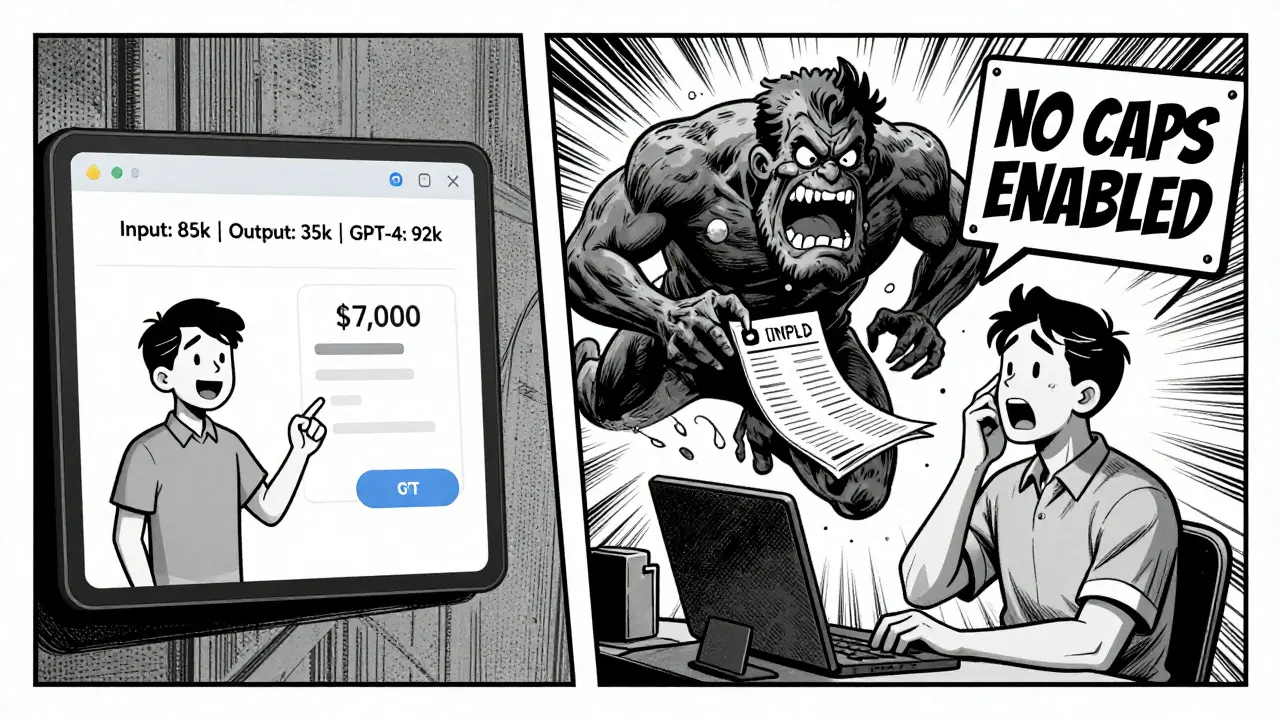

And then there’s the 68% of negative reviews on Trustpilot that mention unexpected spikes. One user wrote: "We were told we’d pay $200/month. We got a $7,000 bill. No warning. No cap. We canceled." That’s not a billing issue. That’s a trust issue.

What Works? Best Practices From the Front Lines

Companies that get this right follow three rules:

- Set usage thresholds with alerts: Notify customers at 50%, 75%, and 90% of their plan limit. Don’t wait for the bill. Give them a heads-up.

- Build sandbox environments: Let customers test their prompts before going live. A sandbox can catch runaway loops or bloated outputs before they hit production.

- Break down usage by model and token type: Show users exactly what they’re paying for. Not just "Total tokens: 120,000", but "Input: 85,000 | Output: 35,000 | GPT-4-turbo: 92,000 | Claude 3: 28,000".

Stripe’s AI billing team found that companies using these practices saw 43% fewer billing disputes. Kinde’s public documentation has a 4.6/5 rating on GitHub because it includes real API examples. Most custom systems? Barely documented. No wonder they fail.
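
The first rule, threshold alerts, is simple to get subtly wrong: if you check "is usage above 50%?" on every event, the customer gets the same alert thousands of times. A minimal sketch of one way around that, comparing the previous and current usage readings so each threshold fires once when it is crossed. The function name is hypothetical, and the thresholds are the 50%, 75%, and 90% marks suggested above.

```python
ALERT_THRESHOLDS_PCT = (50, 75, 90)


def newly_crossed(previous_usage: int, current_usage: int, plan_limit: int) -> list[int]:
    """Return the thresholds (in percent) crossed between two usage readings.

    Integer math avoids floating-point surprises at exact boundaries.
    """
    return [
        pct
        for pct in ALERT_THRESHOLDS_PCT
        if previous_usage * 100 < pct * plan_limit <= current_usage * 100
    ]


# A burst that jumps from 40% to 80% of a 100,000-token plan
# crosses two thresholds at once.
print(newly_crossed(40_000, 80_000, 100_000))

# A small increase inside the same band crosses none.
print(newly_crossed(80_000, 85_000, 100_000))
```

In a real system the previous reading would come from wherever balances are stored; here it is passed in directly to keep the example self-contained.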

The Future Is Real-Time, and AI Will Bill Itself

By 2026, Gartner predicts 65% of AI vendors will use outcome-based billing: you pay not just for tokens, but for results. Did the model reduce customer support time by 30%? Then you pay more. Did it fail? You pay less. It sounds futuristic, but it’s already being piloted. Metronome’s new "Outcome-Based Billing" module lets vendors tie 15-30% of revenue to performance metrics.

And here’s the twist: AI is starting to audit its own bills. A Stanford Health Care study in March 2025 showed an AI tool reviewed 1,000 invoices and flagged errors with 92% accuracy, better than humans. That tool didn’t just check numbers. It understood context: "This output was 2,000 tokens, but the prompt was 15,000. That’s unusual. Let’s investigate."

Token measurement is still a mess. There are 12 different ways to count tokens across providers. The EU’s AI Act, whose first provisions began applying in February 2025, now requires clear disclosure of pricing components. You can’t just say "$0.05 per token". You have to say which tokens, how they’re counted, and how output differs from input.

The market is exploding. Gartner says the LLM billing infrastructure space will hit $4.2 billion by 2026. Stripe leads with 32% share. But Metronome is gaining fast. Why? Because they built for the chaos.

Final Thought: It’s Not About Cost. It’s About Control.

LLM billing isn’t about saving money. It’s about control. Customers want to know what they’re paying for. Providers want to know what they’re earning. If your system can’t handle spikes, track tokens accurately, or give clear visibility, you’re not just losing revenue. You’re losing credibility.

The companies that win won’t be the ones with the cheapest rates. They’ll be the ones who make billing feel fair, predictable, and transparent, even when usage goes off the charts.

Why do LLMs charge for input and output tokens separately?

Input tokens are what you send to the model: your prompt, question, or data. Output tokens are what the model generates in response. Generating text requires more computation than reading it, because the prompt can be processed in parallel while output is produced one token at a time. That’s why output tokens cost more. A model might read 5,000 input tokens in a fraction of a second, but take 10 seconds to write 2,000 output tokens. The compute difference is real, and pricing reflects that.

Can I cap my LLM usage to avoid surprise bills?

Yes, and you should. Most modern billing platforms let you set daily or monthly usage caps. When you hit 90% of your limit, you get an alert. At 100%, you can choose to block further requests or switch to a higher tier. Without a cap, a misconfigured bot or a viral app can run up thousands in minutes. One company had a single API key leak and racked up $22,000 in 48 hours. A $100/month cap would have stopped it.
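
As a rough illustration of what a hard cap does, the sketch below checks each request against a spending limit before it runs. The class and method names are hypothetical and do not correspond to any particular platform's API. It assumes you can estimate a request's cost up front, and it keeps amounts in cents.

```python
from dataclasses import dataclass


@dataclass
class UsageCap:
    """A hypothetical spend cap: alert near the limit, block past it."""

    limit_cents: int
    spent_cents: int = 0
    alert_pct: int = 90

    def authorize(self, cost_cents: int) -> str:
        """Return 'block', 'alert', or 'ok' for a request of the given cost."""
        if self.spent_cents + cost_cents > self.limit_cents:
            # Reject without recording spend, so the cap is never exceeded.
            return "block"
        self.spent_cents += cost_cents
        if self.spent_cents * 100 >= self.alert_pct * self.limit_cents:
            return "alert"
        return "ok"


cap = UsageCap(limit_cents=10_000)  # the $100/month cap from the example above
for cost in (8_000, 1_000, 2_000):
    print(cost, cap.authorize(cost))
```

With a check like this in the request path, a leaked key can spend at most the cap. Blocking is only one policy; the "block" branch is also where a switch to a higher tier could be offered instead.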

What’s the difference between consumption-based and hybrid billing?

Consumption-based means you pay for everything you use, with no monthly fee. Hybrid adds a fixed monthly fee (for a baseline allowance) and charges extra only when you go over. Hybrid is better for businesses that need predictable costs. Consumption works for startups or side projects with erratic usage. Enterprise clients overwhelmingly prefer hybrid: Microsoft found 22% churn on pure consumption, vs. 8% on hybrid.

Why do some LLM billing systems lose money on token tracking?

Because they treat all tokens the same. If your system doesn’t distinguish between input and output, or between different models (like GPT-3.5 vs. GPT-4), you’re undercharging. One company found their system was counting image uploads as text tokens, so they billed $0.01 per image when it should’ve been $0.15. That 15x undercharge cost them $180,000 in a year. Granular tracking isn’t optional. It’s survival.
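
A small sketch shows how large the gap can get. It aggregates usage events by model and token type, then compares a bill computed with per-category rates against one that applies a single flat rate to every token. The model names and all prices are made up for illustration and are not real provider rates. The token counts are chosen to match the breakdown example earlier in the article (85,000 input, 35,000 output).

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical prices in USD per 1,000 tokens, keyed by (model, token type).
RATES = {
    ("model-a", "input"): Decimal("0.01"),
    ("model-a", "output"): Decimal("0.03"),
    ("model-b", "input"): Decimal("0.001"),
    ("model-b", "output"): Decimal("0.002"),
}


def summarize(events):
    """Aggregate (model, token_type, tokens) events into per-category totals."""
    totals = defaultdict(int)
    for model, token_type, tokens in events:
        totals[(model, token_type)] += tokens
    return dict(totals)


def granular_bill(totals) -> Decimal:
    """Price each category at its own rate."""
    return sum(
        (Decimal(tokens) / 1000 * RATES[key] for key, tokens in totals.items()),
        Decimal("0"),
    )


def flat_bill(totals, rate_per_1k: Decimal) -> Decimal:
    """Price every token at one rate, ignoring model and direction."""
    return Decimal(sum(totals.values())) / 1000 * rate_per_1k


events = [
    ("model-a", "input", 60_000),
    ("model-a", "output", 32_000),
    ("model-b", "input", 25_000),
    ("model-b", "output", 3_000),
]
totals = summarize(events)
print("granular:", granular_bill(totals))
print("flat:    ", flat_bill(totals, Decimal("0.001")))
```

With these made-up rates, the flat bill recovers less than a tenth of what the granular bill does for the same 120,000 tokens.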

How do I know if my billing system can handle LLM usage?

Ask these three questions: Can it track tokens in real time? Can it differentiate input from output? Can it handle 10,000+ events per second without lag? If you’re using a system built for SaaS subscriptions (like Zuora Classic), the answer is likely no. Modern LLM billing needs infrastructure designed for volatility. Look for platforms that offer native integrations with AWS, Azure, or GCP, and have proven performance benchmarks under load.