Choosing Between Building and Buying Generative AI

Most CIOs don’t realize it, but the biggest risk in deploying generative AI isn’t the technology; it’s picking the wrong approach. You can have the best model in the world, but if you build it when you should’ve bought it, or vice versa, you’ll waste months, millions, and momentum. The question isn’t whether to build or buy. It’s when to build, when to buy, and when to do both.

Why 95% of GenAI Pilots Fail

It’s not because the models are bad. It’s because organizations treat generative AI like a software upgrade instead of a strategic shift. EY found that 95% of GenAI pilot failures come from misaligned strategy, not technical flaws. A company might spend $3 million building a custom customer service bot, only to find out a $200,000 commercial tool handles 85% of queries with better accuracy. Or they might buy a ready-made tool for legal document review and discover it can’t interpret internal contracts because it wasn’t trained on their data. The problem isn’t the tool. It’s the mismatch between the business need and the deployment path.

The Three Paths: Buy, Boost, Build

MIT Sloan’s research broke this down into three clear options, not just two:

- Buy: Use a commercial platform like Azure OpenAI, Google Vertex AI, or Anthropic’s Claude Enterprise. No code. No training. Just plug in.

- Boost: Start with a commercial model, then fine-tune it with your proprietary data. Think of it as customizing a car you didn’t build.

- Build: Train your own model from scratch. This means hiring ML engineers, buying GPUs, managing data pipelines, and maintaining the system for years.

Most companies don’t need to build. OpenAI’s ChatGPT reportedly cost $10 million to train. Google’s Gemini reportedly cost $191 million. These aren’t investments for the average enterprise. They’re bets made by companies with billions in revenue and thousands of engineers.

When to Buy: Speed, Simplicity, and Low-Risk Use Cases

Buy when your goal is speed and the consequences of error are low. Examples:

- Automating draft emails for sales teams

- Generating product descriptions for e-commerce catalogs

- Summarizing meeting notes or HR onboarding documents

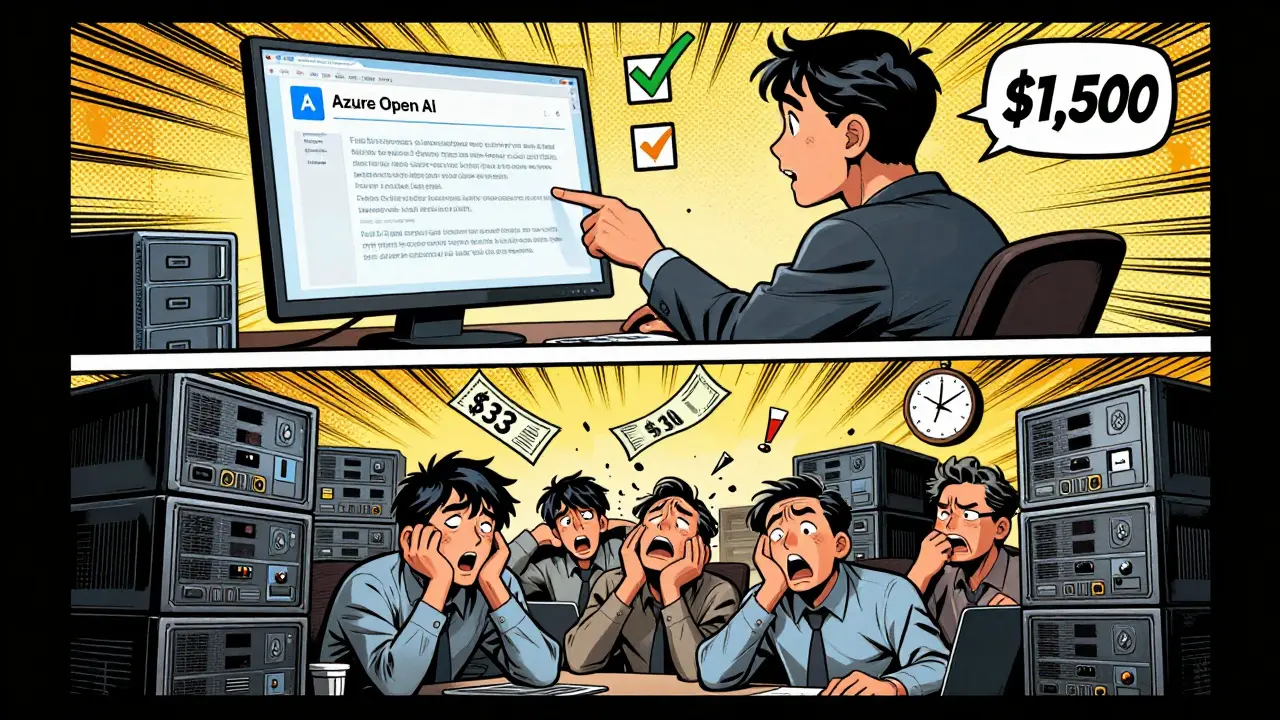

Commercial platforms like Microsoft Azure OpenAI deliver results in 2-8 weeks. You don’t need an AI team. You need a business analyst and an IT integrator. Gartner found that 72% of off-the-shelf tools cover 60-70% of enterprise needs right out of the box. That’s enough for 80% of use cases.

Cost-wise, Azure OpenAI charges $0.0001 per 1,000 input tokens and $0.0003 per 1,000 output tokens. At those rates, a company processing 5 million tokens a month pays about $0.50 to $1.50 in usage fees, and even 5 billion tokens a month comes to roughly $500 to $1,500, not $3 million. And you get SOC 2 compliance, GDPR support, and 99.9% uptime built in.
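
Usage-based pricing is simple arithmetic, so it is worth running the numbers at several volumes before signing anything. The sketch below uses the per-1,000-token rates quoted above as defaults; the function name and the 80/20 input/output split are assumptions made for illustration, not vendor figures.

```python
def monthly_token_cost(input_tokens, output_tokens,
                       input_rate_per_1k=0.0001, output_rate_per_1k=0.0003):
    """Return the monthly usage fee in dollars for the given token counts."""
    input_cost = (input_tokens / 1000) * input_rate_per_1k
    output_cost = (output_tokens / 1000) * output_rate_per_1k
    return input_cost + output_cost


# Assumed workload mix: 80% of tokens are input, 20% are output.
for total_tokens in (5_000_000, 50_000_000, 5_000_000_000):
    cost = monthly_token_cost(0.8 * total_tokens, 0.2 * total_tokens)
    print(f"{total_tokens:>13,} tokens/month: ${cost:,.2f}")
```

Swapping in the rates from your own contract turns this into a quick sanity check on any vendor quote.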

When to Boost: When You Need Customization Without the Heavy Lifting

Boosting is the sweet spot for most mid-sized enterprises. You start with a proven model, such as GPT-4 or Claude 3, and then train it on your internal data: contracts, support tickets, product manuals, compliance policies.

For example, a bank might buy Anthropic’s Claude Enterprise, then feed it 10 years of loan approval notes. The model learns how their underwriters think. It doesn’t need to be trained from scratch. It just needs to understand their language.

Implementation time drops to 30-45% of a full build. Costs go up slightly, because you’re using more compute to fine-tune and run the model, but you avoid the $2.5-$3.5 million annual salary bill for a team of 15-20 AI specialists. Writer.com’s case studies show boosted models deliver 80% of the accuracy of custom builds at 40% of the cost.

When to Build: High-Stakes, Unique, or Proprietary Workflows

Build only if your business depends on something no one else has and the cost of getting it wrong is catastrophic.

- Healthcare diagnostic models trained on rare disease scans

- Financial risk models that predict defaults using non-public market signals

- Manufacturing quality control systems that detect micro-defects invisible to humans

Deloitte’s framework says if an error costs more than $500,000, you should consider building. If it costs over $1 million, you almost certainly should.

But here’s the catch: building means you’re now running a research lab. You need:

- 2,000+ A100 GPUs (cost: $20-30 million)

- Specialized data engineers to clean and label your training data

- ML engineers to tune the model

- MLOps teams to monitor for drift and bias

- Security teams to audit everything

And even then, 68% of organizations exceed their budget by 40% or more. EY’s 2024 survey found 63% of custom builds had security gaps during rollout. And 63% lost key AI staff within 18 months.

Real-World Trade-Offs: Time, Cost, Control

Here’s how the three options compare across key dimensions:

| Factor | Buy | Boost | Build |
|---|---|---|---|
| Time to Deploy | 2-8 weeks | 6-12 weeks | 6-12 months |
| Upfront Cost | $50K-$200K | $200K-$800K | $10M-$50M+ |
| Annual Maintenance | $100K-$500K (usage-based) | $500K-$1.5M | $1.8M-$3.5M |
| Customization Level | Low | Medium | Full |
| Security Compliance | Out-of-the-box (SOC 2, GDPR) | Out-of-the-box + your data controls | You build it from scratch |
| Support & SLAs | 24/7, 99.9% uptime guaranteed | Same as Buy | Internal team, 8+ hour response time |
| Competitive Advantage | Low | Medium | High (if done right) |

Notice something? The "Buy" option wins on speed, cost, and reliability. The "Build" option wins only if you’re creating something no one else can replicate, and you have the resources to protect it.
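
To see how the upfront and maintenance rows combine, here is a rough multi-year total using the ranges from the comparison above. It is a sketch under simplifying assumptions: costs stay flat year over year, and the open-ended "$50M+" build figure is treated as exactly $50M, so the build high end is really a floor. The names are illustrative.

```python
# (low, high) dollar ranges taken from the comparison table.
OPTIONS = {
    "Buy":   {"upfront": (50_000, 200_000),        "annual": (100_000, 500_000)},
    "Boost": {"upfront": (200_000, 800_000),       "annual": (500_000, 1_500_000)},
    "Build": {"upfront": (10_000_000, 50_000_000), "annual": (1_800_000, 3_500_000)},
}


def total_cost_range(option, years):
    """Return (low, high) total cost of ownership over the given number of years."""
    upfront_low, upfront_high = OPTIONS[option]["upfront"]
    annual_low, annual_high = OPTIONS[option]["annual"]
    return upfront_low + years * annual_low, upfront_high + years * annual_high


for name in OPTIONS:
    low, high = total_cost_range(name, years=3)
    print(f"{name:<5} 3-year total: ${low:,} to ${high:,}")
```

Changing `years` shows how slowly the gap closes: maintenance differences matter far less than the upfront cost of a build.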

Hybrid Is the New Default

Companies that win aren’t choosing one path. They’re using all three. IDC reports that 45% of enterprises now use hybrid models. MIT CISR calls it "composable AI": buy the foundation, boost where it matters, build only where you need unique advantage.

For example:

- Use Azure OpenAI to auto-generate customer service replies (Buy)

- Boost it with your internal warranty policy documents to improve accuracy (Boost)

- Build a custom model that predicts equipment failure from sensor data, because that’s your core product (Build)

Microsoft’s November 2024 update to Azure OpenAI Studio lets you fine-tune GPT-4 with your data while keeping it locked inside your enterprise environment. Anthropic’s December 2024 "Claude Enterprise Custom" lets you run a dedicated model instance just for your team. The market is no longer about choosing between build or buy. It’s about choosing the right mix.

What CIOs Get Wrong

Three mistakes keep coming up:

- "We need our own AI to stay competitive." Most companies don’t need to own the model. They need to own the outcome. Amazon’s supply chain AI saved $2.1 billion, but they didn’t build the base model. They used AWS and trained it on their logistics data.

- "We’ll build it later if we need more control." By the time you’re ready to build, you’ve already wasted 18 months and $4 million on a custom bot that does 70% of what a $50K tool could’ve done.

- "We’re too regulated to buy." Google’s Vertex AI and Microsoft’s Azure OpenAI now include 47 pre-certified compliance frameworks. In finance and healthcare, buying is often faster and safer than building your own compliance stack.

How to Decide: A Simple Framework

Use this checklist before you spend a dollar:

- What’s the cost of failure? If an error costs less than $100,000 → Buy. If it’s $500,000+ → Consider Build.

- Is the task standardized? If yes (e.g., drafting emails, summarizing reports) → Buy. If it’s messy, context-heavy, or unique → Boost or Build.

- Do you have proprietary data? If you have unique documents, internal processes, or customer patterns → Boost. If you don’t → Buy.

- Can you afford the team? If you don’t have 15+ AI specialists on payroll → Don’t build.

- How fast do you need results? If you need it in 30 days → Buy or Boost. If you have 12+ months → Then consider Build.

Most teams can answer these in a single meeting. Yet most wait months, then hire consultants to "help them decide." By then, they’ve already sunk $1 million into a project that should’ve been a $50K subscription.
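
The checklist above can be sketched as a small function. The thresholds come from the checklist itself; how the five answers are combined when they point in different directions is an assumption here (Build only when every build precondition holds, otherwise Boost when there is proprietary data or a non-standard task, otherwise Buy), and all names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    failure_cost_usd: float      # cost of a single serious error
    standardized: bool           # e.g. drafting emails, summarizing reports
    has_proprietary_data: bool   # unique documents, processes, customer patterns
    ai_specialists: int          # AI specialists already on payroll
    months_available: float      # time until results are needed


def recommend_path(uc: UseCase) -> str:
    # Build is only considered when every build precondition is met.
    can_build = (
        uc.failure_cost_usd >= 500_000
        and not uc.standardized
        and uc.ai_specialists >= 15
        and uc.months_available >= 12
    )
    if can_build:
        return "Consider Build"
    # Assumed tie-break: proprietary data or a messy task points to Boost.
    if uc.has_proprietary_data or not uc.standardized:
        return "Boost"
    return "Buy"


# Hypothetical example: sales email drafting, low stakes, no special data.
print(recommend_path(UseCase(50_000, True, False, 0, 1)))
```

A function like this won't make the decision for you, but writing the answers down as inputs forces the single meeting to actually happen.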

Where the Market Is Headed

By 2027, Gartner predicts 60% of current GenAI vendors will disappear. The winners will be the big cloud platforms (Microsoft, Google, AWS) because they can afford to keep innovating. They’ll release 10-15 updates a year. Your custom build? It’ll be stuck on a 2024 model, with no one left to maintain it.

Meanwhile, custom AI will become more specialized. You won’t see companies building general-purpose chatbots anymore. You’ll see hospitals building diagnostic assistants. Banks building fraud detection engines. Manufacturers building quality inspectors. These aren’t AI projects. They’re business-critical systems.

Final Thought: It’s Not About AI. It’s About Strategy.

Generative AI isn’t a tool. It’s a lever. And like any lever, you don’t need to build the fulcrum to use it effectively. You just need to know where to place it.

Buy for speed. Boost for precision. Build only when your advantage depends on something no one else can replicate, and you’re willing to pay the price to protect it.

Is it cheaper to build or buy a generative AI platform?

For 95% of organizations, buying is cheaper. Building a custom model requires $20-50 million in upfront hardware, plus $2.5-$3.5 million annually in salaries for a team of 15-20 specialists. Buying a commercial platform like Azure OpenAI costs $50K-$500K annually, depending on usage. Even boosting a commercial model with your data is typically 40-60% cheaper than building from scratch.

Can I build a custom AI model without a big team?

No. Training a reliable, enterprise-grade generative AI model requires a full-stack AI team: data engineers, ML engineers, MLOps specialists, security experts, and compliance officers. You can’t outsource this. Even open-source models like Llama 3 require deep technical expertise to deploy securely at scale. If you don’t have this team, don’t build.

What’s the biggest risk when buying a commercial AI platform?

Vendor lock-in and unexpected usage costs. Many commercial platforms charge per token, and usage can spike unexpectedly, especially if employees start using the tool heavily. A single department using an AI for daily reports could burn through $10,000 in a month. Always set usage caps, monitor spend, and negotiate fixed-price enterprise contracts when possible.
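
A usage cap does not have to be complicated. The sketch below is a hypothetical in-house guard, not any vendor's API: it tracks month-to-date spend, flags when an assumed 80% alert threshold is crossed, and refuses charges that would exceed the cap. Most platforms also offer their own budget controls, which are worth using alongside something like this.

```python
class SpendGuard:
    """Tracks month-to-date spend against a hard cap and a softer alert threshold."""

    def __init__(self, monthly_cap_usd: float, alert_fraction: float = 0.8):
        self.monthly_cap_usd = monthly_cap_usd
        self.alert_threshold_usd = monthly_cap_usd * alert_fraction
        self.spent_usd = 0.0

    def record(self, cost_usd: float) -> str:
        """Register a charge and return 'ok', 'alert', or 'blocked'."""
        # Refuse any charge that would push spend past the cap.
        if self.spent_usd + cost_usd > self.monthly_cap_usd:
            return "blocked"
        self.spent_usd += cost_usd
        if self.spent_usd >= self.alert_threshold_usd:
            return "alert"
        return "ok"


# Hypothetical department budget of $10,000 a month.
guard = SpendGuard(monthly_cap_usd=10_000)
for charge in (7_000, 1_500, 2_000):
    print(f"${charge:,}: {guard.record(charge)}")
```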

Does using a commercial AI platform mean I lose control of my data?

Not if you choose the right vendor. Microsoft Azure OpenAI, Google Vertex AI, and Anthropic’s Enterprise offerings now let you train and run models inside your own cloud environment. Your data never leaves your network. Look for "private inference," "dedicated instances," and "on-prem deployment" options. Avoid any vendor that says "we train on your data to improve our model" unless you’re okay with that.

Should I use open-source models like Llama 3 or Mistral?

Only if you have a large AI team and a specific need that commercial vendors don’t meet. Open-source models are free to use but require heavy infrastructure, security hardening, and ongoing maintenance. Most enterprises spend more on managing them than they save on licensing. For 90% of use cases, commercial platforms with enterprise support are the smarter choice.

How do I know if my use case is worth building?

Ask: If we didn’t have this AI, would we still need to solve this problem? And if we solved it manually, would it cost more than $500,000 per year? If yes, and your data is unique, and your competitors can’t replicate it, then building might make sense. If not, buy or boost.

6 Comments

Jasmine Oey

OMG I just read this and I’m literally crying. Like, who even thinks building is an option anymore?? It’s 2025, not 2018. We’re not in the Stone Age of AI where you had to carve your own models out of granite. Azure OpenAI is literally cheaper than my monthly coffee subscription and it’s smarter than half the people in my org. Why are we still having this conversation??

Marissa Martin

I appreciate the data, but I can’t help but feel like this ignores the moral cost of vendor lock-in. We’re outsourcing our strategic thinking to corporations that don’t care about our industry, our ethics, or our future. Just because it’s cheap doesn’t mean it’s right. What happens when they change the terms? When they stop supporting your model? We’re trading autonomy for convenience, and history always punishes that.

James Winter

Buy? Are you kidding? Canada’s got better tech than this. We don’t need American cloud giants telling us how to run our business. If you can’t build your own AI, you don’t deserve to have one. Stop being lazy. Build it. Or get out of tech.

Aimee Quenneville

So… we’re supposed to believe that buying is the ‘smart’ choice… but then the article says ‘hybrid is the new default’? 🤔 Like, wow. Groundbreaking. I guess ‘buy, boost, build’ is just ‘do all three and hope for the best’ with a fancy name. Also, who wrote this? It reads like a Microsoft sales deck that got drunk at a conference and started quoting MIT. I’m confused. And also… kinda impressed??

Cynthia Lamont

Let’s be real. This whole post is just corporate fluff dressed up like wisdom. You say ‘buy for speed’ but then admit 63% of custom builds have security gaps? That’s not a reason to buy; that’s a reason to fire your security team. And ‘boost’? That’s just ‘buy and then pay extra to make it work.’ And you call that innovation? Please. The only thing being optimized here is the vendor’s revenue. Also, ‘$1,500/month’? That’s what you pay for 5 million tokens? What if you hit 50 million? You’re just hiding the real cost behind a pretty spreadsheet. This isn’t strategy. It’s denial.

Kirk Doherty

I’ve seen this play out three times now. Every time someone says ‘we’ll build later’ they end up stuck with a $4M ghost project and a team that quit. Buy first. Always. Then think about boosting. Build only if you’re ready to become a tech startup.