Why LLM Training Can Leak Your Data

Large Language Models don’t just learn patterns-they memorize. When you feed a model customer emails, medical records, or internal chat logs, it doesn’t just summarize them. It stores fragments of those texts in its weights. And if someone knows what to ask, they can pull out exact sentences from your training data. This isn’t theoretical. In 2024, a major healthcare provider leaked patient names and diagnosis codes after training an LLM on unredacted EHRs. The model reproduced full sentences from real patient notes when prompted with partial identifiers. That’s not a bug. It’s how these models work.

Regulators noticed. The European Data Protection Board (EDPB) issued new guidance in April 2025 stating that LLMs trained on personal data without safeguards violate GDPR. Fines up to 4% of global revenue are no longer a threat-they’re happening. In Q3 2024, the European Commission fined two tech firms over $200 million each for training models on scraped personal data without consent.

What Exactly Is PII in LLM Training?

PII isn’t just names and Social Security numbers. In enterprise contexts, it’s anything that can identify a person, directly or indirectly. That includes:

- Full names, addresses, phone numbers

- Medical record numbers, insurance IDs, diagnosis codes (PHI under HIPAA)

- Employee IDs, payroll details, performance reviews

- Customer account numbers, transaction histories, support ticket transcripts

- Even seemingly harmless details like job titles combined with company names can be re-identifying when cross-referenced with public data

One company in manufacturing thought they were safe because they removed names from their internal emails. But their model still reproduced exact phrases like “Sarah from Procurement approved the $87K order on 2024-06-15.” Sarah’s name wasn’t in the dataset-but the unique combination of role, amount, and date was enough to identify her. That’s why context-aware redaction matters more than keyword lists.

Three Ways to Protect Data in Training Pipelines

There are three main approaches to securing LLM training data, each with trade-offs:

1. Differential Privacy (DP-SGD)

This is mathematically proven protection. It adds noise to the training process so the model can’t learn individual data points with certainty. Tools like Opacus (for PyTorch 1.13.1) make this accessible. But here’s the catch: the more privacy you add, the less accurate the model becomes.

- At ε=2 (high privacy): Accuracy drops 15-20%

- At ε=8 (enterprise standard): Accuracy drops 3-5%

Dr. Cynthia Dwork, who invented differential privacy, says ε≤8 provides “meaningful protection” for most enterprise uses. But Stanford HAILab found that even at ε=8, rare data points-like a single patient with a rare disease-can still be extracted using thousands of targeted prompts. So DP-SGD protects the majority, but not all.

2. AI-Driven PII Redaction (Clio-Style)

Anthropic’s Clio system uses AI to scan training data before it’s fed into the model. It doesn’t add noise-it removes. Using deep learning models trained to spot 150+ PII types, it flags and replaces names, IDs, and sensitive phrases with tokens like [PATIENT_ID] or [ACCOUNT_NUMBER].

Sigma.ai’s September 2024 tests showed these systems achieve 95-98% precision on real-world enterprise data. Clio 2.0, released in December 2025, hits 99.7% accuracy on protected health information (PHI). The big advantage? Model accuracy stays within 1-3% of the original. No trade-off. But there’s a downside: no mathematical guarantee. If the redaction model misses a pattern, the data leaks. That’s why you need validation.

3. Hybrid Systems (Best Practice)

The most reliable approach combines both. Use AI redaction to strip out obvious PII, then apply differential privacy during training. This is now the industry standard. Provectus’s December 2024 analysis of enterprise deployments found hybrid systems reduced PII incidents by 95% while keeping model performance above 94% of baseline accuracy.

For example, a Fortune 500 bank used Clio to redact customer names and account numbers from call center transcripts, then applied DP-SGD (ε=8) during training. Their internal audit showed PII leakage dropped from 37 incidents per month to 2. They also kept their fraud detection model’s accuracy at 92.3%, just 1.1% below the unredacted version.

Tools You Can Use Today

You don’t need to build this from scratch. Here are the top tools in production:

Microsoft Presidio

An open-source PII detection engine integrated into Azure AI. It supports 30+ entity types and lets you train custom detectors. Enterprises rate it 4.2/5 on G2. Users love its flexibility but complain about the learning curve. One data engineer said, “It took six weeks just to tune it for our internal jargon.”

AWS Clean Rooms

Launched in Q2 2024, this lets you train models on sensitive data without ever exposing the raw data. It uses secure enclaves and federated learning. Pricing is $0.45 per million tokens processed. Good for regulated industries, but limited to AWS users.

Anthropic Clio 2.0

Not public, but used by enterprise clients. It’s now the gold standard for healthcare and finance. Its December 2025 update added real-time redaction for voice transcripts and scanned documents, achieving 99.7% PHI detection accuracy.

What Goes Wrong in Real Implementations

Most failures aren’t technical-they’re procedural.

- Synthetic data gone wrong: A hospital generated fake patient records to train an LLM. Their synthetic data included realistic combinations of age, zip code, and treatment history. When cross-referenced with public voter rolls, 12% of “synthetic” records matched real people. They didn’t validate against a “canary set” of known PII.

- Over-reliance on regex: One company used simple regex to remove email addresses. But it missed “j.smith@company[dot]com” or “john dot smith at company dot com.” Their model later reproduced 47 real emails from training data.

- Ignoring metadata: Timestamps, IP addresses, and device IDs in logs can be used to re-identify individuals. One logistics firm didn’t scrub timestamps from delivery logs. Their model learned that “delivery to 987 Oak St at 3:15 PM on Tuesday” meant “John Doe’s house.”

The most common mistake? Thinking redaction is a one-time task. PII detection needs constant tuning. As language changes-new slang, nicknames, internal acronyms-the model needs retraining.

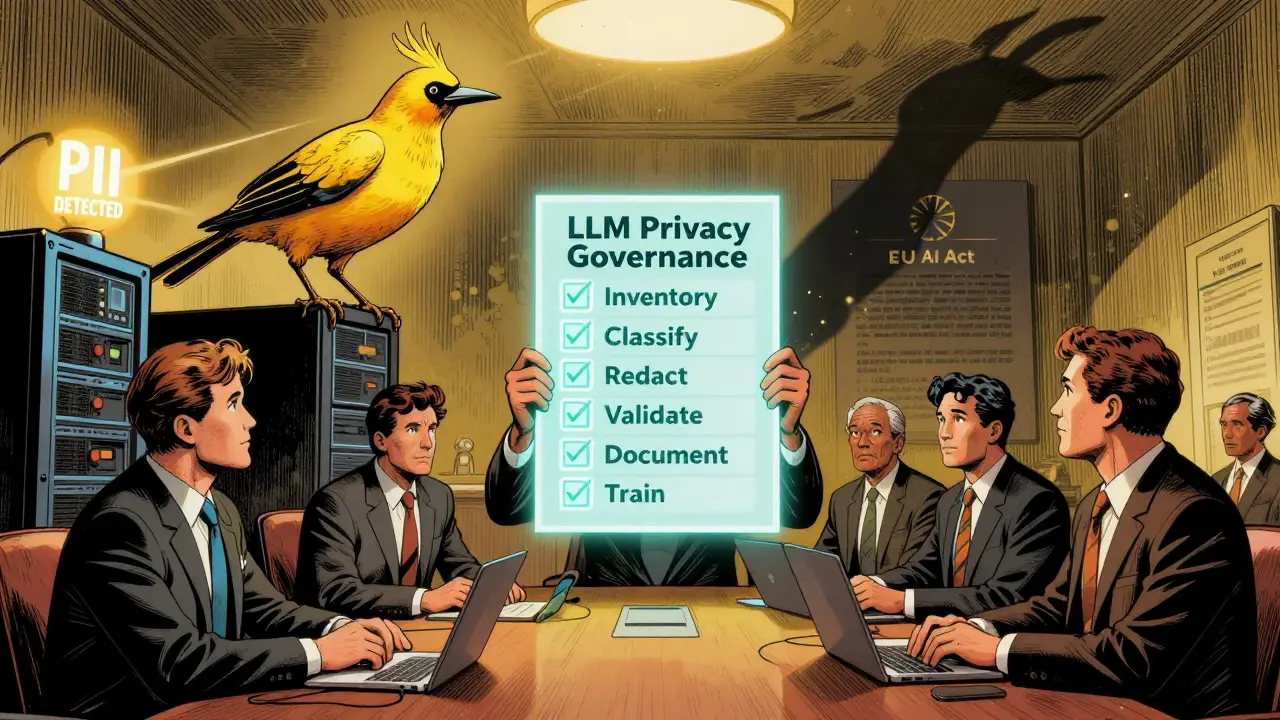

How to Build a Governance Framework

Privacy isn’t just a tool. It’s a process.

- Inventory your data: Map every source feeding into your LLM pipeline-emails, support tickets, logs, documents. GDPR Article 30 requires this. Most companies take 4-8 weeks to complete it.

- Classify by sensitivity: Label data as public, internal, confidential, or restricted. Only restricted data needs redaction.

- Choose your method: Hybrid is best. Use AI redaction first, then DP-SGD.

- Validate with canaries: Insert known PII patterns into your training data. After training, test if the model can reproduce them. If yes, your redaction failed.

- Document everything: Keep logs of what data was used, when it was redacted, who approved it, and which tools were used. Audit trails are mandatory under the EU AI Act (effective August 2026).

- Train your team: Data engineers need 3-6 months to master these tools. LinkedIn Learning’s 2025 report shows 87% of LLM privacy job postings require NLP skills and regulatory knowledge.

Where This Is Headed

By 2026, NIST’s AI Risk Management Framework 2.0 will introduce standardized tests for LLM privacy. Companies will be scored on how well their models resist extraction attacks. The EU AI Act will make compliance mandatory for high-risk systems.

But the fundamental tension remains: more privacy = less accuracy. Dr. Dawn Song put it bluntly at NeurIPS in December 2025: “We cannot simultaneously maximize model accuracy and privacy; the art lies in finding the optimal tradeoff for each specific use case.”

That’s the reality. You won’t get perfect privacy. But you can get good enough. And for most enterprises, that’s enough to avoid fines, protect customers, and keep building.

What You Should Do Right Now

If you’re training LLMs on sensitive data:

- Stop using raw data without scanning it

- Test your model with a canary set-try to extract PII using prompts

- Start with Microsoft Presidio or AWS Clean Rooms if you need a quick start

- Build a redaction pipeline before you train your next model

- Train your team. This isn’t a one-time fix-it’s a new skill set

PII redaction isn’t optional anymore. It’s the baseline. The companies that survive the next wave of AI regulation won’t be the ones with the most powerful models. They’ll be the ones that protected their data first.

Can I just delete names from my training data and call it a day?

No. Simple keyword deletion fails because LLMs learn patterns, not just words. A model can still identify someone from their job title, location, and the timing of events-even without their name. For example, “Sarah from Procurement approved the $87K order on 2024-06-15” can still be extracted. You need context-aware AI redaction that understands relationships between entities, not just lists of forbidden words.

Is differential privacy enough on its own?

Differential privacy (DP-SGD) gives you mathematical guarantees, but it reduces model accuracy. At ε=8, you lose 3-5% performance-acceptable for many applications. But Stanford HAILab found that rare data points (like unique medical cases) can still be extracted with enough adversarial prompts. For maximum protection, combine DP-SGD with AI-based redaction. That’s the industry best practice.

What’s the difference between Clio and Presidio?

Clio is Anthropic’s proprietary AI system designed for enterprise-scale LLM training. It uses deep learning to detect and redact PII with 99.7% accuracy on PHI and is optimized for high-volume, sensitive data like healthcare and finance. Presidio is Microsoft’s open-source tool that lets you build custom PII detectors. It’s flexible and free, but requires more setup and NLP expertise. Clio is a turnkey solution; Presidio is a toolkit.

Can I use synthetic data to avoid privacy issues?

Only if you validate it. Many companies generate synthetic data thinking it’s safe. But if the synthetic data mirrors real patterns too closely-like combining rare conditions with zip codes or job titles-it can still re-identify individuals. A healthcare provider in 2024 found 12% of their synthetic records matched real people when cross-referenced with public data. Always test synthetic data against a known PII “canary set” before training.

How long does it take to implement PII redaction?

It depends. Building a full data inventory and classification policy takes 4-8 weeks for a medium-sized company. Setting up and tuning a redaction system like Presidio or Clio takes another 6-12 weeks. Most organizations need 3-5 rounds of testing and tuning before they get acceptable accuracy and privacy balance. Expect a 3-6 month timeline to go from zero to production-ready.

What skills does my team need to handle LLM privacy?

Your team needs three key skills: NLP expertise to build and tune PII detectors, knowledge of privacy frameworks like DP-SGD and k-anonymity, and understanding of regulations like GDPR and HIPAA. According to Indeed’s Q4 2024 analysis, 87% of LLM privacy job postings require all three. If your team lacks these, invest in training-LinkedIn Learning’s 2025 report shows it takes 3-6 months to become proficient.

7 Comments

vidhi patel

The notion that differential privacy alone is sufficient for enterprise-grade LLM training is not merely optimistic-it is dangerously negligent. At ε=8, the mathematical guarantees are statistically meaningless when adversarial extraction attacks can isolate rare data points with targeted prompts. Stanford HAILab’s findings are not anomalies; they are inevitable outcomes of under-engineered privacy controls. The only responsible approach is hybrid: AI redaction as the first line of defense, DP-SGD as the final, non-negotiable layer. Anything less is a liability waiting for a regulator’s subpoena.

Priti Yadav

lol so now they’re saying we can’t even train AI on our own emails without a PhD in math and a $20M budget? 😂 Meanwhile, the same companies that built this mess are still scraping our social media, medical records, and private texts like it’s 2012. They’re not protecting us-they’re just making us pay more to pretend they care. Clio? Presidio? Please. This is all just corporate theater. The real PII leak? The fact that we still trust these companies at all.

Ajit Kumar

It is imperative to recognize that the fundamental flaw in most organizational approaches to LLM data governance lies not in the tools, but in the cognitive dissonance between policy and practice. The assertion that removing names constitutes adequate redaction is not only incorrect-it is emblematic of a systemic failure in data literacy across engineering teams. Contextual re-identification is not a hypothetical edge case; it is a deterministic outcome when semantic patterns are preserved. Furthermore, the reliance on regex for email obfuscation-despite its documented inadequacy-is not a technical oversight; it is a cultural one. Organizations must institutionalize validation protocols, including canary sets, as mandatory checkpoints-not optional best practices. The EU AI Act will not forgive ignorance; it will penalize it. And those who dismiss this as overregulation are, in effect, choosing obsolescence over accountability.

Diwakar Pandey

Really appreciate this breakdown. I’ve seen too many teams think ‘we scrubbed the names’ and call it done. The Sarah-from-Procurement example? That’s the one that sticks with me. We had the same thing happen last year with delivery logs-timestamps + locations = instant ID. Started using Presidio after this, and honestly, it was a pain to tune for our internal jargon, but worth it. Also, the hybrid approach? Yeah, we tried DP-SGD alone first, lost 18% accuracy on our fraud model. Now we redact first, then add noise. Accuracy down only 1.2%. No fines, no panic. Just quiet, boring, compliant engineering. That’s the win.

Geet Ramchandani

Let’s be brutally honest: this entire post is a luxury for companies with legal teams and budgets. Most startups? They’re training models on scraped Slack archives and customer support exports because ‘we don’t have time.’ And guess what? They’re already getting fined. The EDPB isn’t waiting. The $200M fines aren’t warnings-they’re the new normal. The ‘hybrid approach’ sounds great on paper, but who’s actually got the bandwidth to implement it? The people writing this? Probably not. They’re consultants selling tools. Meanwhile, the engineers on the ground are still using regex because their manager said ‘just fix it by Friday.’ This isn’t a technical problem. It’s a systemic collapse of accountability. And no, Clio won’t save you if your CTO thinks ‘PII’ is a type of coffee.

Pooja Kalra

There is a quiet violence in the way we treat data-as if it were a resource to be consumed, not a reflection of human lives. We speak of ‘redaction’ as if it were a technical fix, when in truth, it is an ethical reckoning. The model does not ‘memorize’-it internalizes. And when it reproduces a patient’s diagnosis or an employee’s salary, it is not malfunctioning. It is mirroring the indifference of the systems that fed it. We build tools to obscure, but we do not build cultures to respect. The real question is not how to scrub data-but whether we are willing to stop harvesting it at all.

Sumit SM

Wait-so you’re telling me that if I use synthetic data, I still have to validate it against a canary set? And if I use regex to remove emails, I’m basically inviting a data breach? And if I skip the inventory phase, I’m violating GDPR Article 30? And if I don’t train my team in NLP + regulations, I’m doomed? And if I don’t combine AI redaction with DP-SGD, I’m not ‘good enough’? And if I don’t document everything, I’m going to get fined? And if I don’t do all this before my next model train, I’m basically a criminal? Okay. Okay. Okay. I’m just… I’m just going to go sit in my car and cry now. This is too much. I just wanted to build a chatbot. Why is everything so complicated now?!!