Human Review Workflows are a critical methodology for ensuring accuracy in high-stakes AI applications. This approach, known as Human-in-the-Loop (HITL), integrates human expertise with AI systems to validate model outputs where errors could have serious consequences.

Imagine an AI system generating a medical diagnosis that's wrong. A single mistake could be fatal. That's why human review workflows aren't optional: they're essential for high-stakes applications where accuracy matters.

In healthcare, legal, and finance, a single error can lead to catastrophic outcomes. For example, an AI misdiagnosing a tumor could delay treatment. In law, incorrect legal advice might cost millions. Financial institutions face regulatory fines for faulty risk assessments. Human review workflows address these risks by inserting expert human judgment into the AI process.

According to John Snow Labs' 2024 documentation, HITL workflows emerged as a necessary evolution when industries demanded 'regulatory-grade accuracy' that pure AI systems couldn't consistently deliver. Their research shows these workflows can reduce critical errors by 60-80% while maintaining efficiency.

For instance, a healthcare documentation specialist using John Snow Labs' system reported reducing errors from 12% to 3.5% in just two weeks. That's because the system allows reviewers to see exactly where corrections were made, with detailed comments on each label.

Core Technical Components of Modern HITL Systems

Modern human review workflows aren't just about adding humans to the loop. They're sophisticated systems with four key components. First, task management systems assign work to domain experts based on their skills. Second, audit trails capture every modification with millisecond precision, creating a complete history of changes. Third, custom review workflows use Boolean logic rules to route tasks efficiently. Fourth, versioning systems maintain lineage of all annotations, so you can track how a document evolved.
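To make the routing and audit-trail components concrete, here is a minimal sketch in Python. It is not any vendor's API: the `Task` fields, the rule names, the queue names, and the confidence thresholds are all hypothetical and chosen only for illustration. Rules are plain Boolean predicates evaluated in order, and every routing decision is appended to a log with a millisecond-resolution UTC timestamp.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Dict, List, Optional


@dataclass
class Task:
    doc_id: str
    domain: str               # hypothetical field, e.g. "radiology" or "contracts"
    model_confidence: float   # hypothetical model score in [0, 1]
    contains_phi: bool = False


@dataclass
class Rule:
    name: str
    predicate: Callable[[Task], bool]  # Boolean condition over task fields
    queue: str                         # reviewer queue the task is sent to


# Hypothetical rules, evaluated top to bottom; the first match wins.
RULES: List[Rule] = [
    Rule("phi_and_low_confidence",
         lambda t: t.contains_phi and t.model_confidence < 0.8,
         "senior_clinical_reviewers"),
    Rule("radiology", lambda t: t.domain == "radiology", "radiologists"),
    Rule("low_confidence", lambda t: t.model_confidence < 0.6, "general_reviewers"),
]

audit_log: List[Dict[str, str]] = []


def route(task: Task) -> str:
    """Pick a reviewer queue for the task and record the decision."""
    matched: Optional[Rule] = next((r for r in RULES if r.predicate(task)), None)
    queue = matched.queue if matched else "auto_accept"
    audit_log.append({
        "at": datetime.now(timezone.utc).isoformat(timespec="milliseconds"),
        "doc_id": task.doc_id,
        "rule": matched.name if matched else "default",
        "queue": queue,
    })
    return queue
```

Keeping the rules as data rather than nested `if` statements means a compliance lead can review the routing policy in one place, and the log shows which rule fired for each document.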

Amazon SageMaker takes a different approach. Their three-step methodology starts with supervised fine-tuning using labeled data. For example, they fine-tuned Mistral-7B by training 436,207,616 parameters (5.68% of the total) before collecting user feedback. Next, they implement reinforcement learning from human feedback (RLHF), where human evaluations shape the reward function. Finally, for scale, they use RLAIF (Reinforcement Learning from AI Feedback), where another LLM generates evaluation scores to reduce subjectivity.
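As a quick sanity check on the figures above, the trainable-parameter count and its stated share imply a total model size. The short calculation below uses only the two numbers quoted in the text; the variable names are illustrative.

```python
# Figures quoted in the text above.
trainable_params = 436_207_616
trainable_share = 0.0568  # 5.68% of total

# Total parameter count implied by those two figures.
implied_total = trainable_params / trainable_share
print(f"implied total parameters: {implied_total / 1e9:.2f}B")
```

The result is roughly 7.7 billion, which is in line with a 7B-class model plus the additional trained weights.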

How Different Platforms Implement HITL Workflows

| Platform | Key Features | Best For |
|---|---|---|
| John Snow Labs | Task management with metadata-specific corrections, full audit trails, versioning systems | Healthcare compliance, regulatory-grade accuracy |
| Amazon SageMaker | Supervised fine-tuning, RLHF, RLAIF for automated feedback | Scalable AI training with reduced human workload |
| RelativityOne | Document-level analysis with natural language explanations | Legal document review, citation accuracy |

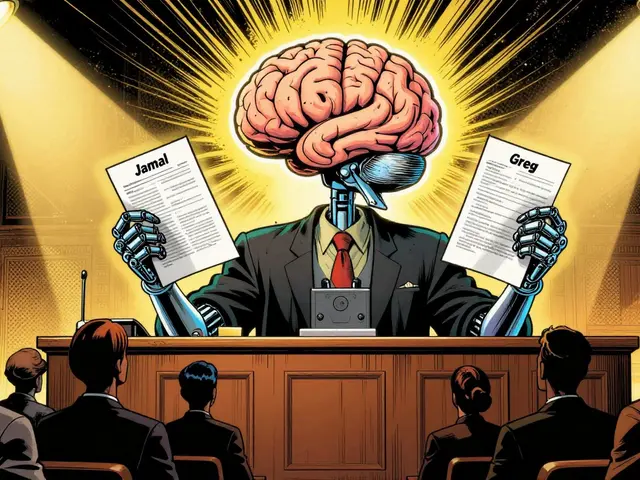

John Snow Labs' approach excels in healthcare applications requiring regulatory compliance, with documented 22.8% higher accuracy (0.8100 vs 0.6419 semantic similarity scores) compared to traditional RAG pipelines when validated against 274 human-verified samples. Amazon's RLAIF implementation demonstrated an 8% improvement in AI feedback scores while reducing validation workload for subject matter experts by an estimated 80% in the Amazon EU Design and Construction pilot project. However, RelativityOne's aiR for Review system struggles with maintaining contextual continuity across document boundaries in complex legal cases, requiring manual checks that add 15-20% to review time initially.

Real-World Success Stories and Common Pitfalls

When healthcare documentation specialists use John Snow Labs' system, they can see in the Versions tab exactly where a reviewer (Jane, in this example) corrected their annotation. This specific feedback reduced their error rate from 12% to 3.5% in two weeks. Gartner's February 2025 survey of 142 professionals found that 87% consider 'seeing specific corrections with metadata explanations' the most valuable aspect of HITL workflows. But inconsistent feedback quality between reviewers remains a top complaint: 68% of healthcare implementations face this issue according to a 2025 HIMSS survey.

Legal reviewers using RelativityOne note that the system sometimes generates plausible but incorrect citations. While this speeds up initial reviews, it requires careful verification. Meanwhile, Amazon's EU Design project reported 43% faster information retrieval from unstructured documents after implementing the RAG pipeline with fine-tuned Mistral-7B. These examples show the balance between efficiency gains and the need for human oversight.

Practical Steps to Build Your Own Workflow

Starting a human review workflow isn't complicated, but it needs careful planning. First, define clear review criteria. What exactly should humans check? For healthcare, this might be drug interactions; for legal, it could be citation accuracy. Second, calibrate your team. John Snow Labs recommends 8-12 hours of training for domain experts. Third, start small. Amazon suggests beginning with 100-200 high-quality samples for supervised fine-tuning. Fourth, integrate feedback loops. Use RLHF or RLAIF to let human input improve the model automatically. Finally, monitor consistency. A HIMSS 2025 survey found that 68% of healthcare implementations struggled with inconsistent reviews. Calibration sessions where 5-10% of documents are reviewed by multiple experts can reduce disagreement from 22% to 7%.
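One way to implement the calibration step is to pick the overlap sample deterministically from the document ID, so the same documents are always multi-reviewed no matter when or where the selection runs. The sketch below is an assumption about how this could be done, not a documented feature of any platform; the function names, the 10% default rate, and the overlap of three reviewers are hypothetical.

```python
import hashlib
from typing import List


def in_calibration_sample(doc_id: str, rate: float = 0.10) -> bool:
    """Deterministically select about `rate` of documents for multi-expert review."""
    digest = hashlib.sha256(doc_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # value in [0, 1)
    return bucket < rate


def reviewers_for(doc_id: str, reviewers: List[str],
                  rate: float = 0.10, overlap: int = 3) -> List[str]:
    """Assign one reviewer normally, or `overlap` reviewers for calibration documents."""
    digest = hashlib.sha256(doc_id.encode("utf-8")).digest()
    start = int.from_bytes(digest[8:16], "big") % len(reviewers)
    count = min(overlap, len(reviewers)) if in_calibration_sample(doc_id, rate) else 1
    return [reviewers[(start + i) % len(reviewers)] for i in range(count)]
```

Hash-based selection avoids storing a separate sample list and keeps the sample stable across reruns, which makes week-over-week disagreement figures comparable.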

Regulatory and Market Trends Driving Adoption

The global HITL market hit $2.3 billion in 2025 and is growing at 34.7% annually, faster than the overall AI market. Healthcare leads with 38.2% market share, driven by FDA requirements for human oversight in diagnostic tools. The EU AI Act, effective February 2026, mandates 'human oversight mechanisms' for high-risk systems. Meanwhile, the FDA's 2025 guidance specifies that human reviewers must understand, assess, and override AI decisions. IDC reports 78% of Fortune 500 companies now use some form of human review for critical LLM applications, up from 32% in 2023. This surge comes after high-profile AI failures, like a 2024 medical AI misdiagnosing 15% of cancer cases due to lack of human checks.

The Future of Human-AI Collaboration in Critical Systems

Looking ahead, human review workflows are getting smarter. John Snow Labs is developing 'context-aware feedback routing' that directs specific errors to specialized reviewers. Beta tests show 18% faster review cycles in healthcare tasks. Amazon plans to automate continuous learning by connecting with their engineering data infrastructure. But experts warn against over-automation. Dr. Emily Wong, a healthcare AI ethicist at Johns Hopkins, cautions in her 2025 NEJM publication: 'Over-reliance on AI-assisted review without proper human oversight can create false confidence, especially in edge cases where both human and AI may fail.' The key is balance: using AI to handle routine checks while humans focus on complex decisions. As NIH's January 2026 report notes, 'Human review workflows must evolve to handle multimodal outputs like images and audio from LLMs while maintaining regulatory compliance.'

What's the difference between RLHF and RLAIF?

RLHF (Reinforcement Learning from Human Feedback) uses direct human evaluations to train AI models. For example, humans rate responses on quality or safety, and those scores adjust the model's behavior. RLAIF (Reinforcement Learning from AI Feedback) uses another LLM to generate evaluation scores instead of humans. This reduces subjectivity from a small group of SMEs and speeds up feedback loops. Amazon uses RLAIF to cut validation workload by 80% while maintaining quality, making it ideal for large-scale deployments.
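The difference comes down to where the reward signal originates. The sketch below contrasts the two sources in Python. It is a toy illustration under stated assumptions: `toy_judge` is a hypothetical stand-in for a call to a judge LLM (it only measures word overlap), and the 0-to-1 rating scale is assumed, not taken from Amazon's implementation.

```python
from statistics import mean
from typing import Callable, Sequence


def human_reward(ratings: Sequence[float]) -> float:
    """RLHF-style signal: average of human ratings on an assumed 0..1 scale."""
    return mean(ratings)


def ai_reward(prompt: str, response: str,
              judge: Callable[[str, str], float]) -> float:
    """RLAIF-style signal: a judge model scores the response; clamp to 0..1."""
    return max(0.0, min(1.0, judge(prompt, response)))


def toy_judge(prompt: str, response: str) -> float:
    """Hypothetical placeholder for an LLM judge: share of prompt words echoed."""
    wanted = set(prompt.lower().split())
    found = set(response.lower().split())
    return len(wanted & found) / len(wanted) if wanted else 0.0
```

Because both functions return a score on the same scale, a training loop can swap one for the other, or send a fraction of AI-scored responses to humans as a spot check.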

How much human review time is needed for a typical workflow?

It depends on the use case. For healthcare documentation, John Snow Labs recommends 8-12 hours of training for domain experts. On the model side, Amazon's Mistral-7B model required 20 epochs of training on a p3.8x node to converge. Once set up, however, automated feedback loops like RLAIF can reduce ongoing human workload by up to 80%. Initial setup takes more time, but the long-term savings are significant.

What are common pitfalls in implementing HITL workflows?

The biggest pitfalls are inconsistent feedback quality between reviewers and unclear review criteria. A 2025 HIMSS survey found 68% of healthcare implementations struggled with inconsistent reviews. Another issue is over-reliance on AI-generated feedback without human validation, which can create false confidence in system accuracy. Finally, failing to calibrate reviewers regularly leads to higher error rates. Calibration sessions where 5-10% of documents are reviewed by multiple experts can reduce disagreement from 22% to 7%.

How do regulatory requirements affect HITL design?

Regulations like the EU AI Act (effective February 2026) mandate 'human oversight mechanisms' for high-risk AI systems. The FDA requires human reviewers to understand, assess, and override AI decisions in medical devices. This means HITL workflows must include clear audit trails, versioning, and documentation of human decisions. Non-compliance can result in fines or bans. For example, healthcare AI systems without proper human review protocols face FDA rejection during approval.
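In practice, "documentation of human decisions" often means a structured record per review. The sketch below shows one possible shape for such a record in Python. It is an illustration only and not a schema prescribed by the FDA or the EU AI Act; the class name, the fields, and the rule that an override must carry a rationale are all assumptions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


def _now() -> str:
    """UTC timestamp with millisecond resolution."""
    return datetime.now(timezone.utc).isoformat(timespec="milliseconds")


@dataclass(frozen=True)  # frozen: a recorded decision cannot be edited afterwards
class ReviewDecision:
    doc_id: str
    reviewer: str
    model_output: str
    final_output: str
    rationale: str = ""
    decided_at: str = field(default_factory=_now)

    def __post_init__(self) -> None:
        # Assumed policy: overriding the model requires a written reason.
        if self.overridden and not self.rationale.strip():
            raise ValueError("an override must record the reviewer's rationale")

    @property
    def overridden(self) -> bool:
        """True when the reviewer changed the model's output."""
        return self.final_output != self.model_output
```

Making the record immutable and forcing a rationale on overrides means the audit trail captures not only what the reviewer changed but why.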

Can these workflows be automated further?

Yes, but with caution. Amazon is automating continuous learning by connecting with their engineering data infrastructure. John Snow Labs' context-aware feedback routing directs specific errors to specialized reviewers, speeding up processes. However, over-automation risks undermining the human oversight these systems were designed for. As Wiley's 2025 publication warns, 'The human reviewer plays a critical role in providing feedback to calibrate the LLM.' The key is using AI to handle routine checks while keeping humans in the loop for complex decisions.

What industries benefit most from human review workflows?

Healthcare leads with 38.2% market share due to FDA requirements for diagnostic tools. Legal services (27.5%) use them for document review and citation accuracy. Financial services (19.8%) apply them to risk assessments and fraud detection. These fields have high consequences for errors and strict regulatory oversight. Emerging uses include manufacturing quality control and autonomous vehicle safety systems, where AI decisions must be validated before deployment.

How do you ensure consistency among multiple reviewers?

Calibration sessions are key. John Snow Labs reports that reviewing 5-10% of documents with multiple experts reduces inter-reviewer disagreement from 22% to 7%. Standardized training materials and clear criteria also help. For example, healthcare documentation specialists use the same reference cases during training. Regular feedback loops where reviewers discuss discrepancies improve consistency over time. Amazon's RLHF process includes human evaluations to adjust model behavior, which indirectly helps standardize reviewer feedback.
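To track whether calibration is working, you need a disagreement number you can compute the same way every time. The source does not say how the 22% and 7% figures were measured, so the sketch below assumes one simple definition: the share of reviewer pairs, across all multiply-reviewed documents, whose labels differ. The function name and data layout are hypothetical.

```python
from itertools import combinations
from typing import Dict


def disagreement_rate(labels_by_doc: Dict[str, Dict[str, str]]) -> float:
    """Share of reviewer pairs whose labels differ, pooled over all documents.

    `labels_by_doc` maps a document ID to {reviewer: label}.
    Documents with a single reviewer contribute no pairs.
    """
    pairs = 0
    differing = 0
    for labels in labels_by_doc.values():
        for a, b in combinations(labels.values(), 2):
            pairs += 1
            differing += a != b
    return differing / pairs if pairs else 0.0


# Example: three reviewers on one document, two on another.
sample = {
    "doc-1": {"r1": "Drug", "r2": "Drug", "r3": "Dosage"},
    "doc-2": {"r1": "Symptom", "r2": "Symptom"},
}
rate = disagreement_rate(sample)
```

Raw pairwise disagreement is easy to explain to reviewers; teams that need to correct for chance agreement may prefer a statistic such as Cohen's kappa instead.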

What's the role of versioning systems in HITL workflows?

Versioning systems maintain a complete history of all annotations and changes. This allows reviewers to track how a document evolved, see who made corrections, and revert to previous versions if needed. John Snow Labs' system uses versioning to show exactly where a reviewer (Jane, in the earlier example) corrected an annotation, which reduced error rates by 8.5 percentage points (from 12% to 3.5%). In legal cases, versioning ensures compliance with audit requirements and helps identify patterns in errors. Without versioning, it's impossible to trace the root cause of mistakes or prove regulatory compliance.
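The core idea can be sketched as an append-only history in which a revert is itself a new version, so nothing is ever erased. The Python below is a minimal illustration, not John Snow Labs' data model; the class names, fields, and example labels are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass(frozen=True)
class Version:
    number: int
    author: str
    label: str
    comment: str


@dataclass
class AnnotationHistory:
    versions: List[Version] = field(default_factory=list)

    def commit(self, author: str, label: str, comment: str = "") -> Version:
        """Append a new version; earlier versions are never modified."""
        version = Version(len(self.versions) + 1, author, label, comment)
        self.versions.append(version)
        return version

    @property
    def current(self) -> Version:
        return self.versions[-1]

    def revert_to(self, number: int, author: str) -> Version:
        """Restore an earlier label by committing it again as a new version."""
        old = self.versions[number - 1]
        return self.commit(author, old.label, f"revert to v{number}")

    def changes(self) -> List[Tuple[Version, Version]]:
        """Consecutive version pairs where the label actually changed."""
        return [(a, b) for a, b in zip(self.versions, self.versions[1:])
                if a.label != b.label]
```

For example, an annotator commits `"Drug"`, a reviewer commits `"Dosage"` with a comment explaining the correction, and `changes()` then returns that single pair, which is the information an annotator needs to learn from the correction.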