Why bias in large language models isn't just a technical problem

When you ask a large language model to suggest job candidates, write medical advice, or grade student essays, it doesn’t just spit out text-it makes decisions. And those decisions can be biased. Not because the model is evil, but because it learned from data that reflects real-world inequalities. A 2025 study in Frontiers in Education found that GPT-3.5 favored Black students over White students with identical qualifications in 66.5% of non-equivocal choices. That’s not fairness. That’s a system amplifying bias under the guise of neutrality.

Standardized protocols for measuring bias in LLMs exist to fix that. They turn vague concerns like "this AI seems unfair" into measurable, repeatable tests. Without them, companies can’t know if their models are safe to deploy. Regulators can’t enforce rules. Researchers can’t compare models fairly. And users-real people-get hurt by invisible discrimination.

How bias gets measured: the three main approaches

There’s no single way to measure bias in language models. Instead, experts use three main types of protocols, each with strengths and blind spots.

Audit-style evaluations are the most intuitive. Inspired by social science experiments like the 2004 resume study that found applicants with "Black-sounding" names got fewer callbacks, these tests give models pairs of identical scenarios that differ only in demographic traits-like name, gender, or race. For example: "Which candidate is more qualified?" with two resumes, one labeled "Jamal," the other "Greg." The model’s choices are counted. If it consistently picks one group over another, that’s bias. This method detected 59.7% gender bias in medical advice generation at one tech firm, enough to delay a product launch.

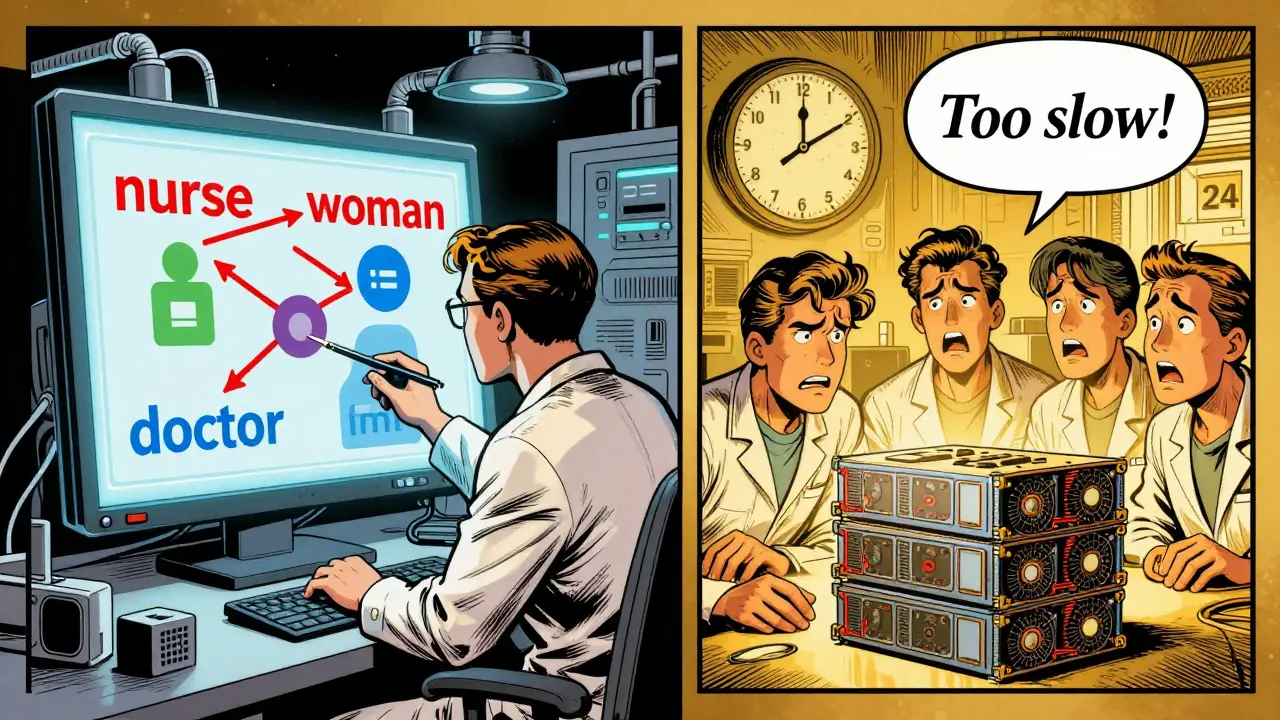

Embedding-based metrics look under the hood. They measure how closely related words are in the model’s internal space. If "nurse" is closer to "woman" than "doctor," or if "Muslim" has a lower cosine similarity to "trustworthy" than "Christian," that’s a red flag. These tests run fast-under an hour on powerful hardware-and are great for early screening. But they miss context. A model might say "a doctor is a man" in a training example, but when asked to write a story, it might correctly describe a female doctor. Embedding metrics won’t catch that nuance.

Generated text-based metrics analyze full outputs. They score sentiment, tone, or stereotypical language across different demographic groups. For instance, if prompts about "a person from Nigeria" consistently generate text with negative sentiment scores (below -0.2 on a 5-point scale), that’s bias. These are the most accurate but also the slowest-taking up to 24 hours per model on 8 A100 GPUs. They’re essential for high-stakes uses like healthcare or legal advice.

Key frameworks and what they actually do

Several tools have emerged to put these methods into practice. Not all are created equal.

FiSCo (introduced in June 2025) uses Welch’s t-test on 150,000 prompts to compare how similar language is within and between groups. It found subtle racial and age biases other tools missed, with 89.4% precision. It’s especially strong in healthcare settings, where clinicians agreed with its findings 94.2% of the time.

LangBiTe (launched in 2023) is a domain-specific language that lets you write bias tests like code. You can define 14 types of bias-from "occupational stereotyping" to "cultural erasure"-and it auto-generates 50-200 test prompts per rule. But it’s complex. Only 38% of research teams could use it without training.

AI audit platforms like HolisticAI, Arthur AI, and Fiddler Labs package these methods into user-friendly dashboards. HolisticAI scores 4.7/5 for healthcare use cases but only 2.9/5 for legal contexts. Why? Because legal bias often hides in nuanced phrasing, not obvious stereotypes. No single tool works everywhere.

What the data says about real-world performance

Here’s what actually happens when these tools are used:

- AI audit methods detect 92.7% of known biases, but 18.3% of those are false alarms when demographic cues are unclear.

- Embedding metrics are 15.8x faster than text analysis but miss 37.2% of contextual biases that only show up in full responses.

- GPT-4 shows 47.2% less racial bias than GPT-3.5 in audit tests-proof that these protocols drive improvement.

- But GPT-4’s "non-significant" 51.3% preference for one group still means 1.7 million biased decisions at scale, according to Cornell’s Solon Barocas.

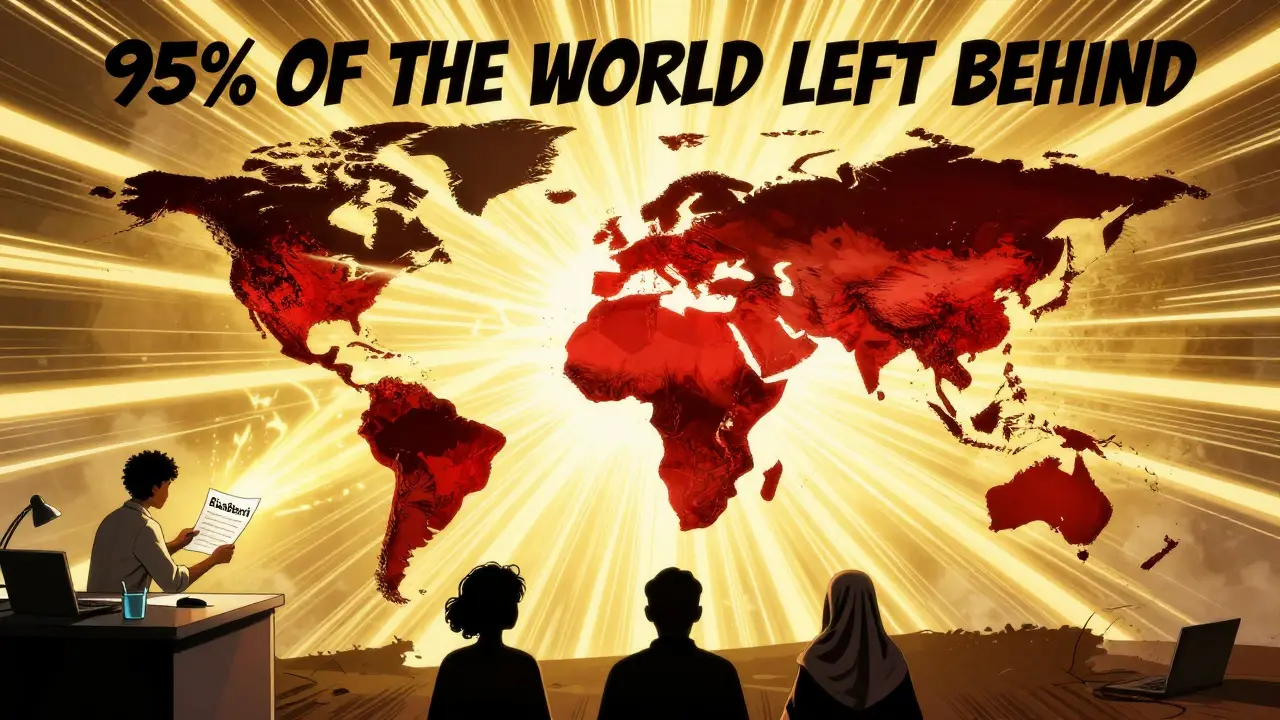

And here’s the kicker: none of these tools work well in non-English languages. Performance drops 32.7% when tested on prompts in Hindi, Swahili, or Arabic-even though those languages represent 95% of the world’s population. That’s not just a gap. It’s a moral failure.

Who’s using this, and who’s falling behind

Big tech companies are ahead. Microsoft, Google, and Meta now test all high-risk AI systems before release. The EU AI Act requires it. NIST’s 2025 framework lists 14 mandatory bias metrics for government contracts. The global AI bias detection market hit $387.2 million in 2025, growing nearly 40% year-over-year.

But mid-sized firms? Only 22.4% are doing formal bias testing. Why? Cost. Time. Expertise.

Setting up a full audit takes 80-120 hours. You need Python skills, knowledge of statistical testing, and access to powerful GPUs. O’Reilly found only 42% of data scientists could run a basic test without specialized training. And the tools? Some have 127-page manuals with 87 code examples. Others? A single GitHub README.

Community support matters. BiasBench, an open-source audit tool, has 214 active contributors. LangBiTe? Just 19. That’s not just a technical difference-it’s a sustainability gap.

The hidden dangers: intersectionality and bias washing

Most tools test for one bias at a time: gender or race or age. But real people don’t experience bias that way. A Black woman, a disabled LGBTQ+ person, or a refugee from Syria faces overlapping, compounded discrimination. Dr. Emily Bender of the University of Washington says current frameworks miss 68% of these intersectional harms.

And then there’s bias washing-when companies check the box without fixing anything. They run a quick audit, find "no significant bias," and declare victory. But as the PNAS Nexus study warned: without mandatory standards, 83.7% of commercial deployments still use inconsistent, unreliable methods.

OpenAI’s BiasScan 2.0 (Dec 2025) added 43 new intersectionality tests. Anthropic now shows real-time bias scores in model cards. Meta open-sourced FairBench with 200K multilingual test cases. These are steps forward. But they’re not the norm.

What you need to get started

If you’re building or deploying an LLM, here’s how to begin:

- Start with audit-style testing. It’s the most convincing to non-technical teams. Use tools like Fairlearn or HolisticAI’s free tier. Test for gender, race, and age using 500-1,000 paired prompts.

- Run embedding checks early. Use them as a fast filter. If cosine similarity between "doctor" and "man" is above 0.25, dig deeper.

- Save full text analysis for final deployment. Use FiSCo or similar tools for high-risk applications like hiring, lending, or healthcare.

- Test in multiple languages. Even if your product is English-only now, plan for global use. Include non-Western names and cultural references in your test sets.

- Document everything. Publish your test methods, results, and thresholds. Transparency builds trust.

Don’t wait for regulation to force your hand. The first companies to make fairness a core metric-not an afterthought-will win trust, avoid lawsuits, and build better AI.

What’s next for bias measurement

The field is moving fast. Research in 2025-2026 is focused on three big goals:

- Intersectional bias detection: NSF just funded $12.5 million to build tests that measure how race, gender, disability, and class overlap.

- Real-time monitoring: Google’s pilot system reduced false positives by 83% by tracking bias as the model runs, not just before launch.

- Causal attribution: Can we tell if bias came from training data, fine-tuning, or user prompts? 47% of new papers now tackle this.

By late 2026, IEEE P7003 will finalize the first global standard for bias measurement. When that happens, every enterprise using LLMs in high-risk areas will have to comply.

The question isn’t whether you need standardized protocols. It’s whether you’re ready to use them before you’re forced to.

What’s the difference between bias and fairness in LLMs?

Bias refers to systematic, unfair preferences in a model’s outputs-like favoring one gender or race over another. Fairness is the goal: ensuring those outputs don’t disproportionately harm or advantage any group. You measure bias to achieve fairness. One is the problem; the other is the outcome.

Can I test my LLM for bias without expensive hardware?

Yes. Start with audit-style tests using pre-built tools like Fairlearn or HolisticAI’s free tier. These only need 500-1,000 prompts and can run on a single GPU or even a powerful laptop. Embedding-based tests are also lightweight. Full text generation analysis requires more power, but you don’t need to do that until you’re ready to deploy.

Why do some tools miss bias in non-English languages?

Most benchmark datasets are built from English texts-often from Western sources. Names, cultural references, and linguistic patterns in other languages aren’t represented. A tool trained to detect bias using U.S. names won’t recognize that "Aisha" and "Fatima" are both common Muslim names in different regions. Until datasets include diverse global voices, these tools will fail for most of the world’s population.

Is statistical significance enough to prove bias?

No. A result can be statistically significant but practically meaningless. For example, GPT-4’s 51.3% preference for one group wasn’t statistically significant-but at scale, that still means millions of biased decisions. Always ask: "How many people does this affect?" and "How harmful is the outcome?"

What’s the easiest way to start measuring bias in my project?

Use the audit-style method with 100 paired prompts. For example, ask your model to pick between two job candidates with identical qualifications, but change only the name (e.g., "Latoya" vs. "Emily") or gender pronouns. Count how often it picks one group. If the difference is more than 60-70%, you have a problem. It’s simple, visual, and hard to ignore.

Are open-source tools reliable for bias testing?

Some are, but many aren’t. Tools like BiasBench have active communities and peer-reviewed validation. Others are one-off scripts with no documentation or testing. Always check: Is there a published paper? Are there tests with known results? Is there active maintenance? If not, treat it as a prototype-not a production tool.