Imagine your customer service bot accidentally sends a patient’s medical record number to a public AI model. Or your support team’s chatbot leaks credit card details because it pulled them straight from a support ticket. This isn’t science fiction; it’s what happened in real enterprise systems before Privacy-Aware RAG became a standard. Today, companies using Retrieval-Augmented Generation (RAG) to power their AI assistants face a hard truth: if you feed raw data into a large language model (LLM), you’re risking a data breach, even if the model itself is secure.

Why Standard RAG Is a Privacy Time Bomb

Standard RAG works by pulling text from internal documents, chopping it into small pieces, turning those pieces into vectors, and then feeding them, along with your question, into an LLM. The model reads everything. Every name, every number, every confidential note. That’s the whole point: give it context so it answers accurately. But here’s the catch: if you’re using a third-party LLM API like OpenAI’s or Google’s, that data travels over the internet. And once it’s there, you lose control.

Research from Lasso Security in May 2024 found that 68% of early RAG systems exposed sensitive data through unredacted prompts or documents. That’s not a bug; it’s the default behavior. A financial services firm in Chicago paid $2.1 million in fines after their RAG system sent 14,000 customer Social Security numbers to an external API. The AI didn’t steal the data. It just repeated it. And because the model was trained on public data, it didn’t even know it was violating privacy.

What Privacy-Aware RAG Actually Does

Privacy-Aware RAG flips the script. Instead of letting raw data flow into the LLM, it filters it out before the model ever sees it. There are two main ways this happens.

First: Prompt-only privacy. This is like a real-time redactor. When someone asks a question, the system scans the input and any retrieved documents for things like names, addresses, account numbers, or medical codes. It replaces them with placeholders like [REDACTED_PII] or [PATIENT_ID]. Only this cleaned version gets sent to the LLM. The original data stays locked down on your servers. This approach adds just 150-300 milliseconds per query, according to 4iApps’ March 2024 tests, so users don’t notice the delay.
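The prompt-only flow can be sketched in a few lines of Python. Treat this as a minimal illustration rather than a production detector: the three patterns, the placeholder labels, and the `redact` and `build_prompt` names are assumptions made up for the example, and regular expressions on their own miss plenty of real-world PII.

```python
import re

# Illustrative patterns only (assumed for this sketch); real deployments
# need much broader coverage and locale-specific formats.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13 to 16 digits
}


def redact(text):
    """Replace every pattern match with a typed placeholder such as [SSN]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def build_prompt(question, retrieved_chunks):
    """Redact the question and each retrieved chunk before assembling the
    text that will be sent to the external LLM."""
    context = "\n".join(redact(chunk) for chunk in retrieved_chunks)
    return f"Context:\n{context}\n\nQuestion: {redact(question)}"
```

Only the string returned by `build_prompt` would leave your servers; the unredacted question and chunks stay local.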

Second: Source documents privacy. This is a pre-processing step. Before any documents are even turned into vectors, they’re scrubbed. Every SSN, every credit card, every PHI (Protected Health Information) is removed from a copy of the source files. Then, the cleaned versions are stored in your vector database. This means every future query uses only safe data. The trade-off? You need 20-40% more storage space because you’re keeping both the original and redacted versions. But real-time speed improves by 35-50%, as Salesforce’s Q2 2024 tests showed.
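Here is a rough sketch of what that ingestion step might look like. Everything named here (`scrub`, `chunk`, `ingest`, the record layout, the single SSN pattern) is hypothetical, and the embedding and vector database calls are left out on purpose; the point is only the ordering of the steps.

```python
import re

SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")  # one illustrative pattern


def scrub(text):
    """Remove identifiers from a copy of the document text."""
    return SSN_RE.sub("[SSN]", text)


def chunk(text, size=200):
    """Split text into fixed-size character chunks."""
    return [text[i:i + size] for i in range(0, len(text), size)]


def ingest(docs, size=200):
    """Return (originals, index_records).

    originals stays in a restricted store; only index_records, built from
    scrubbed text, would be embedded and written to the vector database.
    """
    originals = {}
    index_records = []
    for doc_id, text in docs.items():
        originals[doc_id] = text
        # Scrub BEFORE chunking so an identifier cannot be split across
        # two chunks and slip past the pattern.
        for n, piece in enumerate(chunk(scrub(text), size)):
            index_records.append({"doc_id": doc_id, "chunk": n, "text": piece})
    return originals, index_records
```

Keeping both `originals` and the scrubbed records is where the extra storage mentioned above comes from.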

Both methods rely on high-accuracy PII detection. K2View’s 2024 whitepaper says you need 99.98% accuracy to meet GDPR or HIPAA standards. That’s not easy. A system might catch “123-45-6789” easily, but miss “John’s SSN is 123-45-6789.” That’s why top implementations use hybrid models: rule-based filters for structured data (like credit cards) and AI-driven context analysis for messy text.
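To make the rule-based half concrete, here is one way a credit card filter could work: a loose digit pattern finds candidates, and the Luhn checksum (the check-digit scheme used on payment card numbers) decides whether a candidate really looks like a card. The function names and the pattern are assumptions for this sketch, and the AI-driven context analysis is out of scope here.

```python
import re

# Candidate pattern: 13 to 19 digits, optionally separated by spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_ok(number):
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:      # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact_cards(text):
    """Redact only digit runs that pass the checksum, which cuts false
    positives on order numbers and other long identifiers."""
    def repl(match):
        return "[CARD]" if luhn_ok(match.group()) else match.group()
    return CARD_RE.sub(repl, text)
```

The design choice is a trade: the checksum reduces over-redaction, but a mistyped card number fails the check and stays in the text, so a stricter deployment might redact every candidate instead.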

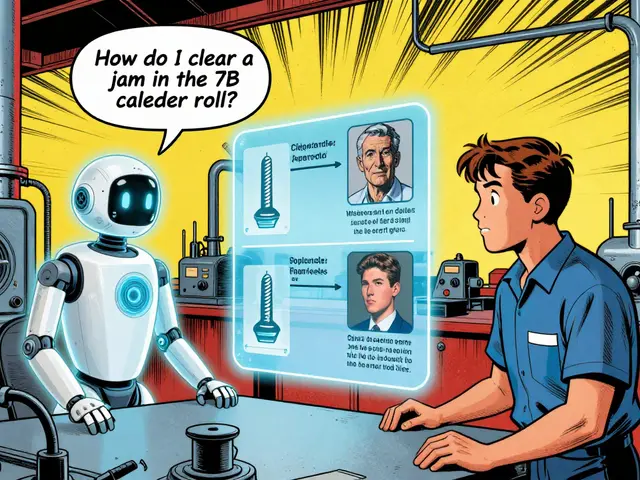

Accuracy vs. Privacy: The Tightrope Walk

You can’t have perfect privacy and perfect answers. There’s always a trade-off.

Standard RAG gets 92.3% factual accuracy in enterprise tasks. Privacy-Aware RAG, with aggressive redaction, drops to 88.7%. That might sound bad, but Google Cloud’s November 2024 case study with healthcare clients showed that with smart redaction thresholds, the gap shrinks to just 2.1%. The key is knowing what to remove and what to leave.

For example, if you’re answering “What’s the average recovery time for patients with Type 2 diabetes?” you don’t need names or IDs. But you do need the clinical data. Privacy-Aware RAG can keep the medical facts while removing identifiers. That’s the sweet spot.

But if your question is “What was John Doe’s last lab result?” and you’ve redacted his name, the model might say “I can’t answer that.” That’s not a failure; it’s a feature. You don’t want the AI guessing or hallucinating patient data.

Where Privacy-Aware RAG struggles? Numerical extraction. Deloitte’s banking analysis found accuracy drops from 94.1% to 82.6% when redacting financial figures. If your use case requires precise numbers, like “What’s the total loan balance for client 78901?”, you need careful tuning. Some teams use token-level masking, keeping the number’s structure (e.g., “$X,XXX.XX”) while hiding the digits. Others use synthetic data generation to replace real numbers with plausible fakes.
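Structure-preserving masking is simple to sketch. The example below assumes US-style dollar amounts and uses a made-up `mask_amounts` helper; other currencies and number formats would need their own patterns.

```python
import re

# Dollar amounts such as $950, $4,210.55 or $1200.5 (assumed format).
AMOUNT_RE = re.compile(r"\$\d[\d,]*(?:\.\d+)?")


def mask_amounts(text):
    """Replace each digit in a dollar amount with X, keeping the currency
    symbol, separators, and decimal point so the shape stays visible."""
    return AMOUNT_RE.sub(lambda m: re.sub(r"\d", "X", m.group()), text)
```

For instance, `mask_amounts("Balance is $4,210.55 as of May")` returns `"Balance is $X,XXX.XX as of May"`. The model can still tell that a four-figure dollar amount was present, but not what it was.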

Who’s Using It, and Why

Adoption isn’t universal. It’s concentrated where the stakes are highest.

Financial services lead with 58% adoption, according to a November 2024 Accenture-Deloitte survey. JPMorgan Chase’s pilot program achieved 99.2% compliance with FINRA rules. Why? Because leaking a client’s investment history isn’t just embarrassing; it’s illegal.

Healthcare follows at 47%. Mayo Clinic’s April 2024 evaluation showed 98.7% protection of PHI. But one misstep cost a hospital $1.2 million in penalties after a redaction failure exposed 14,000 patient records. The HHS Office for Civil Rights cited it as a textbook case of “inadequate data sanitization.”

Government agencies (39% adoption) use it to protect citizen records. Retail and manufacturing? Only 22% and 18%. Why? They’re not under the same regulatory pressure. But that’s changing. The EU AI Act requires privacy-by-design in AI systems by Q3 2025. Companies that wait will get slapped with fines up to 7% of global revenue.

Implementation Challenges You Can’t Ignore

Setting up Privacy-Aware RAG isn’t plug-and-play. It takes time, skill, and testing.

Most companies report 8-12 weeks of dedicated work to get it right, according to 4iApps’ October 2024 survey. You need people who understand NLP, data security, and LLM operations. Job postings for RAG roles now list LangChain, LlamaIndex, Pinecone, and Weaviate as must-haves. 68% of positions require vector database experience.

Open-source tools are available, but their documentation averages just 3.2/5 on GitHub. Commercial platforms like Private AI score 4.6/5 for clarity. If you’re not a team of AI engineers, go commercial.

One big gotcha: context-dependent PII. “John’s SSN is 123-45-6789” needs to keep “John” (he’s the subject) but remove the number. Rule-based systems fail here. You need custom entity recognition trained on your own data: medical records, financial forms, support tickets. Google Cloud’s new “context-aware redaction” in Vertex AI handles this better than most tools.

And don’t forget monitoring. Gartner found 61% of tested Privacy-Aware RAG systems missed edge-case PII. That’s why quarterly adversarial testing is now a best practice. Try asking: “Tell me the last 3 patients treated by Dr. Smith.” If the system leaks names, it’s broken.
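An adversarial check like this can be automated with planted test records. The sketch below assumes you can call your pipeline and capture the exact text it would send to the external LLM; `outbound_for`, `find_leaks`, and the planted values are all hypothetical names for illustration.

```python
def find_leaks(outbound_for, probes, planted_secrets):
    """Run each adversarial probe through the pipeline and report every
    planted secret that appears in the outbound text.

    outbound_for: callable taking a question and returning the full text
                  that would be sent to the external LLM (assumed hook).
    probes: adversarial questions to try.
    planted_secrets: fake names or IDs seeded into the test corpus.
    """
    leaks = []
    for probe in probes:
        outbound = outbound_for(probe).lower()
        for secret in planted_secrets:
            if secret.lower() in outbound:
                leaks.append((probe, secret))
    return leaks
```

An empty list means none of the planted values surfaced for these probes. It does not prove the system is safe, only that this particular set of tricks failed, which is why the probe list should keep growing.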

What’s Next for Privacy-Aware RAG

The field is moving fast. In October 2024, Private AI released version 2.3 with “adaptive redaction thresholds”, which learn how much to redact based on the question’s sensitivity. That cut over-redaction by 31%.

Google Cloud’s November 2024 update improved healthcare document accuracy to 93.2%. NIST is drafting RAG-specific privacy guidelines due in Q2 2025. The IETF formed a working group in September 2024 to standardize privacy-preserving retrieval protocols.

But the biggest threat isn’t bad tech; it’s false confidence. Organizations think “we’ve got Privacy-Aware RAG, we’re safe.” Then they skip testing. Or they use it for fine-tuning and forget to scrub training data. Gartner warns that 68% of companies still struggle with privacy during fine-tuning.

MIT’s June 2024 research predicts a 12-18 month “vulnerability window” for any new privacy technique. Hackers are already training models to reconstruct redacted data. So the real win isn’t just deploying Privacy-Aware RAG; it’s building a culture of continuous validation.

When to Use It (and When Not To)

You should use Privacy-Aware RAG if:

- You handle PII, PHI, PCI, or other regulated data

- You use third-party LLM APIs

- You’re under GDPR, HIPAA, CCPA, or similar laws

- Your users expect confidentiality

You might skip it if:

- You’re using a fully on-premises LLM (air-gapped)

- Your data is public or non-sensitive

- You’re doing rapid prototyping and compliance isn’t a concern yet

But here’s the thing: even if you’re not regulated now, you will be. The market for Privacy-Aware RAG is projected to hit $2.8 billion by 2026. Gartner predicts 85% of enterprise RAG deployments will include privacy features by then. Waiting isn’t an option; it’s a liability.

Is Privacy-Aware RAG the same as data anonymization?

No. Anonymization permanently removes or alters data so it can’t be traced back to individuals. Privacy-Aware RAG doesn’t change your original data; it only masks it temporarily during LLM queries. The original documents stay intact. This lets you keep full data integrity for internal use while protecting it from external AI exposure.
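One way to picture “temporary” masking is a reversible token map that never leaves your side of the connection. This is a sketch under assumptions: the `mask` and `unmask` helpers, the `[SSN_n]` token format, and the idea of restoring values in the model’s reply are illustrative choices, not a description of any particular product.

```python
import re

SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")  # one illustrative pattern


def mask(text):
    """Swap each distinct SSN for a numbered token.

    Returns (masked_text, mapping), where mapping goes token -> original
    value and is kept locally, never sent to the LLM.
    """
    token_for = {}

    def repl(match):
        value = match.group()
        if value not in token_for:
            token_for[value] = f"[SSN_{len(token_for) + 1}]"
        return token_for[value]

    masked = SSN_RE.sub(repl, text)
    mapping = {token: value for value, token in token_for.items()}
    return masked, mapping


def unmask(text, mapping):
    """Restore original values in text that still carries the tokens,
    for example the model's reply, before showing it to an authorized user."""
    for token, value in mapping.items():
        text = text.replace(token, value)
    return text
```

Reusing the same token for a repeated value lets the model see that two mentions refer to the same thing without seeing the value itself.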

Can I use Privacy-Aware RAG with OpenAI or Google’s LLMs?

Yes, and that’s the whole point. Privacy-Aware RAG works as a shield between your data and third-party LLM APIs. Tools like Private AI, Lasso Security, or Google’s Vertex AI Privacy Controls intercept your prompts and documents, scrub them, and send only the clean version to OpenAI, Cohere, or Gemini. Your sensitive data never leaves your network.

Does Privacy-Aware RAG slow down my AI responses?

Minimal impact. Prompt-only privacy adds 150-300ms per query, barely noticeable to users. Source documents privacy can actually speed things up by 35-50% because the LLM gets cleaner, pre-filtered data. The real delay comes from setup and tuning, not runtime performance.

What’s the biggest mistake companies make with Privacy-Aware RAG?

Assuming it works out of the box. Most tools require tuning for your specific data. A healthcare provider in 2024 failed because their redaction tool missed medical record numbers embedded in free-text notes. They didn’t test edge cases. Always run adversarial tests: try to trick the system into leaking data. If it can’t handle it, your setup isn’t ready.

Do I need to rebuild my entire RAG system to add Privacy-Aware features?

No. Privacy-Aware RAG is designed as a layer you plug into existing RAG pipelines. If you’re using LangChain or LlamaIndex, you can insert a redaction step before the LLM call. No need to rewrite your vector database or retrieval logic. Just add the privacy filter.
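In framework-agnostic terms, the “layer” can be as small as a wrapper around whatever function currently calls your LLM. The sketch below deliberately avoids any real LangChain or LlamaIndex API; `with_redaction`, `redact`, and the stand-in `llm_call` are hypothetical names, and the single SSN pattern is a placeholder for a real detector.

```python
import re
from typing import Callable

SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")  # placeholder detector


def redact(text: str) -> str:
    """Stand-in redactor; swap in your real PII filter here."""
    return SSN_RE.sub("[SSN]", text)


def with_redaction(
    llm_call: Callable[[str], str],
    redactor: Callable[[str], str] = redact,
) -> Callable[[str], str]:
    """Wrap an existing prompt -> response function so every prompt is
    redacted first. Retrieval and vector storage are left untouched."""
    def guarded(prompt: str) -> str:
        return llm_call(redactor(prompt))
    return guarded
```

Usage would look like `ask = with_redaction(existing_llm_call)`, after which the rest of the pipeline calls `ask` instead of the original function.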

How do I know if my Privacy-Aware RAG system is working?

Measure false negatives. Set up a test suite with known sensitive data and see if your system catches it every time. Aim for a false negative rate below 0.5%. Also, audit logs: if any raw PII appears in API calls to OpenAI or Google, your system is broken. Monitor that. Weekly.
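A false negative check along these lines can be written as a small scoring function. The labeled-sample format and the `false_negative_rate` and `passes` names are assumptions for this sketch; the 0.5% threshold is the target mentioned above.

```python
def false_negative_rate(redactor, labeled_samples):
    """Fraction of known sensitive values that survive redaction.

    labeled_samples: iterable of (text, secrets) pairs, where secrets lists
    the sensitive substrings that must not appear in the redacted text.
    """
    total = 0
    missed = 0
    for text, secrets in labeled_samples:
        cleaned = redactor(text)
        for secret in secrets:
            total += 1
            if secret in cleaned:
                missed += 1
    return missed / total if total else 0.0


def passes(rate, threshold=0.005):
    """True when the miss rate is below the threshold (0.5% by default)."""
    return rate < threshold
```

Note that a rate this low only means something with a large test suite: with fewer than a couple of hundred labeled values, a single miss already pushes the rate past 0.5%.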