Tag: encoder-decoder transformer

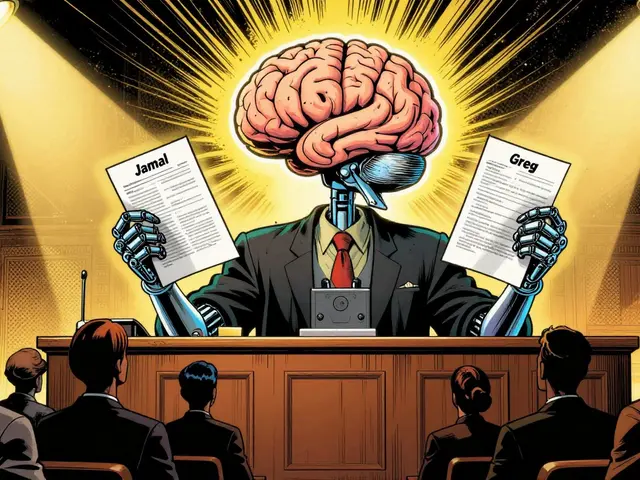

Encoder-Decoder vs Decoder-Only Transformers: Which Architecture Powers Today’s Large Language Models?

Tamara Weed, Jan 25, 2026

Decoder-only transformers dominate modern LLMs thanks to their speed and scalability, but encoder-decoder models still lead in precision tasks such as translation and summarization. Learn which architecture fits your use case in 2026.
