Think about how much video content is created every day: hours of customer support calls, surveillance footage, social media clips, training videos, product demos. Now imagine watching, labeling, summarizing, and understanding all of that content by hand. It’s impossible. But with generative AI, it’s not just possible; it’s happening right now. Companies are using AI to automatically generate captions, write summaries, and break down what’s happening in every frame of a video. This isn’t science fiction. It’s real, it’s here, and it’s changing how businesses use video.

How Video Understanding AI Works

Generative AI for video doesn’t just look at pictures. It watches motion. It listens to sound. It connects what it sees with what it hears, and then turns that into words. This is called multimodal processing. The system takes a video file (say, an MP4 or FLV) and breaks it down into frames, audio tracks, and timing markers. Each frame gets analyzed for objects, people, actions, and context. The audio is transcribed and matched to the visuals. Then a multimodal model, like Google’s Gemini or OpenAI’s Sora, uses all this data to generate a caption, a summary, or even a detailed scene description.

For example, if you upload a video of someone opening a box, handing a product to a customer, and saying, "This is our new warranty plan," the AI doesn’t just say "person opens box." It says: "A customer service representative opens a product box, removes a device, and explains the extended warranty terms to a customer." That’s not guesswork. That’s precision.

Modern models like Google’s Gemini 2.5 use a smarter way to handle video. Instead of processing every single pixel in every frame, they use tokenization, which works a bit like summarizing each frame into key data points. Gemini 3 cuts this down from 258 tokens per frame to just 70. That means faster processing, lower costs, and less strain on servers. A 10-second video used to take 25 seconds to analyze. Now it takes under 4 seconds.
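To make the token arithmetic concrete, here is a minimal sketch of how the per-frame figures above translate into a per-clip token budget. The 1 frame-per-second sampling rate and the `video_tokens` helper are assumptions for illustration, not something the article or Google specifies here.

```python
def video_tokens(duration_s: float, fps: float, tokens_per_frame: int) -> int:
    """Estimate visual tokens for a clip: sampled frames times tokens per frame."""
    frames = int(duration_s * fps)
    return frames * tokens_per_frame


# A 10-second clip sampled at 1 frame per second (assumed sampling rate).
before = video_tokens(10, 1, 258)  # older per-frame cost quoted above
after = video_tokens(10, 1, 70)    # newer per-frame cost quoted above
reduction = 1 - after / before

print(f"before={before} tokens, after={after} tokens, reduction={reduction:.0%}")
```

Under these assumptions the visual token count falls by roughly three quarters, which is where the cost savings would come from.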

Captioning: More Than Just Transcripts

Video captioning isn’t just about turning speech into text. That’s what older tools did. Generative AI goes further. It describes what’s happening even when no one is speaking. If a dog runs into frame, a car skids on ice, or a screen flashes a warning symbol, the AI captures that. This matters for accessibility, searchability, and content moderation.

Netflix used this to cut metadata creation time by 92%. Before, teams of editors watched every video to tag scenes, moods, objects, and actions. Now, AI does it in minutes. The result? Better recommendations, faster indexing, and more accurate search results. A user searching for "kitchen fight scene" or "dog jumping over fence" can find exactly that, not just because someone typed those words, but because the AI saw it.

But it’s not perfect. User u/DataEngineer2026 on Reddit reported 87.3% accuracy when analyzing 12,000 hours of customer service videos, but the system struggled with regional accents. In one case, "I want to return this" was transcribed as "I want to reinvest this." That’s the kind of error that can cost companies money if not caught.
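One common way to quantify transcription errors like this is word error rate (WER): the word-level edit distance between the reference and the transcript, divided by the number of reference words. The sketch below is a generic illustration, not the metric the Reddit user reported; `word_error_rate` is a hypothetical helper name.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    # prev[j] holds the edit distance between the reference words seen so far
    # (before the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # reference word missing from the transcript
                curr[j - 1] + 1,     # extra word in the transcript
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref) if ref else 0.0


wer = word_error_rate("I want to return this", "I want to reinvest this")
print(f"WER = {wer:.0%}")
```

Here a single substituted word out of five gives a WER of 20%, even though the meaning of the sentence changes completely. That is why a headline accuracy number alone does not show how costly the errors are.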

Summaries: Turning Hours Into Paragraphs

Imagine a 45-minute training video. Instead of watching it all, you get a 120-word summary: "The video covers onboarding procedures for new hires, including system access setup, ID badge issuance, and safety protocol review. Key steps are demonstrated with live examples. The final 8 minutes address common troubleshooting issues." That’s what generative AI can do.

Google’s Vertex AI lets you ask questions like: "What are the main actions in this video?" or "Summarize the customer’s complaint." The AI doesn’t just repeat phrases; it understands context. If a video shows a person frowning, shaking their head, and then typing "I’m not happy" in chat, the AI links those signals together. It doesn’t just say "person typed complaint." It says: "The customer expressed dissatisfaction after experiencing a delayed delivery."

Companies in healthcare, legal, and logistics are using this to auto-summarize interviews, inspections, and incident reports. One logistics firm reduced document creation time by 70% by using AI to turn surveillance footage of warehouse accidents into official incident reports.

Scene Analysis: Seeing What Humans Miss

Scene analysis is where the AI really shines. It doesn’t just describe what’s in the frame; it figures out what’s happening between frames. Is that person moving toward the exit because they’re leaving, or because they’re confused? Is the car slowing down because of traffic, or because the driver is distracted?

Google’s models now track motion patterns over time. If a person walks into a room, picks up a tool, uses it for 3 seconds, puts it down, and walks out, the AI can label that as "tool usage," not just "person walking." This is huge for security, manufacturing, and retail analytics.

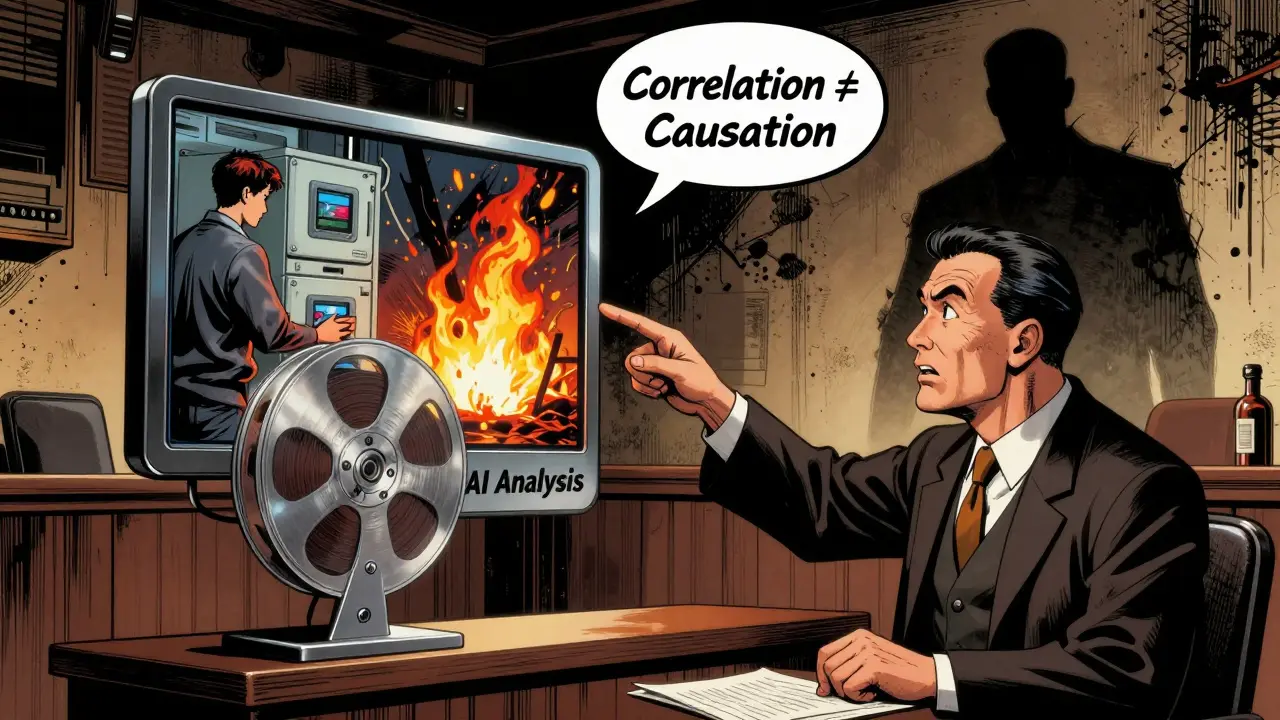

But there’s a catch. Professor Michael Chen from Stanford warns that current models confuse correlation with causation. If a video shows a person turning off a machine right before a fire starts, the AI might say, "Turning off the machine caused the fire." But that’s wrong. The machine was already overheating. The AI doesn’t understand physics or engineering; it just sees sequences. That’s why these systems still make dangerous mistakes in medical or legal settings.

What You Can Do With It Today

If you’re a developer, here’s how to get started:

- Set up a Google Cloud project and enable the Vertex AI API.

- Upload your video to Google Cloud Storage (gs://your-bucket/video.mp4).

- Use the Python GenAI client (version 2.1.4) to send the video with a prompt like: "Describe the scene, actions, and spoken words."

- For longer videos, split them into 20-second chunks. Google’s standard limit is 20 seconds per request.

- Use the timestamp output to link events to specific moments in the video.
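The steps above can be sketched roughly as follows. This is a minimal illustration, not a definitive implementation: `plan_chunks` and `describe_video` are hypothetical helper names, the `us-central1` location and the `gemini-2.5-flash` model ID are assumptions, and the call pattern follows the `google-genai` Python SDK as I understand it, so check it against the SDK version you install.

```python
from typing import List, Tuple


def plan_chunks(duration_s: float, chunk_s: float = 20.0) -> List[Tuple[float, float]]:
    """Split [0, duration_s) into consecutive (start, end) windows of at most chunk_s seconds."""
    if duration_s <= 0 or chunk_s <= 0:
        return []
    chunks = []
    start = 0.0
    while start < duration_s:
        end = min(start + chunk_s, duration_s)
        chunks.append((start, end))
        start = end
    return chunks


def describe_video(gcs_uri: str, project: str, location: str = "us-central1") -> str:
    """Send one video stored in Cloud Storage to a Gemini model on Vertex AI."""
    # Imported lazily so plan_chunks can be used without the SDK installed.
    from google import genai
    from google.genai import types

    client = genai.Client(vertexai=True, project=project, location=location)
    response = client.models.generate_content(
        model="gemini-2.5-flash",  # assumed model ID
        contents=[
            types.Part.from_uri(file_uri=gcs_uri, mime_type="video/mp4"),
            "Describe the scene, actions, and spoken words.",
        ],
    )
    return response.text or ""


# Time windows for a 65-second video, using the 20-second limit quoted above.
print(plan_chunks(65))
```

`plan_chunks` only computes the time windows; cutting the file itself (for example with a video tool of your choice) and uploading each piece is left out. Keeping each window's start offset lets you map timestamps in a chunk's output back to the original video.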

Most teams need about 17 hours of training to get comfortable with the API. The biggest hurdle? Token usage. High-motion videos (sports, action scenes, dancing) consume far more tokens. That means higher costs. The fix? Lower the frame rate. Instead of 30fps, use 10fps. You lose some detail, but save about 60% on processing costs.
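A quick back-of-the-envelope check of the frame-rate trade-off: going from 30fps to 10fps drops two thirds of the frames, and the saving on the total bill is smaller when part of the cost does not scale with frame rate. The sketch below assumes cost is proportional to tokens; the `audio_tokens_per_s` overhead is a hypothetical parameter added for illustration, and the 70 tokens per frame is the figure quoted earlier in the article.

```python
def tokens_per_second(fps: float, tokens_per_frame: int, audio_tokens_per_s: float) -> float:
    """Tokens consumed per second of video: visual tokens plus a fixed audio overhead."""
    return fps * tokens_per_frame + audio_tokens_per_s


def savings(old_fps: float, new_fps: float,
            tokens_per_frame: int = 70, audio_tokens_per_s: float = 0.0) -> float:
    """Fraction of tokens saved by lowering the frame rate."""
    old = tokens_per_second(old_fps, tokens_per_frame, audio_tokens_per_s)
    new = tokens_per_second(new_fps, tokens_per_frame, audio_tokens_per_s)
    return 1 - new / old


print(f"frames only:         {savings(30, 10):.1%}")
print(f"with audio overhead: {savings(30, 10, audio_tokens_per_s=32):.1%}")
```

Any fixed per-second or per-request overhead pulls the real saving below the two-thirds frame reduction, which may be why a figure like 60% is quoted rather than 67%.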

Limitations and Risks

Let’s be honest: this tech isn’t flawless.

- **Speed vs. Accuracy**: Gemini 2.5-flash processes 1080p video at 3.2 seconds per second of video. OpenAI’s Sora 2 is faster at 2.8 seconds per second of video, but uses 40% more power.

- **Length Limits**: Gemini handles up to 20 seconds per request. Sora 2 goes to 60 seconds. For longer videos, you must chop them up.

- **Sound Problems**: Non-speech sounds (doors slamming, glass breaking, background music) are misidentified 22-37% of the time.

- **Cultural Bias**: Kling 2.6 works great for Mandarin videos (89.7% accuracy) but drops to 72.3% for English. That’s a problem for global teams.

- **Regulation**: GDPR now requires explicit consent to analyze facial expressions or voice patterns in EU videos. 63% of current systems aren’t compliant.

And don’t forget: if your video violates safety policies, for example by showing violence or hate symbols, the AI will refuse to analyze it. No warning. No explanation. Just blocked.

Who’s Leading the Pack?

As of January 2026, the market looks like this:

| Model | Max Video Length | Processing Speed (sec per sec of video) | Token Efficiency | Best For |
|---|---|---|---|---|
| Google Gemini 2.5-flash | 20 seconds | 3.2 sec/sec | 70 tokens/frame | Cost-efficient enterprise use |
| OpenAI Sora 2 | 60 seconds | 2.8 sec/sec | 110 tokens/frame | Long-form analysis, physics simulation |
| Kling 2.6 | 30 seconds | 4.1 sec/sec | 95 tokens/frame | Chinese-language content |
| AWS Rekognition Video | 120 seconds | 5.0 sec/sec | 130 tokens/frame | Basic object detection |
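To compare the rows for a specific workload, the length limit and the processing speed can be combined into a rough estimate of request count and total processing time. The sketch below copies the table's figures as given and assumes chunks are processed one after another with no per-request overhead; `estimate` is a hypothetical helper.

```python
import math

# (max seconds per request, processing seconds per second of video), copied from the table.
MODELS = {
    "Google Gemini 2.5-flash": (20, 3.2),
    "OpenAI Sora 2": (60, 2.8),
    "Kling 2.6": (30, 4.1),
    "AWS Rekognition Video": (120, 5.0),
}


def estimate(duration_s: float, model: str) -> tuple:
    """Return (number of requests, total processing seconds) for one video."""
    max_len, speed = MODELS[model]
    requests = math.ceil(duration_s / max_len)
    processing_s = duration_s * speed
    return requests, processing_s


# Example: a 5-minute (300-second) video on each model.
for name in MODELS:
    requests, seconds = estimate(300, name)
    print(f"{name}: {requests} requests, about {seconds / 60:.1f} min of processing")
```

Running chunks in parallel would shorten the wall-clock time but not the number of requests, so the length limit still drives how much splitting and stitching your pipeline has to do.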

Google holds 43.7% of the enterprise market. OpenAI is second at 28.3%. But Runway ML is growing fast in creative industries, where filmmakers use it to auto-tag shots for editing. The key difference? Google’s models are built for scale. OpenAI’s are built for depth. Pick based on your needs.

What’s Next?

By September 2026, Google plans to release real-time video analysis at 30fps. That means live feeds (security cameras, Zoom calls, live streams) could be analyzed on the fly. Imagine a customer service chatbot that sees you’re frustrated before you type a word.

By Q4 2026, accuracy for standard video tasks is expected to hit 95%+. But don’t expect perfection. The biggest challenge isn’t technical; it’s ethical. Who owns the analysis? Who decides what’s "normal" behavior? Can an AI mislabel someone as suspicious just because they moved oddly?

For now, the tech is powerful, fast, and improving daily. But it’s still a tool, not a judge. Use it wisely.

Can generative AI analyze videos in real time?

Not yet at scale. Current systems like Google’s Gemini 2.5-flash process video slower than real time (3.2 seconds of processing per second of video), meaning a 10-second clip takes about 32 seconds. Real-time analysis (processing as the video plays) is coming by September 2026, when Google plans to launch 30fps live analysis. For now, real-time use is limited to short clips or pre-recorded footage with optimized settings.

What video formats are supported?

Most platforms support MP4, WMV, MPEG-PS, and FLV. Google’s Vertex AI accepts these formats with file sizes up to 2GB per request. For best results, use H.264 encoding at 1080p resolution. Avoid AVI or MOV files that use non-standard compression; these often fail to process.

How accurate is AI-generated video captioning?

For standard videos with clear speech and slow movement, accuracy is 85-92%. That means roughly 8 to 15 errors per 100 captioned words or events. Accuracy drops to 65-70% for fast-action scenes, multiple speakers, or noisy backgrounds. Speech recognition accuracy is 87% for clear English, but falls to 72% for accents or background music. For critical uses like legal or medical videos, human review is still required.

Do I need to code to use video understanding AI?

Not necessarily, but it helps. You can use pre-built tools in Google Cloud Console or AWS to upload and analyze videos with a few clicks. But for custom workflows, like tagging thousands of videos or integrating with your own database, you’ll need Python and REST API knowledge. Most enterprise users spend 17+ hours learning the system before deploying it.

Is video AI analysis legal under GDPR?

It depends. If the AI analyzes facial expressions, voice patterns, or body movements, it’s considered biometric data under GDPR. That requires explicit consent from the people in the video. Without consent, processing videos of people in the EU is illegal. Many companies are still not compliant: 63% of current systems violate this rule as of January 2026. Always check local laws before deploying.

Final Thoughts

Video understanding with generative AI isn’t about replacing humans. It’s about giving them superpowers. A customer service team can now review 10,000 videos in a day instead of 10. A filmmaker can tag 500 clips in minutes. A factory manager can spot safety violations before they cause harm. The tech is still young. It makes mistakes. It’s expensive. But it’s getting better, fast. If you’re not using it yet, you’re falling behind. Not because everyone needs it. But because the ones who do will outpace the rest.