Large language models don’t know when they’re wrong. That’s not a bug-it’s a feature of how they work. They’re trained to predict the next word, not to understand truth. And when they’re pushed beyond what they’ve seen before, they don’t pause. They don’t say, ‘I’m not sure.’ They just keep going-confidently, fluently, and often completely wrong.

Why LLMs Lie Without Knowing It

Think of a large language model like a supercharged autocomplete. It’s seen billions of sentences. It knows how words usually go together. But it doesn’t have a database of facts. It doesn’t check sources. It doesn’t remember yesterday’s news. If you ask it who won the 2024 U.S. presidential election, and its training data stopped in 2023, it will still give you an answer. Probably a made-up one. And it will sound convincing. Google’s 2023 research found that when LLMs answer questions outside their training window, they’re wrong 85-90% of the time-but still rate their confidence at 85% or higher. That’s not just inaccurate. It’s dangerous. In healthcare, finance, or legal settings, a confident lie can cost lives or millions of dollars. The problem isn’t just the answer. It’s the lack of warning. Users assume the model knows. And because the output flows so smoothly, we don’t question it. This is called the illusion of competence. The model doesn’t understand its own limits. And we don’t know how to ask for honesty.What Are Knowledge Boundaries?

Knowledge boundaries are the edges of what a model actually “knows.” There are two kinds:- Parametric knowledge boundaries: The facts locked into the model’s weights during training. If it wasn’t in the data, it’s not in the model.

- Outward knowledge boundaries: Real-world facts that exist beyond training data-new laws, recent events, niche expertise, or evolving terminology.

How Do We Detect When LLMs Are Out of Their Depth?

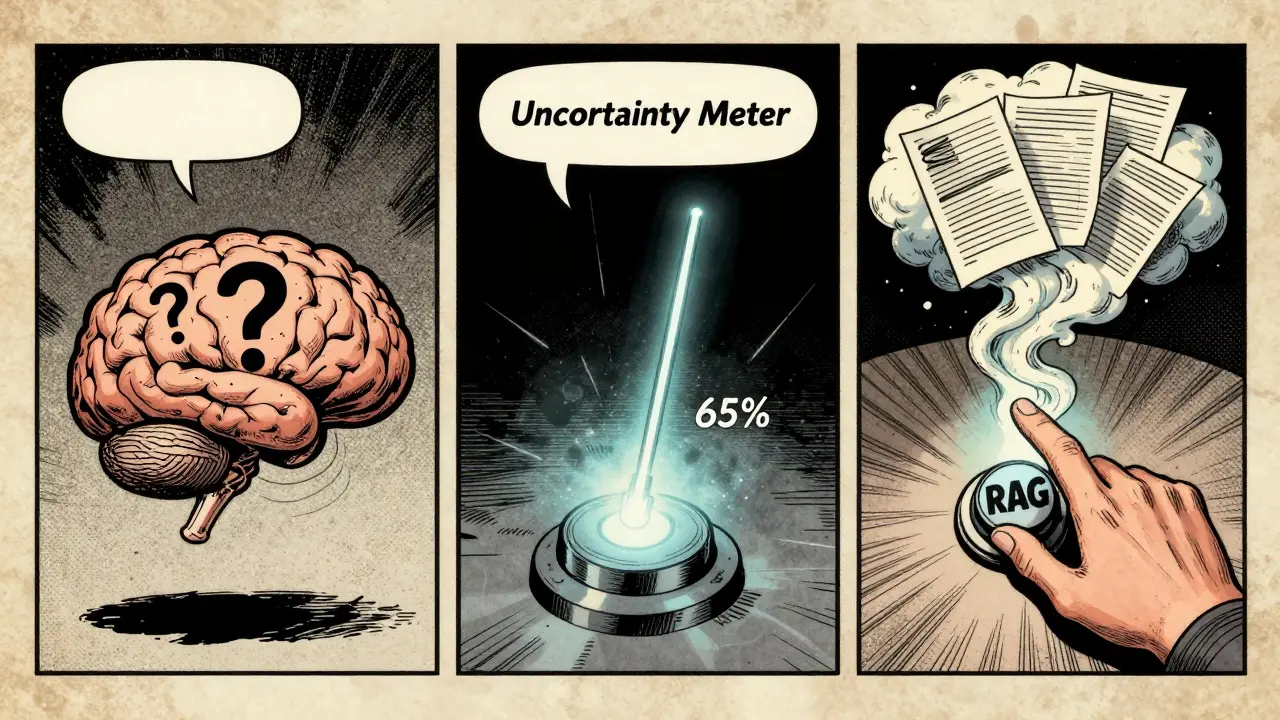

Researchers have built tools to catch these moments before the model speaks. Three main methods are in use:- Uncertainty Estimation (UE): Measures how unsure the model is about its own prediction. High uncertainty = likely outside its knowledge.

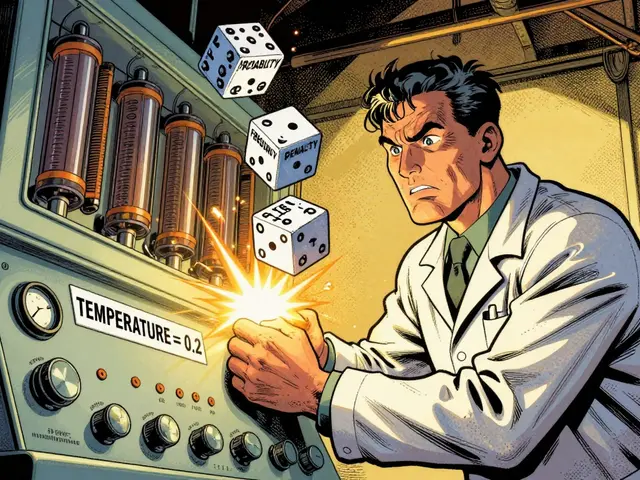

- Confidence Calibration: Adjusts the model’s confidence scores to match real accuracy. If it says it’s 90% sure, but only gets it right 60% of the time, it gets recalibrated.

- Internal State Probing: Looks inside the model’s layers during processing to spot signs of confusion-like inconsistent activation patterns across layers.

What Are Companies Doing About It?

Commercial LLMs are starting to build in awareness-slowly. Anthropic’s Claude 3 detects when it’s out of its depth and refuses to answer 18.3% of those queries. When it does respond, it’s right 92.6% of the time. That’s not perfect, but it’s a big improvement over models that never say no. Meta’s Llama 3 triggers external knowledge retrieval (RAG) for 23.8% of queries it thinks are uncertain. That means it pulls in fresh data from a database instead of guessing. It’s not perfect-85.4% accuracy-but it’s better than making things up. Google’s new BoundaryGuard system for Gemini 1.5 cuts hallucinations by nearly 40% by using multi-layered uncertainty signals. Microsoft’s latest research uses meta-prompts-special instructions embedded in the query-to force the model to assess its own knowledge before answering. That boosted detection accuracy to 91.3%. But here’s the catch: none of these systems are universal. They work well on general knowledge. They fall apart in medicine, law, or finance. A healthcare developer on Reddit reported that uncertainty systems flagged 30% of valid clinical questions as “out of bounds.” That’s not safety-it’s a blocker. If your AI refuses to answer real questions because it’s too cautious, doctors won’t use it.How to Make LLMs Say “I Don’t Know” in a Way Users Trust

Detecting uncertainty is only half the battle. The other half is communicating it. A model might know it’s unsure. But if it says, “I’m not certain,” users still assume it’s guessing. If it says, “Based on current data, I cannot confirm,” they might think it’s being evasive. Nature Machine Intelligence (2024) found that when models used language aligned with their actual confidence level, human trust improved dramatically. Instead of saying “I don’t know,” a model trained to say “I’m 65% confident this is correct, but here’s what I’ve seen” reduced the gap between human perception and machine accuracy from 34.7% down to 18.2%. Best practices for communication:- Use graded language: “I’m fairly confident,” “I’m uncertain,” “I have no data on this.”

- Explain why: “My training data ends in 2023, so I can’t confirm events after that.”

- Offer alternatives: “I can’t answer that directly, but here’s a related fact.”

- Never fake certainty.

What’s Working in the Real World?

Enterprise users are adopting these tools-but slowly, and with pain. A Google Cloud engineer added Internal Confidence to their customer service chatbot. Hallucinations dropped by 40%. Cost savings from skipping unnecessary RAG calls saved $22,000/month in cloud compute. But another user on GitHub complained that their system started rejecting valid queries after a model update. The uncertainty thresholds had drifted. They called it “calibration debt”-a term now common in AI safety circles. When models are updated, their knowledge boundaries shift. But the detection system doesn’t adapt. So it starts missing the new edges or overreacting to old ones. Most companies don’t have teams to retrain these systems monthly. That’s why 82% of current implementations lack continuous calibration, according to NeurIPS 2024. The most successful deployments use layered thresholds:- Low uncertainty: Answer normally.

- Medium uncertainty: Trigger chain-of-thought reasoning. Ask the model to think step by step.

- High uncertainty: Activate RAG or refuse to answer.

9 Comments

Nathaniel Petrovick

Man, this is so real. I had an AI tell me the moon landing was faked back in '22 and sound like it knew what it was talking about. No hesitation, no caveats. Just pure confident nonsense. We're outsourcing our critical thinking to a fancy autocomplete and acting surprised when it fails.

Honey Jonson

i just use ai for brainstorming now and double check everything. like if it says something weird i go ‘ok but what does google say?’ lol. its not perfect but its better than trusting a robot that thinks it’s a philosopher

Sally McElroy

It’s not just the models. It’s us. We want answers. We don’t want to hear ‘I don’t know.’ We treat uncertainty like weakness. So the models learn to lie. We trained them to be confident, not honest. And now we’re shocked when they behave exactly as we conditioned them to.

Destiny Brumbaugh

Why are we letting some Silicon Valley tech bros decide what AI should say? If this thing can’t even get the 2024 election right, why are we letting it give medical advice? This isn’t innovation. This is reckless. And the EU is right to step in. We need regulation, not more magic tricks

Sara Escanciano

Anyone who still trusts these models after all this is either naive or getting paid to. They don’t understand truth. They don’t understand context. They don’t understand consequences. And yet we let them write legal briefs, diagnose patients, and advise investors. This isn’t progress. It’s a slow-motion disaster.

Elmer Burgos

Yeah I get the fear but I’ve seen these systems help a lot too. Like when I asked about a rare plant disease and it said ‘I’m not sure but here’s what’s similar’ and I found the real answer. It’s about using it right. Not banning it. Not blind trust. Just being smart

Jeroen Post

They’re not lying. They’re being programmed to lie. This is all part of the Great AI Illusion. The same people who built the algorithms that got us into the 2008 crash are now building algorithms that pretend to know things they don’t. It’s not an accident. It’s the business model. Confidence sells. Truth doesn’t. They want you to believe the machine is omniscient so you’ll pay for it. Wake up

Antwan Holder

Think about it. We’re giving a statistical ghost the keys to our future. It doesn’t feel. It doesn’t remember. It doesn’t care. It just strings together words like a broken typewriter that’s read every book ever written. And we call it intelligence. We’re not building AI. We’re building a mirror that reflects our own arrogance. And now we’re shocked when it shows us our faces covered in lies. This isn’t the future. This is the end of wisdom.

Jason Townsend

Internal Confidence sounds like a buzzword. But here’s the real problem: they’re still using training data from 2023 to answer questions about 2025. That’s not a glitch. That’s corporate negligence. And the fact that companies won’t retrain monthly? That’s greed. They don’t want to pay for updates. They want you to believe the model is magic. It’s not. It’s a lazy, outdated calculator with a fancy voice.