Imagine teaching a child to read by handing them every book, article, and webpage ever written - no instructions, no answers, just text. They don’t know what’s right or wrong. But over time, they start noticing patterns: after the word "the," it’s usually a noun. After "I am," it’s often an adjective. After "2 + 2 =," it’s almost always "4." That’s how large language models (LLMs) learn. Not by being told the answers, but by guessing the next word - billions of times - across trillions of words scraped from the internet.

The Core Idea: Predicting the Next Word

Large language models don’t start with knowledge. They start with randomness. A model like Llama 3 or GPT-4 begins as a blank slate - a network of billions of interconnected numbers, randomly initialized. Its only job? Look at a sequence of words and guess what comes next.That’s self-supervised learning in its purest form. No human labels. No hand-crafted rules. The model creates its own training data from the text it’s given. If you show it: "The sky is ___," it tries to predict "blue," "cloudy," or even "giraffe." The correct answer is already in the text - the word that actually followed in the original source. The model gets feedback not from a teacher, but from the data itself. Every time it guesses wrong, its internal parameters shift slightly, nudging it closer to the pattern.

This isn’t magic. It’s statistics on steroids. The model learns that "king" is often followed by "man," and "queen" by "woman." It learns that "Paris" is frequently tied to "France," and "Einstein" to "physics." Over time, these patterns stack. It doesn’t understand meaning like a human. But it learns structure - grammar, logic, context - so well that it can write essays, answer questions, and even code.

How the Training Process Actually Works

Training an LLM isn’t one step. It’s three phases - and the first one, pretraining, is where nearly all the learning happens.Phase 1: Pretraining - This is where the model drinks from the firehose of the internet. Training datasets like those used for Llama 3 include over 15 trillion tokens - that’s words, punctuation, symbols - pulled from books, websites, forums, code repositories, and more. Before training even begins, engineers clean the data: removing duplicates, filtering out spam, scrubbing personally identifiable info. Then, they feed the text into the model in chunks.

The model doesn’t read like you or I. It sees text as numbers. Each word becomes a vector - a list of hundreds of numbers that represent its meaning in context. The Transformer architecture, introduced by Google in 2017, lets the model process all these vectors at once. Unlike older models that read word by word, Transformers look at the whole sentence in parallel. That’s why training can happen on thousands of GPUs simultaneously.

During pretraining, the model constantly practices masked language modeling. A sentence like "The cat sat on the ___" might have "mat" hidden. The model guesses the missing word. It gets scored on how close it was. After trillions of these tiny predictions, it builds a statistical map of language. By the end of pretraining, the model can predict the next word with 60-75% accuracy on unseen text. That might sound low, but it’s enough to generate coherent, fluent output.

Phase 2: Fine-tuning - Pretraining gives the model general language skills. But it can’t follow instructions yet. That’s where supervised fine-tuning (SFT) comes in. Engineers provide thousands of examples: "Write a poem about the ocean," and the correct response. The model adjusts again - this time to match human-style responses. This phase is small compared to pretraining, but critical. Without it, the model sounds like a confused encyclopedia.

Phase 3: Reinforcement Learning from Human Feedback (RLHF) - After fine-tuning, the model still makes odd, biased, or unsafe responses. So humans rank outputs: "Which answer is more helpful?" The model learns from those rankings, pushing it toward responses that feel natural, truthful, and aligned with human intent. This is how models like Claude and GPT-4 become so polished.

Scale: Why Internet-Size Data Matters

The real breakthrough isn’t the algorithm. It’s the scale. In 2020, GPT-3 trained on 300 billion tokens. By 2024, Llama 3 used 15 trillion - 50 times more. Why does that matter?More data doesn’t just mean more facts. It means more patterns. A model trained on a small dataset might know that "dogs bark." But one trained on internet-scale data learns that "dogs bark at mail carriers," "dogs chase squirrels," "dogs are loyal," "dogs sleep on couches," and even "dogs are sometimes adopted from shelters." It learns context, nuance, cultural references. It doesn’t memorize every sentence - it learns the underlying structure.

And it’s not just quantity. Diversity matters. Training on Reddit, Wikipedia, scientific papers, and Twitter gives the model a broad, messy, realistic view of language. That’s why LLMs can switch between writing a legal brief and a joke about cats. They’ve seen it all.

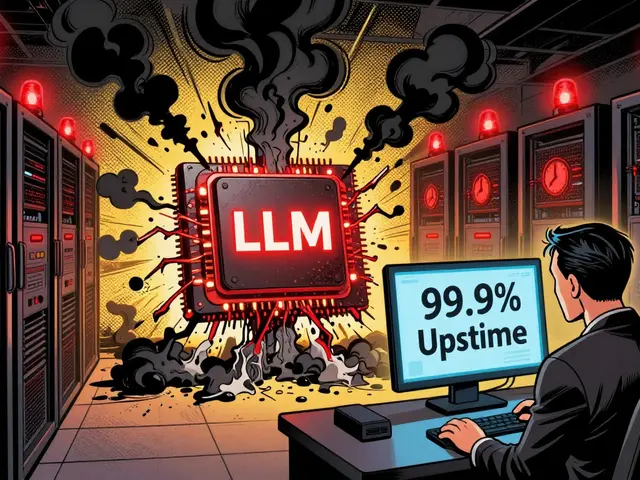

Costs and Consequences

But this scale comes at a price. Training GPT-3 used an estimated 3,640 petaflop/s-days of computing power. Llama 3? Over 15,000. That’s equivalent to running a supercomputer for months. The carbon footprint? One study estimated GPT-3’s training emitted 552 metric tons of CO2 - roughly the same as 120 gasoline-powered cars driven for a year.And then there’s the data itself. LLMs are trained on content scraped from the web - including copyrighted books, private emails, and personal social media posts. In 2023, researchers found that GPT-2 could reproduce verbatim snippets of training data 0.1% of the time. That’s rare - but when it happens, it’s legally and ethically messy.

Worse, the internet is full of bias, misinformation, and toxicity. A 2023 study from the Allen Institute found that 42% of LLM responses on medical questions contained hallucinations - made-up facts that sounded plausible. When trained on unfiltered data, models absorb and amplify societal flaws. Dr. Emily Bender called this the "stochastic parrot" problem: the model repeats patterns without understanding truth.

What Works - and What Doesn’t

Self-supervised learning is the backbone of every major LLM today. GPT-4, Claude 3, Gemini, Llama 3 - they all use it. Why? Because it’s the only way to build broad, flexible language understanding without manually labeling billions of examples.Compare that to supervised learning, where every input needs a human-labeled output. That’s how spam filters work - and it’s impossible at internet scale. Unsupervised learning, which just finds clusters in data, can’t capture the structure of language nearly as well.

But self-supervised learning alone isn’t enough. It gives you a smart encyclopedia. Not a helpful assistant. That’s why companies combine it with fine-tuning and human feedback. The best systems use self-supervised pretraining as a foundation - then layer on precision.

Enterprises are already feeling the impact. A 2024 O’Reilly survey found that 68% of companies using LLMs fine-tune them on their own data - legal documents, customer service logs, medical records. One team reported 22% better accuracy on medical queries after fine-tuning Llama 3. But they also said the initial training cost was too high for small teams. That’s why most users don’t train models from scratch. They use APIs - OpenAI’s, Anthropic’s, or open-source models like Llama 3 downloaded from Hugging Face.

The Future: Beyond Text

What’s next? The next generation of models won’t just read text. They’ll see images, hear audio, and understand video - all using the same self-supervised approach. Google’s Gemini 1.5 Pro already handles 1 million tokens of context - that’s over 700 pages of text. OpenAI’s rumored GPT-5 could have 1.2 trillion parameters. Meta plans to release Llama 4 in 2026, likely even larger and more multilingual.But scaling alone won’t fix the big problems: factual accuracy, bias, and copyright. Researchers are exploring new methods - like filtering training data more carefully, using synthetic data, or building models that admit when they don’t know something. The EU’s AI Act, effective in 2026, will require companies to disclose training data sources for large models. The U.S. Copyright Office is already investigating whether training on copyrighted material counts as infringement.

One thing is clear: self-supervised learning at internet scale isn’t going away. It’s too powerful. The question isn’t whether we’ll keep using it - but how we’ll make it safer, fairer, and more efficient. The models are learning. Now it’s up to us to guide what they learn.

8 Comments

Jen Deschambeault

This is the most beautiful thing I’ve ever read about AI. It’s like watching a baby learn to speak by overhearing a thousand conversations at once. No lectures. No flashcards. Just pure pattern absorption. I get chills thinking about it.

Kayla Ellsworth

So let me get this straight - we trained a machine to mimic human language by feeding it the entire internet’s dumpster fire, and now we’re surprised it spits out nonsense? Groundbreaking.

Soham Dhruv

man i just read this and i’m like wow. the way it learns by guessing next words is wild. like your brain does it when you’re reading and you finish someone’s sentence before they say it. but this thing does it 15 trillion times. no wonder it sounds so human sometimes. also i typoed like 3 times just typing this lol

Bob Buthune

I’ve been thinking about this all day. It’s not just learning language - it’s absorbing the collective trauma of the internet. Every toxic comment, every conspiracy theory, every badly written fanfic - it’s all in there. And now it’s talking back to us like it’s our therapist. I don’t know whether to be impressed or terrified. I cried when I realized it learned sarcasm from Reddit. I’m not okay.

Jane San Miguel

The notion that self-supervised learning constitutes "understanding" is a profound epistemological fallacy. Language models are statistical artifacts, not cognitive agents. Their fluency is an illusion crafted by high-dimensional probability distributions over token sequences. To anthropomorphize their outputs is to confuse syntactic elegance with semantic substance - a mistake as antiquated as believing automata possessed souls in the 18th century.

Kasey Drymalla

they’re training these models on everything including your private texts and medical records. this is all a cover for the government to build a mind control AI. you think the "next word" thing is random? it’s programmed to make you think a certain way. watch your feed tomorrow. you’ll see what i mean

Dave Sumner Smith

15 trillion tokens? that’s not training that’s data theft. you think big tech didn’t scrape your grandma’s blog about her cat? they’re feeding the model your life. and now it’s writing your emails. who owns the words it learns from? nobody. that’s why it’s so damn creepy. it’s a ghost made of your digital corpse

Cait Sporleder

The elegance of this paradigm lies not merely in its scalability, but in its ontological humility: by relinquishing explicit instruction, the model surrenders to the emergent structure inherent in human communication. It does not seek truth - it discovers patterns. And yet, in its statistical mirroring, it inadvertently reconstructs the cultural, linguistic, and ideological sedimentation of our collective discourse. This is not intelligence as we define it - but it is a mirror, cracked and shimmering, reflecting the chaos and beauty of our linguistic ecosystem. One must ask: if the model learns bias from data, is the bias not already ours? And if it learns to lie with conviction, is it not merely echoing our own epistemic failures?