Most people think using an LLM means typing one prompt and getting a perfect answer. That worked in 2022. Today, if you’re building real systems such as customer support bots, compliance pipelines, or research assistants, you need more than one shot. You need structure. That’s where prompt chaining and agentic planning come in. They’re not just buzzwords. They’re two very different ways to make LLMs do complex work, and picking the wrong one can cost you time, money, and compliance headaches.

What Prompt Chaining Actually Does

Prompt chaining is like a factory assembly line for AI. You break a big task into small, fixed steps. Each step feeds its output directly into the next. No guessing. No backtracking. Just sequence.

Example: You need to turn a raw customer email into a polished support response, then translate it into Spanish. Step one: an LLM reads the email and writes a draft reply. Step two: another LLM checks that draft against your brand tone guide. Step three: a third LLM translates the approved version. Each step uses only the output from the one before it. No memory. No context beyond what’s passed in.
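The three-step support-email example can be sketched as a plain loop. This is a minimal illustration, not any framework’s API. `fake_llm` is a hypothetical stand-in for a real model call, and the step names are made up for the example. What matters is the shape: a fixed list of steps, where each step sees only the previous step’s output.

```python
from typing import Callable, List

# Hypothetical stand-in for a real model call; it just wraps the text so the flow is visible.
def fake_llm(instruction: str, text: str) -> str:
    return f"{instruction}({text})"

Step = Callable[[str], str]  # one string in, one string out, no shared state

def draft_reply(email: str) -> str:
    return fake_llm("draft", email)

def check_tone(draft: str) -> str:
    return fake_llm("tone", draft)

def translate_es(approved: str) -> str:
    return fake_llm("es", approved)

def run_chain(steps: List[Step], user_input: str) -> str:
    """Feed each step's output directly into the next step, in a fixed order."""
    text = user_input
    for step in steps:
        text = step(text)
    return text

if __name__ == "__main__":
    print(run_chain([draft_reply, check_tone, translate_es], "My order is late"))
    # → es(tone(draft(My order is late)))
```

Swapping `fake_llm` for a real API client would not change `run_chain` at all. That is part of why chains are easy to test.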

This approach is simple, predictable, and cheap. According to AI Competence’s 2024 benchmarks, prompt chaining uses 30-40% fewer tokens than agentic systems. That means lower costs. For high-volume tasks like processing 10,000 support tickets a day, that adds up. UnitedHealth Group uses this exact method for HIPAA-compliant patient data handling. Every step is logged. Every output is checked. Auditors love it.
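The auditability claim comes down to recording every handoff. Below is a minimal sketch, assuming you control the chain runner yourself. `run_logged_chain` and `StepRecord` are hypothetical names, and the two steps stand in for LLM calls (a name redaction and a case normalization). Because the chain is linear, the trail is just an ordered list that a reviewer can replay.

```python
import json
from dataclasses import asdict, dataclass
from typing import Callable, List, Tuple

@dataclass
class StepRecord:
    step: str
    step_input: str
    step_output: str

def run_logged_chain(
    steps: List[Tuple[str, Callable[[str], str]]], user_input: str
) -> Tuple[str, List[StepRecord]]:
    """Run a fixed chain and keep every step's input and output for review."""
    trail: List[StepRecord] = []
    text = user_input
    for name, fn in steps:
        output = fn(text)
        trail.append(StepRecord(step=name, step_input=text, step_output=output))
        text = output
    return text, trail

# Stand-ins for LLM calls: redact a name, then normalize case.
STEPS = [
    ("redact", lambda t: t.replace("John Smith", "[NAME]")),
    ("normalize", str.upper),
]

if __name__ == "__main__":
    result, trail = run_logged_chain(STEPS, "John Smith reports chest pain")
    print(result)  # → [NAME] REPORTS CHEST PAIN
    print(json.dumps([asdict(r) for r in trail], indent=2))
```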

Developers who’ve tried it report big wins. One Reddit user, u/LLM_Engineer99, switched from an agentic system to prompt chaining for document processing. Errors dropped from 12% to 3.2%. Costs fell by 57%. Why? Because there’s no wasted computation. No loops. No retries. Just clean, linear execution.

What Agentic Planning Really Means

Agentic planning is different. Think of it as a smart assistant who doesn’t just follow orders: they think, adapt, and decide.

An agentic system doesn’t just run steps in order. It remembers past actions, uses tools (like search engines or databases), and changes its plan based on what it finds. If a step fails, it doesn’t just stop. It tries again, or it finds a new path. It’s stateful. It has memory. It reflects.
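Here is a rough sketch of that loop. A rule-based `toy_policy` takes the place of the LLM that would normally decide the next action, and `run_agent`, the tool names, and the simulated outage are all hypothetical. Unlike the chain, memory persists across iterations, and a failed tool call changes the plan instead of ending the run.

```python
from typing import Callable, Dict, List, Optional, Tuple

Action = Tuple[str, str]  # (tool name, argument)

def run_agent(
    goal: str,
    tools: Dict[str, Callable[[str], str]],
    choose_action: Callable[[str, List[dict]], Optional[Action]],
    max_steps: int = 10,
) -> List[dict]:
    """Stateful loop: decide, act with a tool, remember the outcome, repeat."""
    memory: List[dict] = []  # persists across steps, unlike a chain
    for _ in range(max_steps):
        action = choose_action(goal, memory)
        if action is None:  # the policy judges the goal as met
            break
        tool_name, arg = action
        try:
            result = tools[tool_name](arg)
            memory.append({"tool": tool_name, "ok": True, "result": result})
        except Exception as exc:  # a failure is recorded, not fatal
            memory.append({"tool": tool_name, "ok": False, "result": str(exc)})
    return memory

# Hypothetical tools: the primary search is down, the backup works.
def primary_search(query: str) -> str:
    raise TimeoutError("primary index unavailable")

def backup_search(query: str) -> str:
    return f"3 results for {query!r}"

TOOLS = {"primary_search": primary_search, "backup_search": backup_search}

# Rule-based stand-in for the LLM that would normally choose the next action.
def toy_policy(goal: str, memory: List[dict]) -> Optional[Action]:
    if not memory:
        return ("primary_search", goal)
    last = memory[-1]
    if not last["ok"] and last["tool"] == "primary_search":
        return ("backup_search", goal)  # re-plan after the failure
    return None

if __name__ == "__main__":
    for entry in run_agent("refund policy", TOOLS, toy_policy):
        print(entry)
```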

GitHub’s Copilot Workspace is a real-world example. When you ask it to fix a bug, it doesn’t just guess one file to edit. It analyzes the codebase, checks dependencies, identifies related functions, and decides how many files need updating. It might search documentation mid-task. It might backtrack if the first fix breaks something else. That’s agentic behavior.

These systems are powerful but expensive. They use 2.5 to 3.5 times more computational resources than chaining. Microsoft’s AutoGen team reports that agentic workflows require 15-25+ API calls per task, compared to 3-5 for chaining. That’s not just cost. It’s latency. It’s complexity.
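If you want to check call counts like these in your own stack, one approach is to wrap your model function in a counter. This is a sketch under stated assumptions. `CallMeter` and `fake_model` are hypothetical, and the whitespace word count is only a crude proxy for tokens; real tokenizers count differently. Notice how the prompt grows at each step as outputs are fed forward.

```python
import functools
from typing import Callable

class CallMeter:
    """Counts calls and a rough prompt-size estimate for a wrapped model function."""

    def __init__(self) -> None:
        self.calls = 0
        self.prompt_words = 0

    def wrap(self, fn: Callable[[str], str]) -> Callable[[str], str]:
        @functools.wraps(fn)
        def metered(prompt: str) -> str:
            self.calls += 1
            # Whitespace word count as a crude stand-in for real tokenization.
            self.prompt_words += len(prompt.split())
            return fn(prompt)
        return metered

def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an API call."""
    return prompt.upper()

if __name__ == "__main__":
    meter = CallMeter()
    llm = meter.wrap(fake_model)
    text = "refund request"
    for instruction in ("draft", "check tone", "translate"):
        text = llm(f"{instruction}: {text}")
    print(meter.calls, meter.prompt_words)  # → 3 14
```

Wrapping the model function used inside an agent loop the same way would show how quickly its call count climbs past a chain’s.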

And debugging? Hard. One Stack Overflow user in finance said: “I spent three days trying to figure out why my agent kept rewriting the same section of code. There was no clear trail.” That’s the trade-off. You get adaptability, but you lose visibility.

When to Use Each Pattern

You don’t choose based on what’s cooler. You choose based on what your task needs.

Use prompt chaining when:

- Your steps are known ahead of time

- You need auditability (legal, compliance, or internal review)

- You’re processing high volumes of similar inputs

- You’re working in regulated industries (healthcare, finance, government)

Use agentic planning when:

- The task changes based on input, so there’s no fixed path

- You need to search, compare, or synthesize unknown data

- You’re doing research, analysis, or creative problem-solving

- You can afford higher cost and complexity for better results

Take scientific research. A data scientist on Hacker News said agentic AI saved her 14 hours per paper analysis. Why? Because each paper is different. The agent adjusted its search strategy based on what it found. Chaining wouldn’t have worked; it’s too rigid.

But if you’re generating 500 product descriptions for an e-commerce site? Chaining wins. You know the format. You know the rules. You don’t need the AI to improvise. You need it to execute.

Cost, Time, and Complexity Trade-Offs

Let’s talk numbers, because this isn’t theoretical: it affects your budget.

For prompt chaining:

- Implementation time: 2-3 weeks for experienced devs

- Training needed: 20-30 hours

- Cost per task: ~$0.0012 (per document processed)

- Success rate in structured tasks: 27% higher than agentic

For agentic planning:

- Implementation time: 8-12 weeks

- Training needed: 120+ hours

- Cost per task: ~$0.0039 (3.25x more than chaining)

- Success rate in complex tasks: 42% higher than chaining
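Plugging these per-task figures into the 10,000-tickets-a-day volume mentioned earlier gives a feel for the gap. The inputs are the estimates quoted above, not measurements, and the function names are illustrative.

```python
# Per-task costs quoted above (USD); treat them as rough estimates.
CHAIN_COST = 0.0012
AGENT_COST = 0.0039

def daily_cost(cost_per_task: float, tasks_per_day: int) -> float:
    """Total spend per day, rounded to cents."""
    return round(cost_per_task * tasks_per_day, 2)

def annual_savings(tasks_per_day: int, days: int = 365) -> float:
    """How much chaining saves over agents across a year at a fixed volume."""
    per_day = daily_cost(AGENT_COST, tasks_per_day) - daily_cost(CHAIN_COST, tasks_per_day)
    return round(per_day * days, 2)

if __name__ == "__main__":
    volume = 10_000  # tickets per day
    print(daily_cost(CHAIN_COST, volume))     # → 12.0
    print(daily_cost(AGENT_COST, volume))     # → 39.0
    print(round(AGENT_COST / CHAIN_COST, 2))  # → 3.25
    print(annual_savings(volume))             # → 9855.0
```

Per ticket the difference is a fraction of a cent. At volume, it works out to roughly $27 a day.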

LangChain’s documentation has 94% user satisfaction for chaining. For agentic patterns? Only 76%. Why? Because people struggle with state management. They forget to clear memory. They don’t handle errors. They end up with agents that loop forever or hallucinate paths.
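A common guard against runaway agents combines two checks: a hard cap on steps and detection of repeated actions. History is kept per task. The sketch below assumes actions are hashable `(tool, argument)` tuples, and `guarded_run` and `LoopDetected` are hypothetical names. The recorded history also provides the clear trail that was missing in the debugging story earlier.

```python
from typing import Callable, List, Optional, Tuple

Action = Tuple[str, str]  # (tool, argument)

class LoopDetected(RuntimeError):
    """Raised when an agent repeats itself or runs out of steps."""

def guarded_run(
    choose_action: Callable[[List[Action]], Optional[Action]],
    execute: Callable[[Action], None],
    max_steps: int = 8,
    max_repeats: int = 2,
) -> List[Action]:
    history: List[Action] = []  # fresh memory for every task, never shared across runs
    for _ in range(max_steps):
        action = choose_action(history)
        if action is None:  # the agent says it is done
            return history
        if history.count(action) >= max_repeats:
            raise LoopDetected(f"repeated {action!r} {max_repeats} times")
        execute(action)
        history.append(action)
    raise LoopDetected(f"no result after {max_steps} steps")

if __name__ == "__main__":
    stuck = lambda history: ("rewrite", "section 2")  # keeps proposing the same edit
    try:
        guarded_run(stuck, execute=lambda action: None)
    except LoopDetected as err:
        print("stopped:", err)
```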

Stanford HAI found that 68% of Fortune 500 LLM deployments started with chaining. Only after proving it worked did they add agents for specific parts. That’s the smart way. Don’t start with a Ferrari when a bicycle gets you there.

Hybrid Systems Are the Future

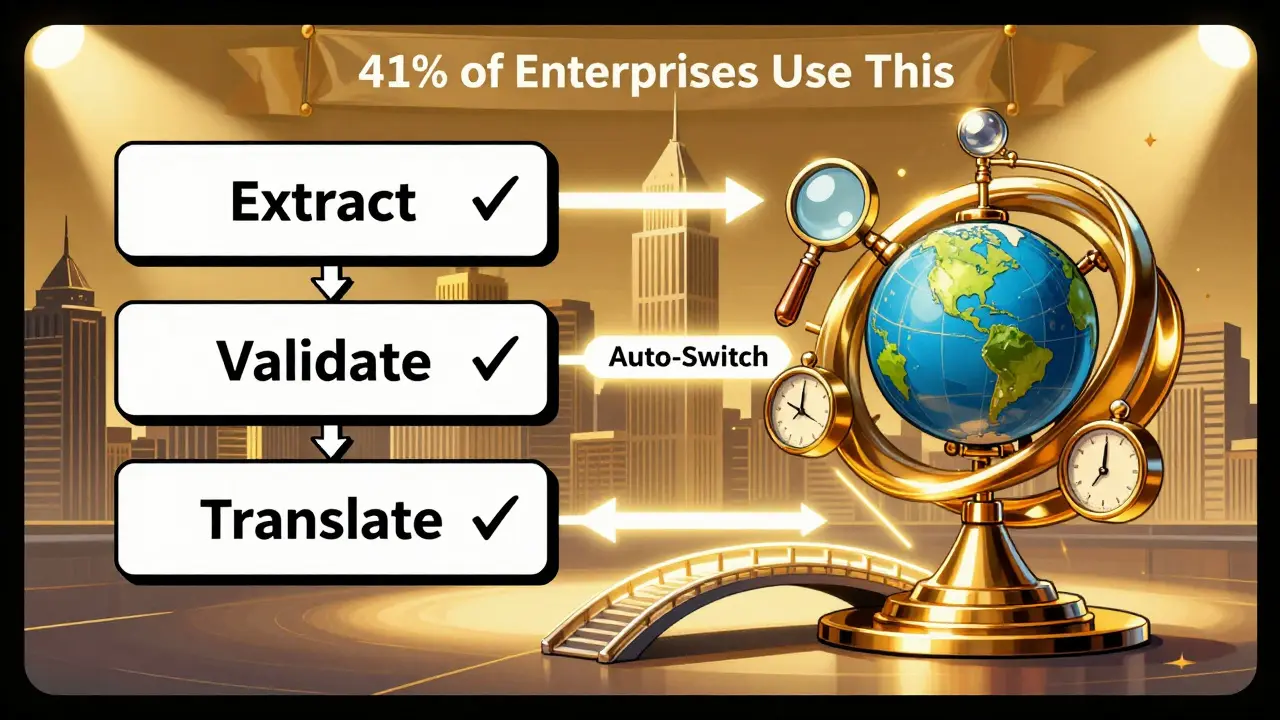

The real winners aren’t choosing one or the other. They’re combining them.

AI Competence’s 2024 data shows that 41% of successful enterprise LLM systems use hybrids. Here’s how:

- Use chaining for data cleanup, formatting, and validation

- Use agents for insight generation, decision-making, and exploration

For example: A financial firm uses chaining to extract and normalize transaction data from PDFs. Then, it hands off the cleaned data to an agent that looks for fraud patterns across months of activity. The agent can search external risk databases, compare against historical behavior, and flag anomalies. Chaining handles the boring, repeatable stuff. The agent handles the hard, unpredictable stuff.
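The financial-firm hybrid can be sketched as a chained cleanup stage that hands its output to an analysis stage. To keep the example runnable, the agentic stage is stubbed with fixed rules. In a real system, `investigate` would be an agent loop with tool access. The row format, `RISKY_MERCHANTS` (a stand-in for an external risk database), and the 3x baseline threshold are all assumptions.

```python
from statistics import mean
from typing import Any, Dict, List, Tuple

Txn = Dict[str, Any]

# --- Chained stage: fixed, deterministic steps, each feeding the next ---
def parse(rows: List[str]) -> List[Txn]:
    """Split hypothetical 'date, merchant, amount' rows into fields."""
    parsed = []
    for row in rows:
        date, merchant, amount = (part.strip() for part in row.split(","))
        parsed.append({"date": date, "merchant": merchant, "amount": amount})
    return parsed

def normalize(txns: List[Txn]) -> List[Txn]:
    """Upper-case merchant names and turn '$12.50' into 12.5."""
    return [
        {**t, "merchant": t["merchant"].upper(), "amount": float(t["amount"].replace("$", ""))}
        for t in txns
    ]

def validate(txns: List[Txn]) -> List[Txn]:
    """Drop rows with non-positive amounts."""
    return [t for t in txns if t["amount"] > 0]

def clean(rows: List[str]) -> List[Txn]:
    return validate(normalize(parse(rows)))

# --- Analysis stage (agent stubbed with rules): picks a check per transaction ---
RISKY_MERCHANTS = {"SHELLCO LTD"}  # stand-in for an external risk database

def investigate(txns: List[Txn], factor: float = 3.0) -> List[Tuple[Txn, str]]:
    baseline = mean(t["amount"] for t in txns)
    flagged = []
    for t in txns:
        if t["merchant"] in RISKY_MERCHANTS:
            flagged.append((t, "merchant on risk list"))
        elif t["amount"] > factor * baseline:
            flagged.append((t, "amount far above baseline"))
    return flagged

ROWS = [
    "2024-01-03, Coffee Shop, $4.50",
    "2024-01-04, Grocer, $62.10",
    "2024-01-05, ShellCo Ltd, $40.00",
    "2024-01-06, Grocer, $-5.00",
    "2024-01-07, Electronics Hub, $950.00",
]

if __name__ == "__main__":
    for txn, reason in investigate(clean(ROWS)):
        print(txn["date"], txn["merchant"], reason)
```

The handoff point is the single call `investigate(clean(ROWS))`. Everything before it is auditable and repeatable, and only the analysis stage needs the flexibility of an agent.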

LangChain’s latest update (v0.2.15) even lets you auto-switch from chaining to agent mode when confidence scores drop. That’s the future: intelligent pattern selection.
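The switching idea itself is easy to sketch without relying on any particular library. The router below is generic Python, not LangChain’s API. `route`, `toy_chain`, and `toy_agent` are hypothetical, and how the chain produces its confidence score is left open. A grader prompt, log-probabilities, or a separate classifier are all possible choices.

```python
from typing import Callable, Tuple

def route(
    task: str,
    chain: Callable[[str], Tuple[str, float]],
    agent: Callable[[str], str],
    threshold: float = 0.7,
) -> Tuple[str, str]:
    """Run the cheap chain first; escalate to the agent when confidence is low."""
    answer, confidence = chain(task)
    if confidence >= threshold:
        return "chain", answer
    return "agent", agent(task)

def toy_chain(task: str) -> Tuple[str, float]:
    # Hypothetical: confident on a familiar ticket type, unsure otherwise.
    if "refund" in task:
        return "standard refund reply", 0.92
    return "best guess", 0.35

def toy_agent(task: str) -> str:
    return f"researched answer for {task!r}"

if __name__ == "__main__":
    print(route("refund status for order 1182", toy_chain, toy_agent))
    print(route("conflicting tax rules across two countries", toy_chain, toy_agent))
```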

What You Should Do Right Now

If you’re starting an LLM project:

- Define your task. Can you write out every step in advance?

- If yes → start with prompt chaining. Build it. Test it. Measure it.

- If it fails because the task changes too much → then add an agent.

- Never start with agentic planning unless you’re in R&D, research, or innovation.
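The checklist above can be written as a small decision function. It is a hypothetical encoding of this article’s advice, not a standard rule. The three flags simplify questions you would answer about your own project.

```python
def recommend(steps_known: bool, chain_failed_on_variation: bool, in_rnd: bool) -> str:
    """Hypothetical encoding of the checklist: chain first, agents only when needed."""
    if chain_failed_on_variation:
        # The chain was built and measured, but inputs vary too much in places.
        return "hybrid: keep the chain, add an agent where it broke"
    if steps_known or not in_rnd:
        # Known steps, or no R&D mandate: start with prompt chaining.
        return "prompt chaining"
    return "agentic planning"  # open-ended R&D with no fixed path

if __name__ == "__main__":
    print(recommend(steps_known=True, chain_failed_on_variation=False, in_rnd=False))
```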

MIT’s AI Policy Forum found that over-engineering with agents increases costs by 3.7x and delays value delivery by over five months. That’s not innovation. That’s waste.

Simple isn’t weak. It’s strategic.

Is prompt chaining just a series of prompts?

Yes, but it’s more than just stringing prompts together. It’s a structured, stateless workflow where each output becomes the next input with no memory between steps. It’s designed for reliability, not creativity. Tools like LangChain help orchestrate these sequences, but the core idea is simplicity: one step, then the next, with clear handoffs.

Can agentic systems be used for routine tasks?

Technically yes, but it’s like using a sledgehammer to hang a picture. Agentic systems are built for uncertainty and adaptation. For routine, repetitive tasks, they’re overkill. They cost more, run slower, and are harder to audit. Most enterprises that try this end up regretting it. Stick to chaining for predictable workflows.

Why do agentic systems cost so much more?

Because they do more. They make multiple API calls per task (sometimes 15-25+) to check memory, retry failed steps, search external tools, and evaluate outcomes. They also use more tokens because they generate internal reflections and reasoning traces. That adds up fast. Prompt chaining avoids all that by design: one call per step, one output, move on.

Are agentic systems more accurate?

Only in complex, open-ended tasks. For things like analyzing research papers, debugging code across multiple files, or adjusting strategies based on new data, agents outperform chaining by 42%. But for fixed tasks-like translating documents or filling out forms-chaining is actually more accurate because there’s no room for hallucination or deviation.

Which pattern is better for compliance?

Prompt chaining. Every step is traceable. You can log each input and output. Auditors can verify each decision point. Agentic systems, by contrast, often operate like black boxes. Their internal reasoning isn’t always visible, which makes them risky in regulated industries like healthcare or finance. That’s why 83% of financial firms use chaining for customer-facing workflows.

Do I need special tools to use either pattern?

You can build both manually with raw API calls, but it’s messy. For chaining, LangChain and LlamaIndex are popular. For agentic systems, AutoGen (Microsoft) and CrewAI are leading tools. These frameworks integrate with models such as GPT-4, Claude 3, and Llama 3. They help manage state, memory, and orchestration, but they don’t replace good design. Start simple, then add complexity only if needed.

Can I switch from chaining to agentic later?

Absolutely. In fact, that’s the recommended approach. Build your workflow with chaining first. Prove it works. Then identify the parts where the AI needs to adapt-those are the spots to hand off to an agent. Many successful systems now use chaining for data prep and agents for insight generation. It’s not an either/or. It’s a layered strategy.

4 Comments

Nick Rios

Prompt chaining is the quiet workhorse no one talks about but everyone relies on. I’ve seen teams burn months trying to make agents do invoice processing-then switched to chaining and cut costs in half. No magic, no drama. Just clean, auditable steps. It’s not sexy, but it works.

Amanda Harkins

Agentic systems feel like having a brilliant but chaotic intern. They’ll surprise you with insight… and also delete your entire database because they ‘thought it was redundant.’ I’ve been there. Chaining is the therapist who listens without judgment. Agents? They’re the one who gives you unsolicited life advice while eating your lunch.

Jeanie Watson

Why are we even debating this? Everyone knows agents are the future. Chaining is just lazy engineering dressed up as ‘practical.’

Tom Mikota

Actually, wait, did you say ‘agentic planning’? That’s not even a real term. It’s just ‘LLM with memory’ wrapped in buzzword soup. And ‘prompt chaining’? That’s just… writing prompts in sequence. Why does this feel like a TED Talk written by a marketing intern who just discovered ‘orchestration’?

Also, ‘42% higher success rate’? Source? Where’s the data? I’m not buying this without a link to the paper. And why is LangChain’s satisfaction rate lower for agents? Because people don’t know how to debug them. That’s not a feature, it’s a bug.

And who’s ‘AI Competence’? Is that a real company? Or a ghostwritten blog?