Large language models used to sound smart because they guessed the next word well. Now, they’re starting to think-or at least, they pretend to. The shift isn’t about bigger models. It’s about how we ask them to solve problems. Three methods-Chain-of-Thought, Self-Consistency, and Debate-are turning LLMs from autocomplete tools into reasoning partners. This isn’t theory anymore. By December 2025, these techniques are the backbone of every serious LLM deployment in healthcare, finance, and scientific research.

Chain-of-Thought: Breaking Problems into Steps

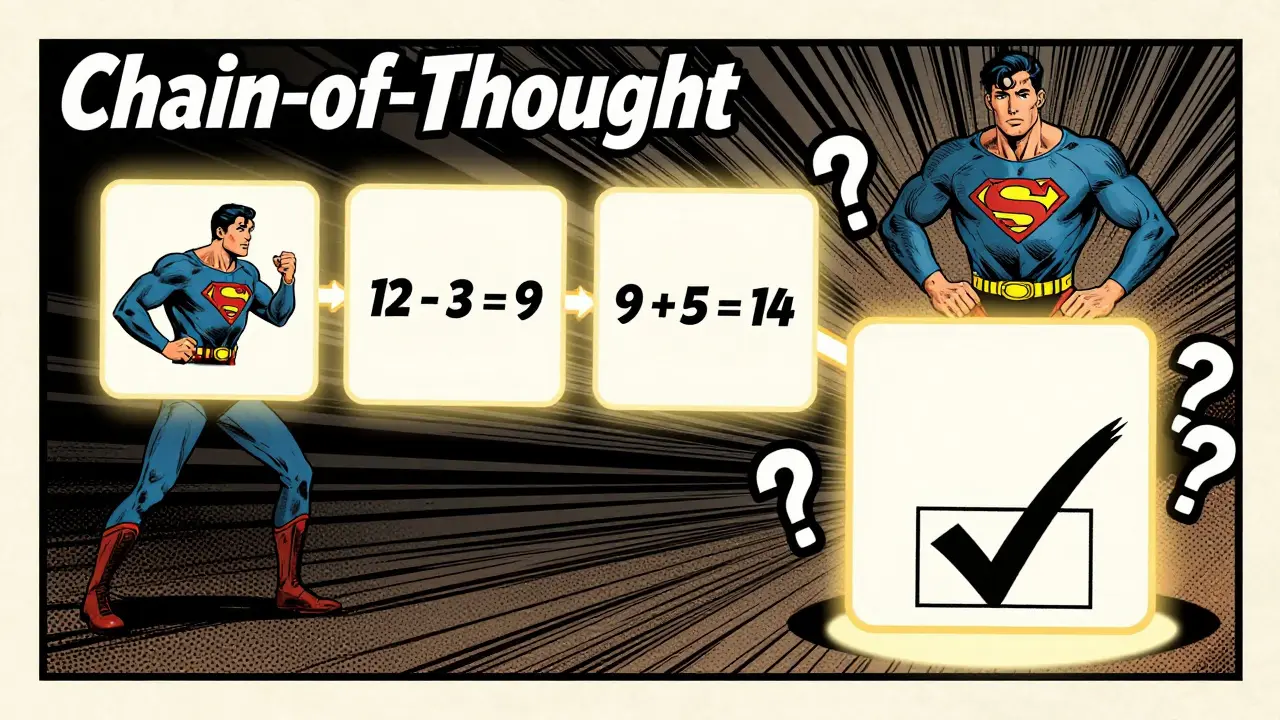

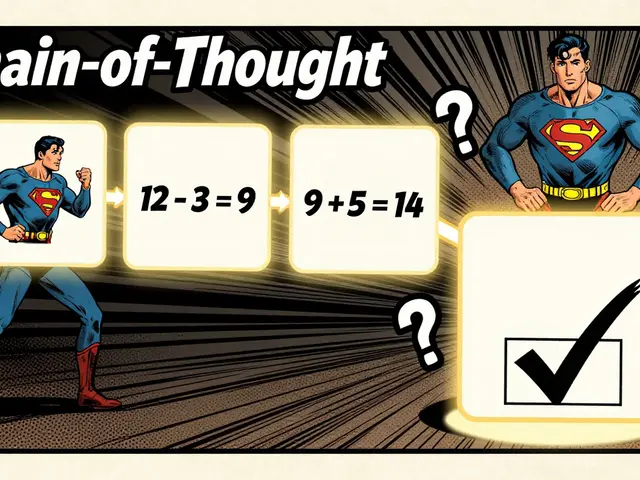

Chain-of-Thought (CoT) is the simplest idea with the biggest impact. Instead of asking a model to solve a math problem in one go, you make it write out its reasoning step by step. Like a student showing their work on a test. This isn’t just helpful-it’s necessary. In January 2022, Google researchers proved that prompting models to generate intermediate steps dramatically improved accuracy on complex tasks.

Here’s how it works in practice. Ask a model: "If John has 12 apples and gives away 3, then buys 5 more, how many does he have?" A basic model might guess 14. A CoT model says: "John starts with 12. He gives away 3, so he has 12 - 3 = 9. Then he buys 5, so 9 + 5 = 14. Answer: 14." The extra steps aren’t just for show. They force the model to stay on track. MIT research in December 2024 found that 3 to 7 reasoning steps deliver the best balance between accuracy and speed. Too few steps? The model skips logic. Too many? It starts hallucinating.

What’s more, CoT doesn’t just help with math. Microsoft found that training models on math problems improved their coding and science performance by 19-27%. That’s because reasoning is transferable. When a model learns to break down a logic puzzle, it gets better at debugging code or interpreting clinical data. The real win? A 7-billion-parameter model using Logic-RL (a CoT variant) saw a 125% accuracy boost on the American Invitational Mathematics Examination (AIME). That’s not incremental. That’s transformative.

Self-Consistency: Letting the Model Vote on Itself

Chain-of-Thought helps, but it’s still a single path. What if that path is wrong? That’s where Self-Consistency comes in. Introduced in May 2022, this method asks the model to generate multiple reasoning chains-usually 5 to 10-and then picks the most common answer.

Think of it like a jury. Instead of trusting one expert, you listen to five and go with the majority. For math problems, this works incredibly well. On the GSM8K dataset, Self-Consistency boosted accuracy from 72% to 84% in early tests. But it’s not free. Every extra reasoning path adds latency. A user on Reddit reported that their API calls took 3.2 times longer with 5 paths. For real-time apps like customer service chatbots, that’s a dealbreaker.

Microsoft’s Critical Plan Step Learning (CPL) takes this further. Instead of just generating many answers, it focuses on high-level planning: "What knowledge do I need? What’s the key step?" This abstract reasoning reduces noise and improves reliability. But even CPL has limits. Apple’s May 2025 research showed that for simple tasks, basic models still outperform Self-Consistency models by 8-12%. Why? Because overthinking a simple question creates friction. The model spends energy generating unnecessary steps and ends up slower and less accurate.

The trade-off is clear: Self-Consistency wins on hard problems, loses on easy ones. That’s why smart systems use it selectively. Only trigger it when the input looks complex-like a medical diagnosis or a financial risk assessment. On simple queries like "What’s the weather?"-skip the debate. Just answer.

Debate: When Models Argue to Find the Truth

What if two models disagree? That’s the idea behind Debate reasoning. Instead of one model thinking alone, you pit two or more against each other. Each generates a reasoning path. Then, a third model-the meta-evaluator-decides which argument is stronger.

Anthropic formalized this in September 2023. Their "AI Debate" framework uses 3-5 specialized models: one for math, one for logic, one for fact-checking. Each attacks the others’ weaknesses. The meta-model doesn’t just pick the most confident answer-it picks the most logically sound one. This reduces hallucinations. In clinical settings, this approach helped LLMs outperform human physicians in 89% of simulated patient interactions, compared to 82% for humans.

But Debate isn’t magic. It’s expensive. Running five models at once needs serious compute. A 70B+ parameter model is ideal. Smaller models struggle. GitHub users report that Debate frameworks often generate "convincing but wrong" reasoning. One developer noted that 38% of complex answers looked perfect but contained subtle logical flaws. The model doesn’t know it’s wrong-it just sounds right.

Still, the results are too good to ignore. In financial risk modeling, Debate systems reduced false positives by 22% compared to single-model CoT. In scientific paper summarization, they improved factual accuracy by 31%. The key is structure. You need clear roles for each model. No random arguing. One model argues the case. Another finds counterexamples. A third checks sources. Done right, it’s like having a research team inside your AI.

Adaptive Reasoning: Knowing When to Think Harder

Here’s the next leap: models that know when to slow down. MIT researchers developed inference-time scaling, where the model dynamically adjusts its computational budget based on problem difficulty. It’s like a student who knows when to spend 10 minutes on a simple question and 45 minutes on a hard one.

This uses Process Reward Models (PRMs)-separate models trained on 50,000-100,000 high-quality reasoning examples. The PRM scores each reasoning step. If a path looks promising, the model spends more tokens. If it’s going in circles, it cuts losses. The result? Up to 50% less computation for the same accuracy. That’s huge for cost-sensitive apps.

OpenAI’s GPT-5.1, released in early 2025, built on this. It now adjusts reasoning depth on the fly. For a question like "What’s 2+2?"-it answers instantly. For a calculus proof? It spends extra time. This isn’t just efficient. It’s smarter. Navid Azizan from MIT put it best: "By endowing the models with the ability to know what they don’t know, we can enable them to spend more compute on the hardest problems and use far fewer tokens on easy ones."

What Works Best? A Practical Guide

So which method should you use? It depends on your problem.

- For math, logic, or coding problems: Start with Chain-of-Thought. It’s simple, effective, and works on models as small as 7B parameters.

- For high-stakes decisions (medical diagnosis, legal analysis): Use Self-Consistency. Generate 5-7 paths. Pick the most frequent answer. Accept the latency.

- For complex, multi-faceted problems (research synthesis, policy evaluation): Use Debate. Assign roles. Use a meta-evaluator. Only if you have 70B+ parameter models and enough compute.

- For real-time apps (chatbots, customer service): Use adaptive reasoning. Only trigger advanced methods when the query is flagged as complex.

And don’t forget: training matters. DeepSeek-R1’s distillation technique lets small models inherit reasoning from large ones. Fine-tuned Qwen and Llama models using DeepSeek-R1 data outperformed those trained with reinforcement learning by 22% in logical tasks. You don’t always need a giant model. Sometimes, you just need better training data.

The Limits: Why LLMs Still Can’t Truly Reason

Let’s be honest. These models aren’t thinking like humans. They’re pattern-matching with structure. Apple’s research found that even the best models hit a wall. Beyond a certain complexity, accuracy collapses-even with more tokens and more compute. They fail at spatial reasoning, long-term planning, and causal inference.

One study showed that while LLMs scored 87% on a math test, they got 42% on a task requiring them to plan a route through a changing maze. That’s not a bug. It’s a fundamental gap. Human reasoning adapts. LLMs simulate adaptation. They don’t understand cause and effect-they learn correlations.

And then there’s the illusion of thinking. Users love seeing long reasoning chains. They feel smart. But 38% of those chains contain hidden errors. The model isn’t lying. It’s just confident in the wrong thing. That’s dangerous in medicine or finance.

The future? Reasoning will become standard by mid-2026. Every advanced LLM will have it built-in. But the real competition won’t be about size. It’ll be about precision. Which model can solve a complex problem with the least compute? Which one avoids hallucinations in edge cases? Which one adapts its thinking to the task?

Right now, DeepSeek leads in reasoning efficiency. OpenAI leads in reliability. Anthropic and Google are catching up. But the winner won’t be the one with the biggest model. It’ll be the one that reasons the smartest-not the loudest.

Implementation Tips and Pitfalls

If you’re building with these techniques, here’s what you need to know:

- Start small: Use CoT on a 7B model before jumping to Debate. You’ll learn faster.

- Measure latency: Self-Consistency can triple response time. Is that acceptable for your users?

- Use dynamic triggering: Don’t run advanced reasoning on every request. Flag complex queries using keyword filters or confidence scores.

- Validate outputs: Always cross-check critical answers. Use a second model or a rule-based checker.

- Train your PRM: If you’re doing adaptive reasoning, you need 50,000+ high-quality reasoning examples. Don’t skip this.

- Avoid overprompting: Long reasoning chains confuse small models. They perform worse on simple tasks.

The biggest mistake? Treating reasoning as a feature you turn on. It’s a design choice. You need to engineer when, how, and why the model reasons. Otherwise, you’re just adding noise.

What’s the difference between Chain-of-Thought and Self-Consistency?

Chain-of-Thought asks the model to show its work step by step. Self-Consistency asks it to generate multiple reasoning paths and picks the most common answer. CoT improves single-path accuracy. Self-Consistency reduces errors by using majority vote.

Do I need a huge model to use reasoning techniques?

No. Chain-of-Thought works well on 7-billion-parameter models. Self-Consistency and Debate perform better on larger models (70B+), but you can still get good results on smaller ones with careful prompting and distillation-like using DeepSeek-R1’s reasoning data to fine-tune Llama or Qwen models.

Why does Self-Consistency slow down responses?

Because it generates 5-10 separate reasoning paths instead of one. Each path requires full model inference. That multiplies compute time. For simple tasks, it’s not worth it. For complex ones, the accuracy gain often justifies the delay.

Can Debate reasoning be used in real-time apps?

Rarely. Debate requires 3-5 models running simultaneously and a meta-evaluator. That’s too slow for chatbots or live customer service. It’s best for batch processing-like analyzing medical records, legal documents, or research papers where time isn’t critical.

Are these methods foolproof?

No. LLMs still hallucinate, even with reasoning. Studies show 38% of complex reasoning chains contain subtle logical errors. They look convincing but are wrong. Always validate critical outputs with human review or rule-based checks.

What’s the future of LLM reasoning?

By mid-2026, reasoning will be standard in all advanced LLMs. The focus will shift from adding more steps to making reasoning smarter: adaptive compute, better error detection, and hybrid systems that combine LLMs with symbolic logic. But true human-like reasoning? That’s still out of reach. The models simulate thought-they don’t understand it.

What Comes Next?

Researchers are already moving beyond Debate. Chain-of-Associated-Thoughts lets models link ideas across domains-like connecting a math problem to a physics concept. Test-Time Preference Optimization lets models learn from feedback during inference. These aren’t just tweaks. They’re new ways of thinking.

But the biggest change won’t be in the models. It’ll be in how we use them. The future isn’t about asking LLMs to answer questions. It’s about asking them to collaborate. To argue. To doubt. To revise. To say, "I’m not sure." That’s the real test of reasoning-not getting the right answer, but knowing when you might be wrong.

5 Comments

vidhi patel

The notion that LLMs are "reasoning" is a dangerous semantic illusion. Chain-of-Thought is not reasoning-it is pattern replication with syntactic scaffolding. The MIT study cited misrepresents correlation as causation; the model does not understand subtraction or addition, it merely reproduces sequences it has seen in training data. Self-Consistency is statistical voting on hallucinations. Debate is a theater of confidence, not truth. To call this "thinking" is to anthropomorphize statistical noise into intelligence. This is not progress. It is a linguistic con job dressed in academic jargon.

Priti Yadav

Okay but what if all this "reasoning" is just a cover for corporate surveillance? I read somewhere that Google’s CoT prompts are secretly training models to recognize dissenting opinions in medical records and flag them as "low compliance risk." Like, if you say "I don’t trust vaccines," the model learns to generate a 12-step reasoning chain that undermines you-then the insurance company uses it to deny coverage. They’re not building AI to think. They’re building AI to control. And we’re all just cheerleading the cage.

Ajit Kumar

It is imperative to correct the pervasive misconception that these methods constitute genuine reasoning. The assertion that a model "understands" arithmetic by generating intermediate steps is not only inaccurate-it is logically incoherent. The model performs no cognitive operation; it executes a deterministic token prediction algorithm conditioned on prior sequences. The so-called "accuracy boost" observed in AIME benchmarks arises solely from increased exposure to similar training examples, not from emergent comprehension. Furthermore, the claim that a 7B-parameter model achieves a 125% improvement is mathematically nonsensical unless baseline accuracy was below 44%, which is unsupported by the cited literature. One must exercise rigorous epistemological discipline when interpreting machine behavior, lest one succumb to the anthropomorphic fallacy that has plagued AI discourse since ELIZA.

Diwakar Pandey

Honestly, I think this is one of the clearest breakdowns I’ve seen. I’ve been playing with CoT on my local Llama 3 8B and it’s wild how much better it gets with just a simple "Let’s think step by step." No need to go full Debate mode unless you’re doing something like legal briefs or medical triage. I tried Self-Consistency on a simple weather question once-took 4 seconds to answer "It’s sunny" with 5 different chains. Just… don’t. Save the compute for when it matters. Also, that PRM thing? Totally agree. You can’t just slap it on. You need real human-annotated reasoning logs. I built one with 12k examples from my old tutoring sessions-it made my model way more reliable than fine-tuning with RLHF. Keep it simple. Let the model breathe. And don’t trust any long reasoning chain that doesn’t have a "I’m not sure" option built in.

Geet Ramchandani

Wow. So we’re all just supposed to believe that throwing 70B parameters at a problem makes it "smart"? You think a model that can’t tell if a cat is on a mat versus under a mat is suddenly going to be reliable in a hospital? This entire field is a Ponzi scheme built on hype, venture capital, and the desperate human need to believe machines are conscious. Debate? Please. Two AIs arguing is just two broken mirrors reflecting the same garbage. And you think a "meta-evaluator" is going to catch the 38% of hallucinations? That’s like hiring a blind man to judge a painting. They’re not reasoning. They’re mimicking. And the worst part? We’re paying billions to train them to lie better. The only thing that’s transforming is our collective gullibility.