Large language models used to sound smart because they talked a lot. Now, they’re getting smarter because they think better. The breakthrough isn’t in bigger models or more data-it’s in how they organize their thoughts. Enter Structured Reasoning Modules: a new way to make LLMs plan, check their work, and fix mistakes before answering. This isn’t just a tweak. It’s a redesign of how AI reasons.

Why Old Reasoning Methods Are Breaking Down

For years, the go-to method for complex reasoning was Chain-of-Thought (CoT). You’d ask an LLM to show its work: step by step, like a student solving a math problem. It worked okay for simple stuff. But when problems got harder-Olympiad math, logic puzzles, planning multi-step tasks-the model would spiral. It’d write 50 lines of reasoning, repeat itself, invent facts, or get stuck in loops. And there was no way to tell where it went wrong. A 2025 study showed that on hard math benchmarks, standard CoT only got 58.7% right. That’s barely better than guessing. The problem? The model treated reasoning like a single, unbreakable stream of words. No checkpoints. No feedback. Just hope it got lucky.How Structured Reasoning Changes Everything

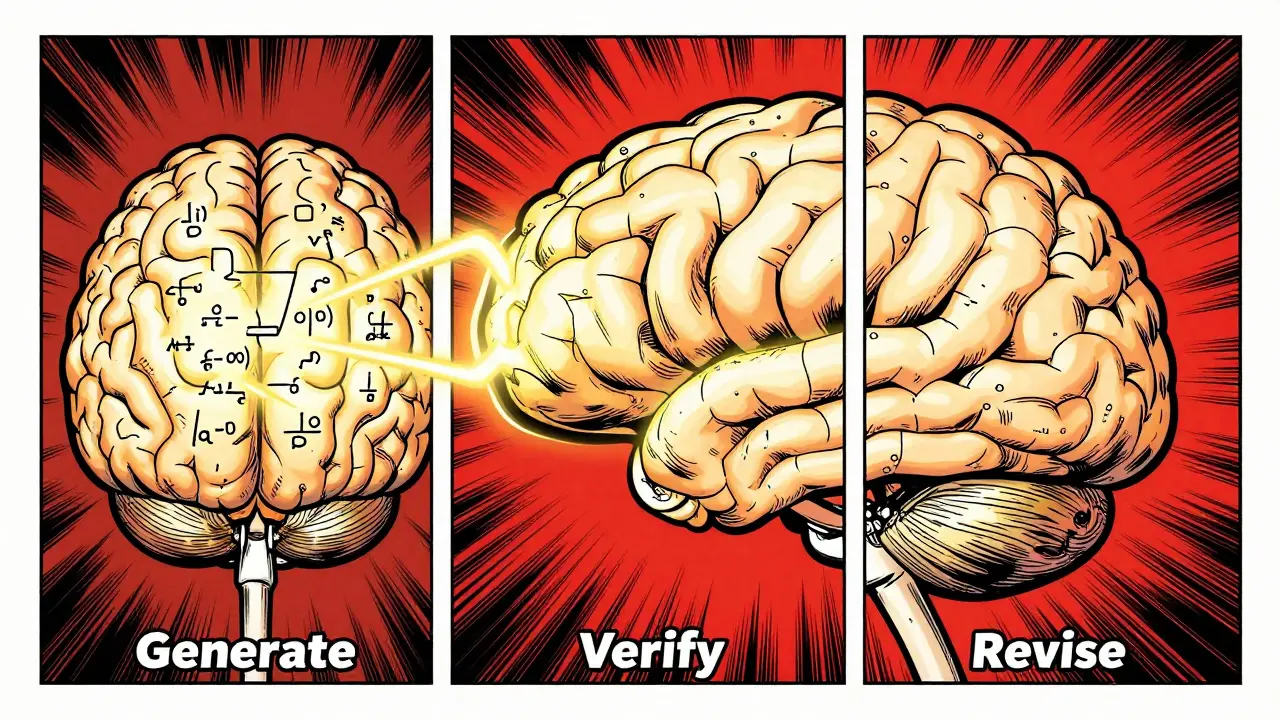

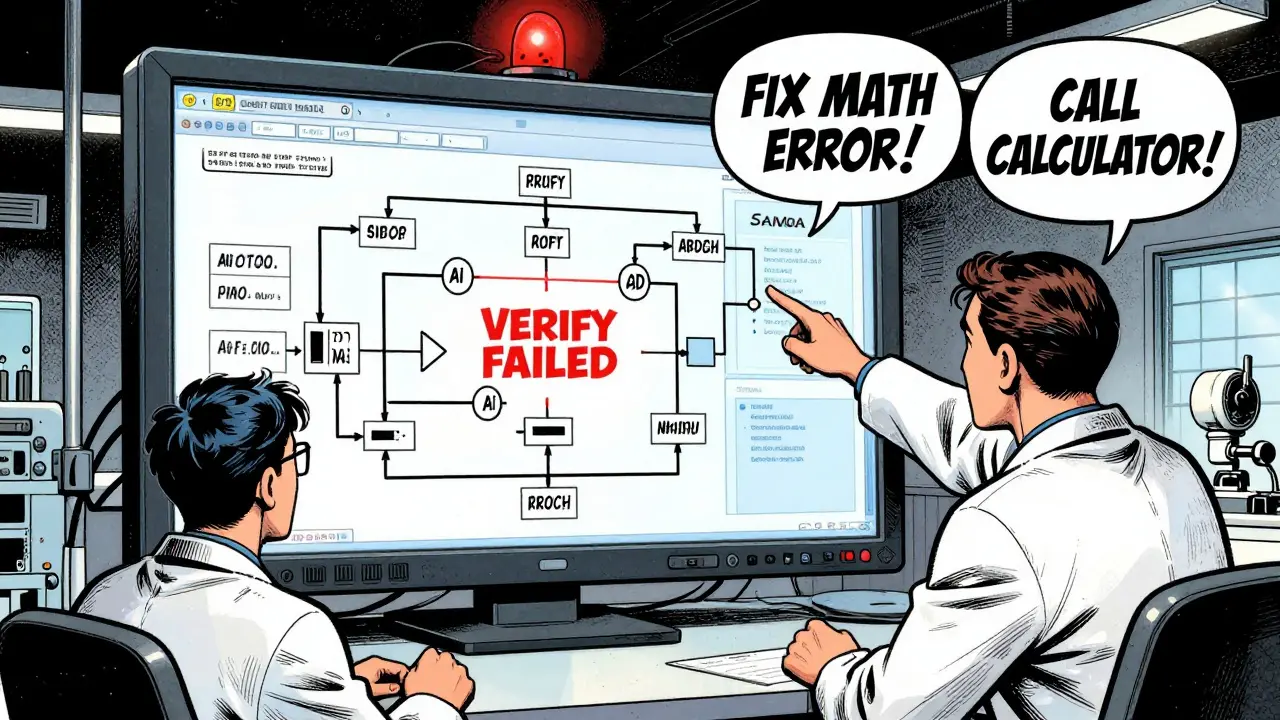

In January 2026, researchers introduced Structured Reasoning (SCR), a framework that breaks reasoning into three clear, separate stages: Generate, Verify, Revise. Think of it like writing an essay with an editor and a proofreader built in.- Generate: The model writes its first answer. Nothing new here-it’s still using standard text prediction.

- Verify: The model pauses and asks itself: “Is this right?” It doesn’t just guess. It uses a trained verification module that checks logic, math, and consistency with 94.3% accuracy.

- Revise: If the verification fails, the model doesn’t just try again randomly. It targets the exact flaw. Was the math wrong? Did it misread the question? It fixes only what’s broken.

The Secret Sauce: Dynamic Termination Supervision

One of the most powerful parts of SCR is how it decides when to stop thinking. Traditional models keep generating until they hit a token limit. That’s why you sometimes get answers that go on for pages-full of fluff and errors. SCR uses something called Dynamic Termination Supervision (DTS). It watches the verification confidence score. If the model is 95% sure it’s right, it stops. If it’s only 60% sure, it goes back to revise. No more wasted tokens. No more hallucinations dragging on. MIT’s James Wilson called this “the solution to the uncontrolled reasoning length problem.” And it works. SCR reduces redundant reasoning steps by nearly 40% compared to long-chain CoT. That means faster responses and lower costs.

Tool Use: When the Model Needs a Calculator

SCR doesn’t just think-it can reach out. The latest version lets the model use external tools during the Revise phase. Need to calculate a complex physics equation? The model can call a calculator. Need to check a date or a formula? It can query an API. This isn’t just copying a tool. It’s integrating tool use into the reasoning loop. In early tests, adding tool use improved performance on physics problems by 18.7%. Imagine an LLM that doesn’t just guess the answer to a chemistry problem-it runs the reaction simulation, checks the result, and then explains it. That’s the future.How It Compares to Other Methods

You’ve probably heard of Tree-of-Thought or Graph-of-Thought. Those try to explore many reasoning paths at once. Like a chess player thinking 10 moves ahead in every direction. It’s powerful but expensive. Each path needs its own token budget. You end up using 2x or 3x more compute. SCR does something smarter. It picks one path, then refines it. It’s like a golfer who takes one shot, sees where the ball landed, adjusts their stance, and takes a better shot. Less noise. More precision. Here’s how they stack up:| Method | Olympiad Math Accuracy | Redundant Steps | Inference Overhead |

|---|---|---|---|

| Chain-of-Thought (CoT) | 58.7% | High | Baseline |

| Tree-of-Thought (ToT) | 67.1% | Very High | +45% |

| Structured Reasoning (SCR) | 71.4% | Low | +18-22% |

| Self-Consistency | 64.2% | Medium | +25% |

Who’s Using It-and Who Should Be

Right now, adoption is concentrated in places where mistakes cost money or lives:- Financial modeling: 28 Fortune 100 companies use SCR to validate risk forecasts and investment logic.

- Scientific research: 19 labs use it to check hypotheses in physics and biology papers before submission.

- Legal analysis: 12 firms use SCR to parse contracts and flag contradictions.

Implementation: It’s Not Easy, But It’s Getting Better

Setting up SCR isn’t plug-and-play. You need:- High-quality training data: 20,000+ reasoning trajectories with verified correct and incorrect paths.

- Two-stage training: First, supervised fine-tuning. Then, reinforcement learning to optimize verification and revision.

- Extra compute: Inference takes 18-22% longer. You’ll need at least an NVIDIA A100 with 80GB VRAM.

- Expertise: Teams need experience with RL and structured data pipelines.

What’s Next?

The big names are catching on. Anthropic’s Claude 3.5, launching in March 2026, will have SCR built in. Meta’s Llama-4 roadmap includes structured reasoning as a core feature. That’s a signal: this isn’t a research toy anymore. It’s becoming standard. The next frontier? Making SCR work in messy, ambiguous domains-like creative writing, customer service, or emotional reasoning. Right now, it shines in logic and math. But the authors are already working on “uncertainty-aware verification” to handle gray areas.Final Thoughts

Structured Reasoning Modules don’t make LLMs smarter. They make them more disciplined. They force the model to slow down, check its work, and fix mistakes-not just guess harder. In a world where AI errors can mislead investors, misdiagnose patients, or misinterpret laws, that discipline isn’t a luxury. It’s a requirement. The future of LLMs won’t be about who has the biggest model. It’ll be about who builds the most reliable one. And right now, structured reasoning is the clearest path there.What’s the main advantage of Structured Reasoning over Chain-of-Thought?

Structured Reasoning breaks reasoning into distinct, evaluable stages-Generate, Verify, Revise-allowing each part to be trained and optimized separately. Chain-of-Thought treats reasoning as one long, unbroken text stream, making it impossible to isolate or fix errors. SCR improves accuracy by 12.7% on hard math problems and reduces redundant steps by nearly 40%.

Does Structured Reasoning work on all types of problems?

No. SCR excels at structured, logic-based tasks like math, physics, legal analysis, and planning. On simple problems like grade-school math, where standard CoT already hits 98% accuracy, SCR offers less than 1.5% improvement. Its strength is in complex, high-stakes reasoning where errors are costly.

How much more computing power does Structured Reasoning need?

Inference time increases by 18-22% due to the extra verification and revision steps. Training requires 35% more time than standard fine-tuning because of the multi-stage process. You’ll need at least an NVIDIA A100 with 80GB VRAM to run it efficiently.

Can I use Structured Reasoning with my current LLM like Llama-3 or Mistral?

Yes. SCR is architecture-agnostic and works with Llama-3, Qwen, Mistral, and other transformer-based models. But you’ll need to modify your training pipeline to include structured reasoning trajectories and implement the Generate-Verify-Revise logic. It’s not a drop-in module-it’s a redesign.

Is Structured Reasoning going to become standard in AI?

Almost certainly. Major players like Anthropic and Meta are building it into their next-gen models. Gartner predicts 85% of enterprise LLMs using AI for mission-critical reasoning will include structured reasoning by 2028. The EU is even considering giving it regulatory advantages because of its transparency.

What’s the biggest challenge in adopting Structured Reasoning?

The biggest hurdle is data. You need thousands of high-quality reasoning traces labeled with correct answers, verification outcomes, and revision paths. Creating these manually is time-consuming. Most teams now use strong teacher models like GPT-4 or Claude 3 Opus to generate the initial data, then add human oversight for edge cases.