Tag: LLM security

Tamara Weed, Jan 29, 2026

Model access controls determine who can use which LLMs and what they can ask for. Without them, companies risk data leaks, compliance violations, and security breaches. Learn how RBAC, CBAC, and AI guardrails protect sensitive information.

Tamara Weed, Jan 27, 2026

Privacy-Aware RAG (retrieval-augmented generation) protects sensitive data in AI systems by filtering out personal information before it reaches large language models. Learn how it works, where it's used, and why it's becoming essential for compliance.

Tamara Weed, Nov 13, 2025

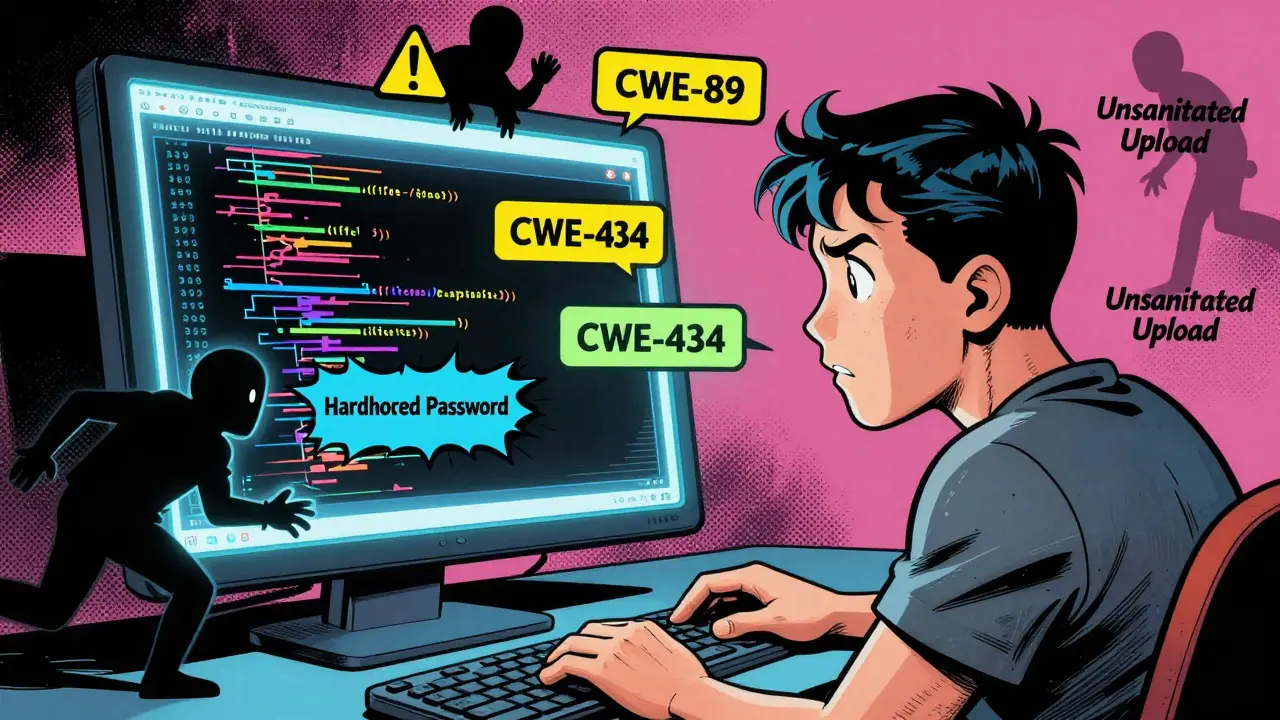

Anti-pattern prompts in vibe coding lead to insecure AI-generated code. Learn the most dangerous types of prompts, why they fail, and how to write secure, specific instructions that prevent vulnerabilities before they happen.

Tamara Weed, Nov 3, 2025

Learn how access controls and audit trails protect sensitive data in LLM systems. Discover what logs to capture, how roles work, and why compliance isn't optional in 2025.