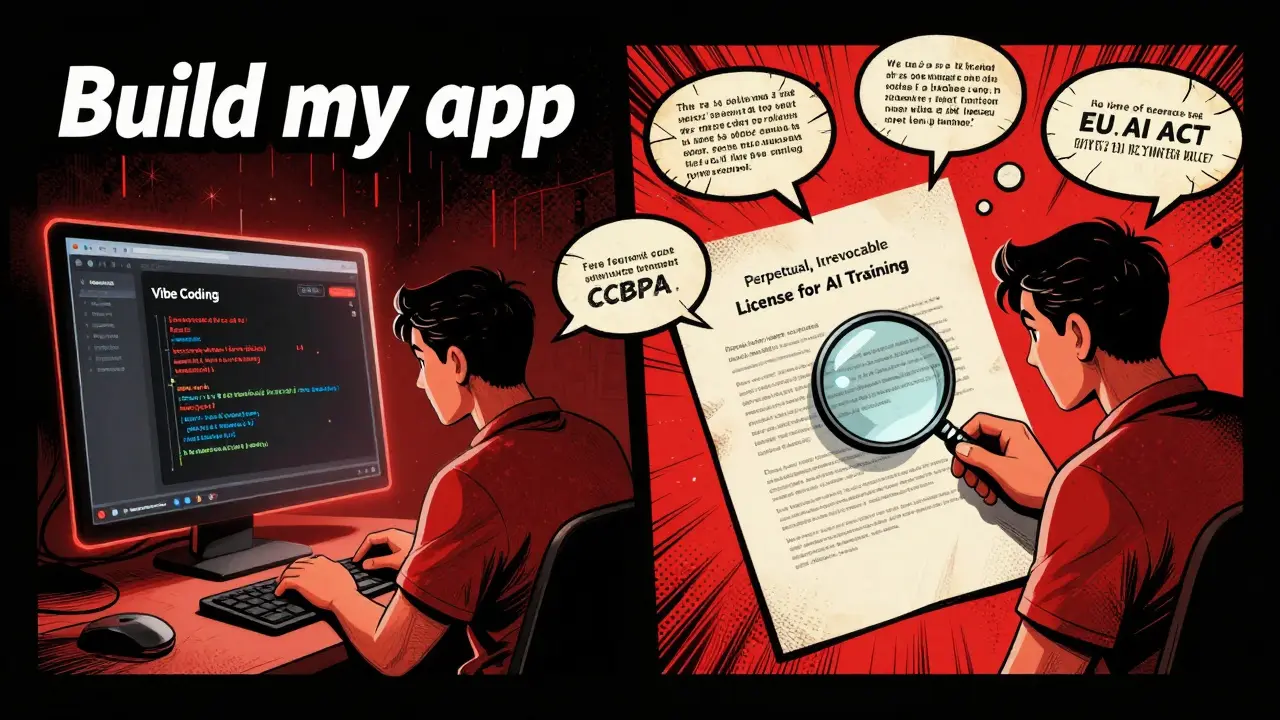

When you build an app using Vibe Coding platforms, you're not just writing code; you're handling user data. And that data comes with legal obligations. Many developers assume that because the platform generates their app’s code, it also handles their legal documents. Vibe Coding doesn’t. It’s a common mistake. And it’s getting apps rejected from the App Store and Google Play every day.

What Vibe Coding Actually Does

Vibe Coding isn’t one tool. It’s a family of AI-powered platforms: Vibe Coding AI, Vibecode App Builder, and Vibecoding Build. Each helps developers create apps faster, using prompts instead of typing every line of code. But none of them generate legally compliant Terms of Service (ToS) or Privacy Policies for your app. They generate code. Not contracts.

Take Vibecode App Builder. It lets you build React Native apps by describing what you want. You say: “I need a login screen with email and password.” It builds it. But when users sign up, their emails are sent to Vibecode’s servers. Why? To train its AI. That’s not hidden. It’s in their Terms of Service: users grant Vibecode a “perpetual, irrevocable license” to use their code inputs for AI training. And once it’s used? It’s permanent. No undo button.

That’s the problem. Your app might collect emails. It might track usage. It might connect to payment processors. But if your Privacy Policy doesn’t mention that user data goes to Vibecode for AI training, Apple and Google will reject your app. Period.

The Hidden Data Flow You Can’t Ignore

Most developers think their app’s data stays inside their app. It doesn’t. Here’s what actually happens:

- When a user types a query into your app built with Vibe Coding AI, that query is sent to Vibecoding’s servers.

- Your app might collect an email address for login. That email is stored in your database, but it’s also sent to Vibecode to improve their AI.

- Your app’s code is generated from user prompts. Those prompts become training data for Vibecode’s models.

- Your app uses analytics tools like Firebase or Mixpanel? Those are third-party services. Your Privacy Policy must name them.

This isn’t theoretical. In Q1 2025, 68% of app store rejections for AI-built apps were due to missing or inaccurate Privacy Policies, according to MobileDevHQ. One developer on Reddit spent three weeks building an app with Vibecode. Got rejected. Turned out his policy didn’t mention that user inputs were permanently used to train AI. He had to restart, this time with iubenda.
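One way to keep track of the flows listed above is a small data-flow inventory that you maintain alongside the app. The sketch below is illustrative only: the `DataFlow` class, the `FLOWS` entries, and the helper names are hypothetical, and the recipients simply mirror the examples in this article rather than any platform's documented behavior.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataFlow:
    data: str        # what is collected
    recipient: str   # who receives it
    purpose: str     # why it is sent
    permanent: bool  # True if the use cannot be undone (e.g. AI training)


# Hypothetical inventory mirroring the flows described in this article.
FLOWS = [
    DataFlow("user query", "Vibe Coding platform", "AI training", True),
    DataFlow("email address", "app database", "login", False),
    DataFlow("email address", "Vibe Coding platform", "AI training", True),
    DataFlow("usage events", "Firebase", "analytics", False),
]


def third_parties(flows, first_party="app database"):
    """Return the sorted recipients that the Privacy Policy has to name."""
    return sorted({f.recipient for f in flows if f.recipient != first_party})


def permanent_uses(flows):
    """Return the flows whose use is irreversible and needs its own disclosure."""
    return [f for f in flows if f.permanent]
```

Calling `third_parties(FLOWS)` gives you the list of services to name in the policy, and `permanent_uses(FLOWS)` gives you the flows that need an AI training disclosure.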

Why Generic AI Generators Fail

You might think: “I’ll just use ChatGPT to write my Privacy Policy.” Don’t. Generic AI tools don’t know the difference between Vibecode’s data practices and Firebase’s. They’ll write vague stuff like “we collect data to improve services.” That’s not enough.

GDPR, CCPA, and the EU AI Act require specifics:

- What data is collected?

- Why is it collected?

- Who is it shared with?

- How long is it kept?

- Do users have the right to delete it?

And for Vibe Coding apps? You need one more: Is user data permanently used for AI training?

The California Privacy Protection Agency made it clear in February 2025: if data is used permanently for AI training, you can’t rely on opt-out. You need explicit opt-in consent. Vibecode’s Terms say their AI training effects are “irreversible.” That means your Privacy Policy must say the same thing, and you must get user permission before collecting data.
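In code, the opt-in requirement described above comes down to a default of "no consent" plus a gate in front of anything that forwards user input. The following is a minimal sketch under assumptions: `ConsentStore`, `forward_for_training`, and the `send` callback are hypothetical names, the store is in-memory only, and nothing here reflects a real platform SDK.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Dict, Optional


@dataclass
class ConsentRecord:
    user_id: str
    ai_training_opt_in: bool = False        # default is "no consent"
    recorded_at: Optional[datetime] = None  # when the user opted in


class ConsentStore:
    """In-memory store; a real app would persist these records."""

    def __init__(self) -> None:
        self._records: Dict[str, ConsentRecord] = {}

    def record_opt_in(self, user_id: str) -> None:
        # Called only after the user actively agrees (e.g. ticks a checkbox).
        self._records[user_id] = ConsentRecord(
            user_id, True, datetime.now(timezone.utc)
        )

    def has_opted_in(self, user_id: str) -> bool:
        record = self._records.get(user_id)
        return bool(record and record.ai_training_opt_in)


def forward_for_training(
    store: ConsentStore, user_id: str, payload: str, send: Callable[[str], None]
) -> bool:
    """Call send(payload) only for users who opted in. Returns True if sent."""
    if not store.has_opted_in(user_id):
        return False
    send(payload)
    return True
```

The design choice that matters is the default: a user with no record is treated as not having consented, so nothing is forwarded until `record_opt_in` has been called for that user.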

What Your Privacy Policy Must Include

If you’re using a Vibe Coding platform, your Privacy Policy must cover these exact points:

- What data you collect: Email, IP address, device info, usage patterns, code inputs.

- Why you collect it: To run the app, to provide support, to improve performance.

- Who you share it with: Vibecode (for AI training), Firebase (for analytics), Stripe (for payments).

- How long you keep it: “We retain user emails for 2 years after account deletion.”

- User rights: “You can request access, correction, or deletion of your data.”

- AI training disclosure: “User inputs may be permanently used to train our AI models. We do not delete this data.”

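- Consent mechanism: An explicit opt-in that users complete before any data is collected, for example an unchecked box labeled “I agree to the permanent use of my inputs for AI training.” A blanket “by using this app, you consent” clause is not an opt-in.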

Missing even one? Your app won’t pass review. Apple’s June 2025 update requires explicit disclosure of AI training practices. Google’s April 2025 update says the same. No exceptions.
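The checklist above can be turned into a quick completeness check to run before you submit. This is only a sketch: the section keys in `REQUIRED_SECTIONS` are hypothetical names chosen for this example, and the check confirms that each section has some text, not that the text would satisfy Apple, Google, or a regulator.

```python
# Hypothetical keys, one per item in the checklist above.
REQUIRED_SECTIONS = (
    "data_collected",
    "purposes",
    "recipients",
    "retention",
    "user_rights",
    "ai_training_disclosure",
    "consent_mechanism",
)


def missing_sections(policy: dict) -> list:
    """Return the required sections that are absent or blank, in checklist order."""
    return [
        key
        for key in REQUIRED_SECTIONS
        if not str(policy.get(key, "")).strip()
    ]


# Example draft that covers only part of the checklist.
draft = {
    "data_collected": "Email, IP address, device info, usage patterns, code inputs.",
    "purposes": "To run the app, to provide support, to improve performance.",
    "recipients": "Vibecode (AI training), Firebase (analytics), Stripe (payments).",
    "retention": "   ",  # whitespace only counts as missing
}
```

Running `missing_sections(draft)` on the example reports the four sections that still need to be written.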

The Right Way to Do It

You don’t need a lawyer. But you do need the right tool. Services like iubenda have updated their generators specifically for AI platforms like Vibe Coding. Their AI Compliance Module asks:

- “Do you use an AI code generator like Vibecode?”

- “Does the platform store or train on user inputs?”

- “Are those uses permanent?”

Answer those questions. It spits out a policy that matches exactly what’s required. One developer on Capterra gave it 4.7 out of 5 stars: “Their generator asked about the irreversible AI training. Most others didn’t even mention it.”

Compare that to trying to write it yourself. You’ll miss things. You’ll assume your app is “simple.” But Apple doesn’t care how simple it is. They care if you told users what happens to their data. Period.

The Cost of Getting It Wrong

Rejection isn’t the worst part. The real cost is time. Developers who get rejected spend 8-12 hours fixing their policies, resubmitting, and waiting. Some wait weeks. Others abandon their apps.

And it’s getting worse. By 2026, Forrester predicts 92% of app store rejections for AI-built apps will be due to bad privacy disclosures. The EU AI Act, whose first obligations began applying in February 2025, allows fines of up to 7% of global annual turnover for the most serious violations. This isn’t a warning. It’s enforcement.

There’s no gray area anymore. If your app was built with Vibe Coding and your Privacy Policy doesn’t say user data is permanently used for AI training, you’re violating the law. And the app stores know it.

What’s Changing in 2026

Vibe platforms are starting to respond. Vibecoding.build updated its Privacy Policy in July 2025 to include a section titled “How AI Affects Your Privacy.” Vibe Coding AI announced in January 2025 that they’ll add “enhanced user controls” for data used in training. But those changes don’t help you. They’re for the platform itself. Your app still needs its own policy.

The market is shifting fast. The global privacy compliance software market hit $12.4 billion in 2024 and is growing 23.7% a year. Why? Because developers are waking up. They’re realizing that AI doesn’t replace legal work. It makes it more complex.

If you’re using Vibe Coding to build apps, your job isn’t just to make something work. It’s to make sure users know exactly how their data is used. And that means a policy that’s specific, accurate, and legally sound.

Do Vibe Coding platforms generate legally compliant Privacy Policies?

No. Vibe Coding platforms like Vibe Coding AI and Vibecode App Builder generate application code, not legal documents. They require developers to create their own Terms of Service and Privacy Policies that reflect how their app uses data, including how data flows to the Vibe platform for AI training. Relying on the platform’s own policy for your app will result in app store rejections.

Why do apps built with Vibe Coding get rejected from the App Store?

Apps are rejected because their Privacy Policies fail to disclose that user data (like code inputs, emails, or usage patterns) is permanently sent to Vibe Coding platforms for AI training. Apple and Google now require explicit, detailed disclosures about AI training practices. Generic or vague policies don’t meet these standards.

What’s the biggest mistake developers make with Privacy Policies for Vibe apps?

The biggest mistake is assuming that because the Vibe platform has its own Privacy Policy, the app built on it doesn’t need one, or can simply copy it. Each app must have its own policy that accurately describes its own data collection and third-party integrations, including how Vibe Coding’s AI training affects user data. Copying the platform’s policy is legally insufficient and leads to rejection.

Do I need user consent for AI training if I use Vibe Coding?

Yes. Under California’s CPPA guidance (February 2025) and the EU AI Act, if user data is permanently used for AI training, especially when the effect is irreversible, you must obtain explicit opt-in consent. Your Privacy Policy must state this clearly, and users must agree before you collect any data. Simply stating “we use data to improve services” is not enough.

Can I use ChatGPT to write my Privacy Policy for a Vibe-built app?

It’s risky. Most general AI tools don’t understand the specific data flows of Vibe platforms. They’ll miss critical details like irreversible AI training, third-party integrations, or jurisdiction-specific requirements. A 2024 study found 68% of AI-generated policies failed app store checks. Specialized tools like iubenda, which ask targeted questions about Vibe’s data practices, are far more reliable.

How long does it take to fix a rejected Privacy Policy?

Developers who get rejected typically spend 8-12 hours researching, rewriting, and resubmitting their policies, based on a 2025 survey of 142 developers. This doesn’t include waiting time for app store reviews, which can add another 1-3 weeks. Using a specialized generator like iubenda can cut this process to under 2 hours.