Archive: 2025/09

Tamara Weed, Sep 30, 2025

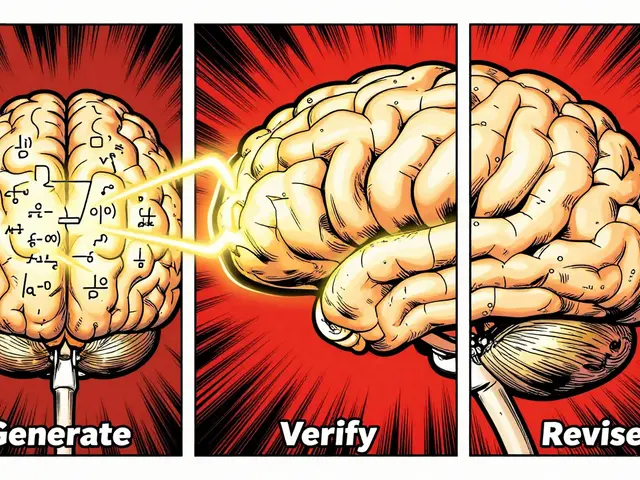

Large language models learn by predicting the next word across trillions of words of internet text, a method known as self-supervised training. This approach, used by GPT-4, Llama 3, and Claude 3, enables unprecedented language understanding without human labeling, but it comes with major costs and ethical challenges.
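Why "without human labeling"? The text itself supplies the answers: every word serves as the label for the words that come before it. The minimal sketch below shows how raw text becomes (context, next word) training pairs. It splits on whitespace purely for illustration (real models work on subword tokens), and the function name `next_token_pairs` is hypothetical.

```python
def next_token_pairs(text):
    """Turn raw text into (context, next word) pairs.

    Simplification: splits on whitespace; production LLMs use subword tokenizers.
    No human labeling is needed because each target word comes from the text itself.
    """
    words = text.split()
    return [(words[:i], words[i]) for i in range(1, len(words))]


pairs = next_token_pairs("the cat sat on the mat")
for context, target in pairs:
    print(" ".join(context), "->", target)
```

A model trained on pairs like these is scored on how well it predicts each target from its context. Scaled to trillions of words, that simple objective drives the capabilities described above.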

Tamara Weed, Sep 17, 2025

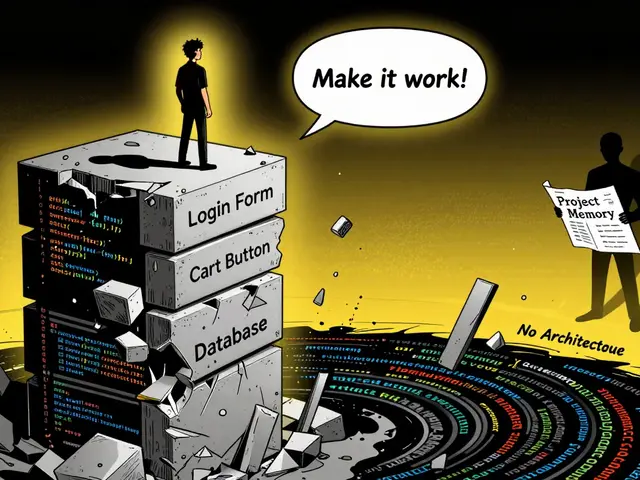

Vibe coding lets knowledge workers build custom apps by describing them in plain English instead of writing code, saving 12 to 15 hours a week. Tools like Knack, Memberstack, and Quixy make this possible, with no programming skills needed.
