When your team starts using Large Language Models (LLMs) daily, you quickly realize something: prompt engineering isn’t just about writing good prompts. It’s about building systems that let everyone on the team produce reliable, high-quality results - without reinventing the wheel every time.

Early on, teams might start with a few prompts saved in a Notion doc or a shared Google Sheet. But as usage grows, chaos follows. One person’s "summarize this" prompt gives a two-sentence answer. Another’s gives a full report. Someone else’s output is 500 tokens long when the client asked for 150. Mistakes pile up. Time gets wasted. New hires spend weeks just figuring out what works.

That’s where prompt libraries come in.

What Is a Prompt Library?

A prompt library is a structured collection of reusable, tested, and documented prompts. Think of it like a code library - but instead of functions, you’re storing instructions for AI models. Each prompt is designed for a specific task: summarizing, classifying, translating, generating code, answering FAQs, or even writing marketing copy.

These aren’t random snippets. They follow a clear structure: context (what the model should know), instruction (what to do), and examples (what the output should look like). For example, a prompt for summarizing research papers might include:

- Context: "You are a scientific analyst. The user will provide a paper abstract."

- Instruction: "Summarize in 3 bullet points, under 100 words."

- Examples: One input-output pair showing a clean summary.

Advanced libraries use variables - like {{input_text}} or {{tone}} - so prompts can be reused across different inputs. A "draft email" template can adjust tone based on whether the recipient is a client, colleague, or regulator.
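Here is a minimal sketch of how variable substitution could work, assuming the `{{name}}` placeholder style shown above. The template text, the `render` helper, and the `recipient` variable are hypothetical and only for illustration; real libraries often use a templating engine instead.

```python
import re

# Hypothetical "draft email" template using {{name}} placeholders.
EMAIL_TEMPLATE = (
    "You are drafting an email to a {{recipient}}.\n"
    "Use a {{tone}} tone.\n"
    "Reply to the following message:\n"
    "{{input_text}}"
)

# Matches {{name}} with optional spaces inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, **values: str) -> str:
    """Fill every {{name}} placeholder; fail loudly if a value is missing."""
    def substitute(match):
        name = match.group(1)
        if name not in values:
            raise KeyError(f"missing value for placeholder {name!r}")
        return values[name]

    return PLACEHOLDER.sub(substitute, template)


prompt = render(
    EMAIL_TEMPLATE,
    recipient="regulator",
    tone="formal",
    input_text="Please confirm the audit date.",
)
print(prompt)
```

Raising an error on a missing variable is a deliberate choice in this sketch: a prompt that silently ships with a literal `{{tone}}` in it is harder to debug than one that fails at render time.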

Why Teams Need This

Without a library, every team member works in isolation. You end up with 17 versions of "rewrite this for clarity," and no one knows which one’s best. A prompt library fixes that.

IBM Research found that teams using structured prompt libraries saw a 43% drop in output inconsistencies. That’s huge when you’re serving customers or making decisions based on AI outputs. New hires get up to speed 68% faster because they don’t have to guess what works - they just pick a template.

There are also cost savings. A Stanford HAI study in November 2025 showed that well-designed prompts cut token usage by up to 37%. Fewer tokens mean lower API costs. One team at a fintech startup cut their monthly OpenAI bill by $12,000 just by optimizing their prompt templates.

And quality? Human evaluators rated outputs from templated prompts 29% higher than ad-hoc ones. That’s not magic. It’s consistency. You’re no longer relying on luck or individual skill.

How Enterprise Teams Build Them

Building a prompt library isn’t just copying and pasting. It’s engineering. Here’s how top teams do it:

- Start with an audit. Gather every prompt used in the last six months. Look for duplicates, dead ends, and patterns. What tasks keep coming up? What’s being rewritten over and over?

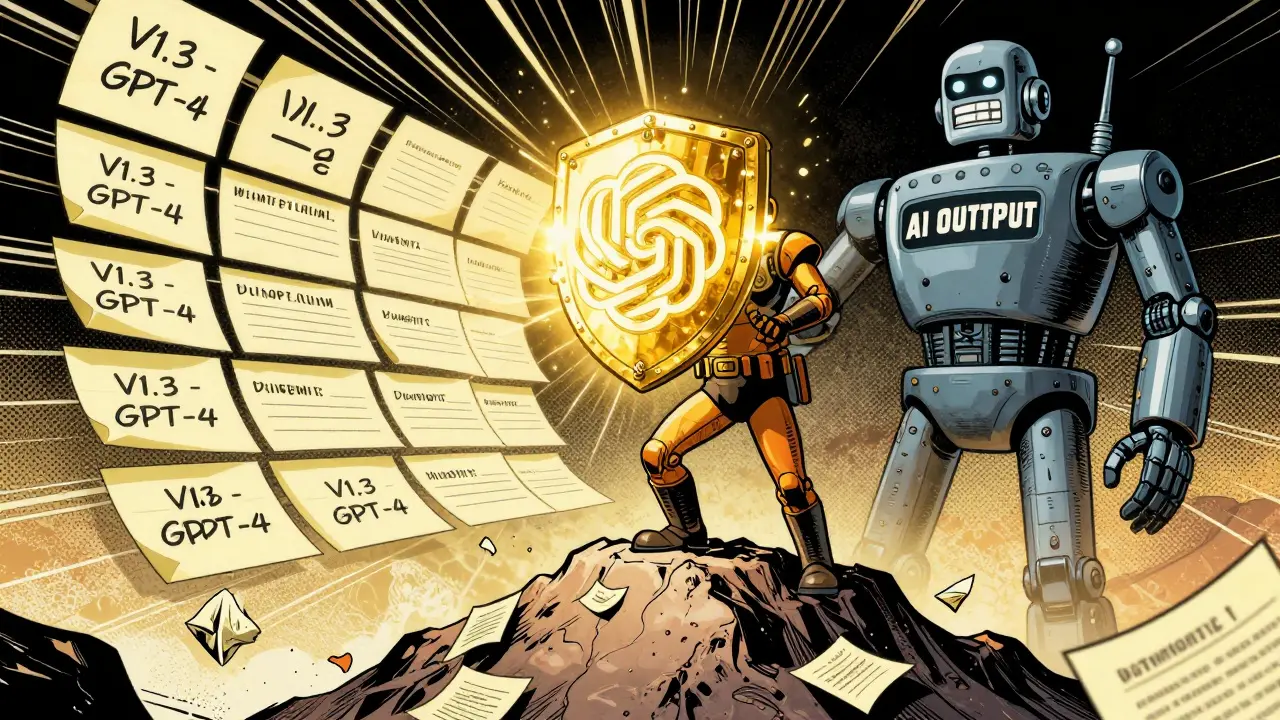

- Standardize the format. Use JSON or YAML. Each prompt gets a unique ID, a category (e.g., "summarization", "classification"), a model tag (e.g., "GPT-4", "Claude 3.5"), and a version number. Metadata matters - you’ll need it later.

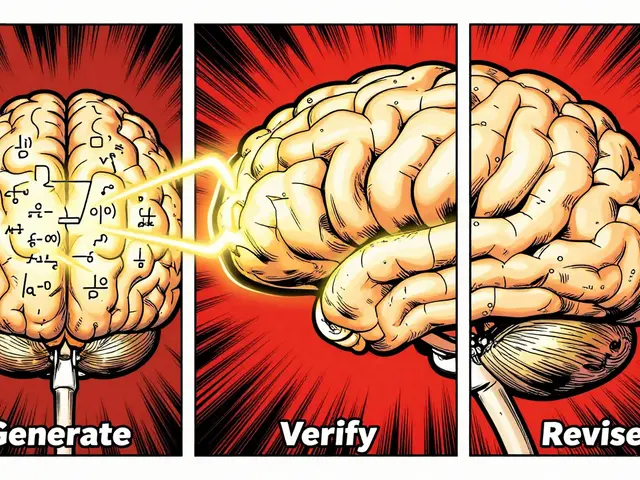

- Version control with Git. Treat prompts like code. Every change is tracked. A team member edits a prompt? They create a branch, test it, submit a pull request. Others review. Approval is required before merging. This stops bad prompts from slipping into production.

- Integrate with workflows. Plug the library into your development tools. Use API endpoints so your app pulls the right prompt automatically. Tools like PromptHub (with over 2,800 GitHub stars) support 15+ LLMs and let you test prompts directly in your CI/CD pipeline.

- Measure performance. Track success rate (how often output meets criteria), token efficiency, and consistency (using semantic similarity tools). If a prompt’s success rate drops below 80%, flag it for review.
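To make the metadata and measurement steps concrete, here is a rough sketch of a prompt record that carries the fields listed above and flags itself for review when its success rate falls below 80%. The class name, field names, and the sample ID and version are assumptions for illustration, not a standard schema.

```python
from dataclasses import dataclass

REVIEW_THRESHOLD = 0.80  # from the text: flag prompts below an 80% success rate


@dataclass
class PromptRecord:
    prompt_id: str   # unique ID
    category: str    # e.g. "summarization", "classification"
    model: str       # e.g. "GPT-4", "Claude 3.5"
    version: str     # bumped on every reviewed change
    text: str        # the prompt itself
    passed: int = 0  # runs whose output met the criteria
    total: int = 0   # all recorded runs

    def record_run(self, met_criteria: bool) -> None:
        """Record one evaluated output."""
        self.total += 1
        if met_criteria:
            self.passed += 1

    @property
    def success_rate(self) -> float:
        return self.passed / self.total if self.total else 0.0

    def needs_review(self) -> bool:
        """True once there is data and the success rate is under the threshold."""
        return self.total > 0 and self.success_rate < REVIEW_THRESHOLD


record = PromptRecord(
    prompt_id="sum-001",
    category="summarization",
    model="GPT-4",
    version="1.2.0",
    text="Summarize in 3 bullet points, under 100 words.",
)
for outcome in [True, True, True, False, False]:
    record.record_run(outcome)

print(record.success_rate, record.needs_review())  # prints: 0.6 True
```

How you decide whether an output "met the criteria" is the hard part and is left out here; it could be a length check, a rubric scored by a reviewer, or an automated evaluator.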

Teams that follow this process report cutting prompt iteration time from days to hours. One Reddit user wrote: "After our library went live, we went from 3-4 days per project to 6-8 hours. No more 'why is this output so weird?'"

What You Can’t Ignore

It’s not all smooth sailing. Here’s what trips teams up:

- Model drift. GPT-4.5 launches. Suddenly, prompts that worked perfectly on GPT-4 now hallucinate. You need automated regression tests. AWS recommends running 50 test cases every time the model updates. If output quality drops, the system alerts you.

- Over-standardization. A prompt library isn’t a prison. Dr. Margaret Mitchell warned at NeurIPS 2025 that too much rigidity kills creativity. Sometimes, a new problem needs a fresh approach. Leave room for experimentation. Allow "sandbox" prompts outside the main library.

- Setup cost. Gartner found teams spend 80-120 hours just to get a basic system running. That’s 2-3 weeks of full-time work. If you’re a small team, start small. Pick one high-impact task - like customer support responses - and build a library for that first.

- Compatibility. Not all models respond the same. Chain-of-thought prompting only works reliably on models with over 62B parameters, according to Wikipedia’s prompt engineering entry. A prompt that works on GPT-4 might fail on Llama 3. Your library must support model-specific branches.
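The model drift check can be sketched as a small regression harness. This is only an illustration under stated assumptions: `old_model` and `new_model` are stand-in functions rather than real API calls, the test cases and the 5-point tolerance are made up, and a real suite would run far more cases (the AWS recommendation quoted above is 50).

```python
from typing import Callable, List, Tuple

# A test case is (input text, check that returns True if the output is acceptable).
TestCase = Tuple[str, Callable[[str], bool]]


def pass_rate(model: Callable[[str], str], prompt: str, cases: List[TestCase]) -> float:
    """Run every test case through the model and return the fraction that pass."""
    if not cases:
        raise ValueError("need at least one test case")
    passed = 0
    for input_text, check in cases:
        output = model(prompt.replace("{{input_text}}", input_text))
        if check(output):
            passed += 1
    return passed / len(cases)


def regressed(baseline: float, candidate: float, tolerance: float = 0.05) -> bool:
    """True if the candidate pass rate fell more than `tolerance` below baseline."""
    return candidate < baseline - tolerance


# Stand-in models (hypothetical): the "new" one ignores the length limit.
def old_model(prompt: str) -> str:
    return "- point one\n- point two\n- point three"


def new_model(prompt: str) -> str:
    return "word " * 150


def under_100_words(output: str) -> bool:
    return len(output.split()) <= 100


PROMPT = "Summarize in 3 bullet points, under 100 words.\n\n{{input_text}}"
cases = [("abstract A", under_100_words), ("abstract B", under_100_words)]

baseline = pass_rate(old_model, PROMPT, cases)
candidate = pass_rate(new_model, PROMPT, cases)
if regressed(baseline, candidate):
    print(f"ALERT: pass rate dropped from {baseline:.0%} to {candidate:.0%}")
```

Because the harness takes the model as a plain callable, the same test cases can be run against each model-specific branch of a prompt.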

Market Reality: It’s Getting Real

Prompt management isn’t a niche experiment anymore. The market hit $2.3 billion in 2025, growing 47% year-over-year. Gartner says 89% of high-performing AI teams use formal prompt libraries. Only 32% of low-performing ones do.

Industries are racing to adopt. Financial services? 78% adoption. Healthcare? 71%. Why? Because regulation is catching up. The EU AI Act, which went live in January 2026, now requires documentation of all prompts used in high-risk applications - like loan approvals or medical triage. If you can’t prove what prompt produced a decision, you’re in violation.

Three types of tools dominate:

- Open-source (32% market share): PromptHub, LangChain, LlamaIndex. Free, flexible, but need engineering muscle to run.

- Enterprise platforms (45%): Google’s PromptFlow 2.0, Microsoft’s Project PromptBridge, Anthropic’s Console. Built-in A/B testing, analytics, and model switching.

- Specialized vendors (23%): Tools like PromptOps, PromptStack. Fastest growing - up 68% YoY. They handle compliance, versioning, and deployment for you.

The Future: Beyond Prompts

McKinsey predicts prompt libraries will evolve into "AI workflow blueprints" by 2027. That means not just prompts, but full contexts: data sources, model choices, validation rules, human review steps, and fallback logic.

Google and OpenAI are already moving in this direction. OpenAI announced standardized prompt formats for GPT-5 in January 2026. Google’s PromptFlow 2.0 lets you test 10 variations of a prompt side-by-side with real-time metrics.

But there’s a warning. An MIT Technology Review article in January 2026 asked: "Is the promptless era coming?" Future models might not need structured prompts at all. They might understand intent directly. If that happens, today’s libraries could become obsolete.

Still, Gartner’s take is clear: "Prompt engineering and management will become as fundamental to AI development as unit testing is to software engineering."

Right now, if your team uses LLMs seriously - and you’re not using a prompt library - you’re flying blind. It’s not about being cutting-edge. It’s about being reliable. Scalable. Safe.

Start small. Pick one task. Build one template. Test it. Measure it. Share it. Then do it again.

What’s the minimum setup for a prompt library?

Start with a simple JSON file in a Git repo. Each prompt needs: a unique ID, a task name, the model it’s for (e.g., "GPT-4"), the actual prompt text, and one example input-output pair. Add version numbers. That’s it. No fancy tools needed. Just structure and version control.
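As a rough sketch of that minimum setup, the snippet below embeds a one-entry library as a JSON string and validates it on load. The field names (`id`, `task`, `model`, `version`, `prompt`, `example`) and the sample entry are assumptions chosen to match the list above; in practice the JSON would live in its own file in the repo.

```python
import json

# Assumed field names, mirroring the minimum fields described in the text.
REQUIRED_FIELDS = {"id", "task", "model", "version", "prompt", "example"}

# In a real repo this would be the contents of a file such as prompts.json.
LIBRARY_JSON = """
[
  {
    "id": "sum-001",
    "task": "summarize-abstract",
    "model": "GPT-4",
    "version": "1.0.0",
    "prompt": "Summarize in 3 bullet points, under 100 words.\\n\\n{{input_text}}",
    "example": {
      "input": "<paper abstract>",
      "output": "- point one\\n- point two\\n- point three"
    }
  }
]
"""


def load_library(raw: str) -> dict:
    """Parse the library and index prompts by ID, rejecting incomplete entries."""
    library = {}
    for entry in json.loads(raw):
        missing = REQUIRED_FIELDS - set(entry)
        if missing:
            raise ValueError(f"prompt {entry.get('id', '?')} is missing {sorted(missing)}")
        if entry["id"] in library:
            raise ValueError(f"duplicate prompt id {entry['id']}")
        library[entry["id"]] = entry
    return library


library = load_library(LIBRARY_JSON)
print(library["sum-001"]["task"], library["sum-001"]["version"])
```

Running a check like this in a pre-merge step means a pull request that drops a required field or reuses an ID fails before it reaches anyone's application.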

Can I use one prompt for multiple LLMs?

Sometimes, but not reliably. GPT-4, Claude 3, and Llama 3 process language differently. A prompt that works perfectly on one might fail on another. Best practice: maintain separate branches or versions for each model. Use metadata to tag which model each prompt is optimized for.
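One way to organize model-specific versions is a lookup keyed by task and model, with a generic fallback. The sketch below is illustrative only: the `VARIANTS` table, its prompt wording, and the `select_prompt` helper are hypothetical.

```python
from typing import Dict, Optional, Tuple

# (task, model) -> prompt text. A model of None marks the generic fallback.
VARIANTS: Dict[Tuple[str, Optional[str]], str] = {
    ("summarize", "GPT-4"): "Summarize in 3 bullet points, under 100 words.",
    ("summarize", "Llama 3"): (
        "Write exactly 3 bullet points. Use fewer than 100 words in total. "
        "Do not add an introduction."
    ),
    ("summarize", None): "Summarize the text in 3 short bullet points.",
}


def select_prompt(task: str, model: str) -> str:
    """Prefer the variant tuned for this model, else fall back to the generic one."""
    for key in ((task, model), (task, None)):
        if key in VARIANTS:
            return VARIANTS[key]
    raise LookupError(f"no prompt registered for task {task!r}")


print(select_prompt("summarize", "Llama 3"))
print(select_prompt("summarize", "Claude 3"))  # no tuned variant, uses the fallback
```

Whether to allow a fallback at all is a judgment call; a stricter library might raise an error for untested models so nobody ships a prompt that was never evaluated on the model in use.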

How do I know if a prompt is working well?

Track three metrics: success rate (how often output meets your criteria), token efficiency (input + output length), and consistency (how similar outputs are across multiple runs). Aim for 85%+ success rate and under 20% variation in output length. Tools like LangChain or PromptHub can auto-calculate these.
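If you want to compute these by hand before adopting a tool, a minimal sketch could look like the following. It assumes you already log, per run, whether the output met your criteria and the input and output token counts. Treating "variation in output length" as the coefficient of variation (standard deviation divided by mean) is an assumption of this sketch, and the sample numbers are invented.

```python
from statistics import mean, pstdev
from typing import List, Tuple


def success_rate(results: List[bool]) -> float:
    """Fraction of runs whose output met the criteria."""
    return sum(results) / len(results)


def average_tokens(runs: List[Tuple[int, int]]) -> float:
    """Mean of input + output tokens per run."""
    return mean(inp + out for inp, out in runs)


def length_variation(output_lengths: List[int]) -> float:
    """Coefficient of variation of output length (population std dev / mean)."""
    return pstdev(output_lengths) / mean(output_lengths)


# Invented sample data for five runs of one prompt.
results = [True, True, False, True, True]
runs = [(200, 100), (200, 110), (200, 90), (200, 105), (200, 95)]
output_lengths = [out for _, out in runs]

print(f"success rate:     {success_rate(results):.0%}")
print(f"avg tokens/run:   {average_tokens(runs):.0f}")
print(f"length variation: {length_variation(output_lengths):.1%}")

meets_targets = success_rate(results) >= 0.85 and length_variation(output_lengths) < 0.20
```

With this sample data the prompt stays well within the length target but misses the 85% success target, which is the kind of result that should send it back for review.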

Do I need AI engineers to build this?

Not necessarily. A prompt engineer or even a skilled product manager can build a basic library. What you need is someone who understands the tasks, can write clear instructions, and knows how to use Git. You don’t need to train models - just organize and test prompts. The tech is simple; the discipline is what matters.

What’s the biggest mistake teams make?

Treating prompts as static. AI models update. Teams forget to test their prompts after a model upgrade. One team spent months building a library, then didn’t test it after GPT-4.5 launched - and their customer service bot started giving nonsense answers. Always run regression tests. Automate them if you can.