Seattle Skeptics on AI - Page 2

Tamara Weed, Jan 20, 2026

Generative AI isn't just a tool; it's a system with hidden costs. Learn how to budget for data, compliance, training, and ongoing maintenance to realize real ROI. Avoid the 73% failure rate by planning for the full lifecycle.

Tamara Weed, Jan 19, 2026

Learn how agent plugins and tools like Cline, Cursor, and Anima extend vibe coding to build apps with natural language. Discover real-world uses, Chrome extensions, limitations, and how to start today.

Tamara Weed, Jan 18, 2026

Domain-specialized generative AI models outperform general AI in healthcare, finance, and legal fields by focusing on industry-specific data. Learn how they work, where they excel, and why they're becoming the standard for enterprise AI.

Tamara Weed, Jan 17, 2026

Learn how LLM compression techniques like quantization and pruning let you run large models on consumer GPUs and CPUs without sacrificing performance. Real-world benchmarks, trade-offs, and what to use in 2026.

Tamara Weed, Jan 16, 2026

Trustworthy AI for code is no longer optional. With AI generating millions of lines of code daily, verification, provenance, and watermarking are essential to prevent security risks, ensure compliance, and maintain developer trust.

Tamara Weed, Jan 15, 2026

Standardized protocols for measuring bias in large language models use audit tests, embedding analysis, and text evaluation to detect unfair patterns. Learn how these tools work, which ones are most effective, and how to start using them today.

Tamara Weed, Jan 14, 2026

Learn how to cut LLM response times using streaming, batching, and caching. Reduce latency to under 200 ms, boost user engagement, and lower infrastructure costs with proven techniques.

Tamara Weed, Jan 13, 2026

Generative AI is now transforming healthcare, finance, manufacturing, and customer service in 2025, cutting costs, speeding up workflows, and boosting accuracy. Learn how real companies are using it, and what it takes to make it work.

Tamara Weed, Jan 11, 2026

Context windows in large language models define how much text an AI can process at once. Learn the limits of today’s top models, the trade-offs of longer windows, and practical strategies to use them effectively without wasting time or money.

Tamara Weed, Jan 10, 2026

Vibe coding speeds up development but introduces serious security risks. Learn how to triage AI-generated vulnerabilities by evaluating severity, exploitability, and impact, with real data from 2024-2025 research.

Tamara Weed, Dec 29, 2025

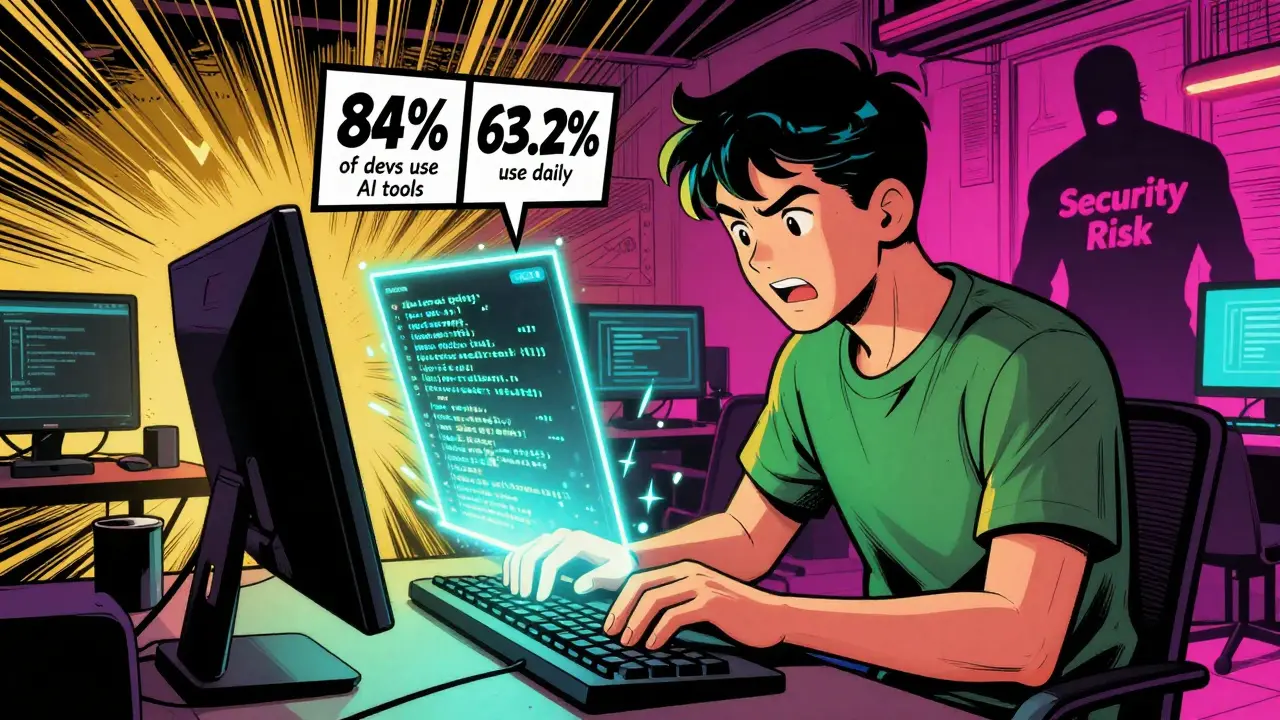

Vibe coding adoption is surging, with 84% of developers using AI tools, but only 9% trust them for production code. Learn the key stats, top platforms, security risks, and real-world usage patterns that define this new era of software development.

Tamara Weed, Dec 26, 2025

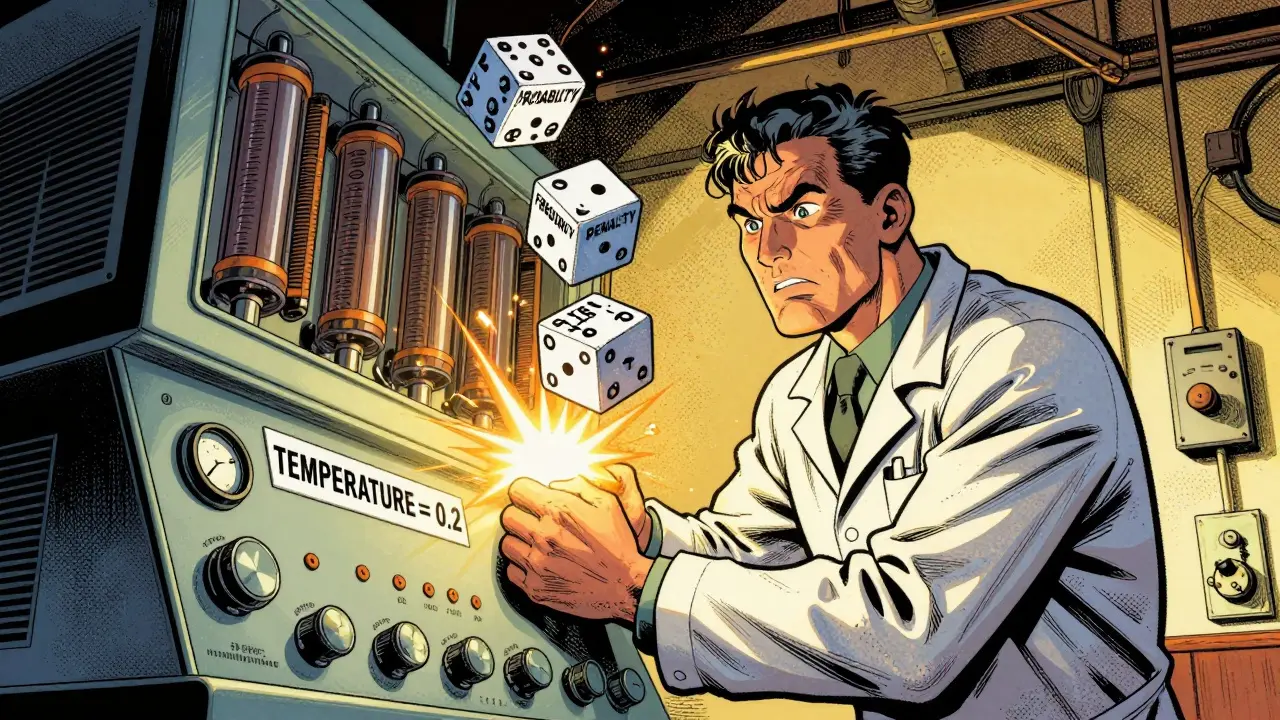

Learn how to reduce unpredictable responses from AI models using deterministic prompts, temperature settings, and other proven techniques. Get consistent, reliable outputs for production use.
