Tag: large language models

Tamara Weed, Jan 25, 2026

Decoder-only transformers dominate modern LLMs thanks to their speed and scalability, but encoder-decoder models still lead in precision tasks such as translation and summarization. Learn which architecture fits your use case in 2026.

Tamara Weed, Jan 24, 2026

Prompt chaining and agentic planning are two ways to make LLMs handle complex tasks. One is simple and cheap; the other is smarter but costly. Learn which one fits your use case, and why most teams get it wrong.

Tamara Weed, Jan 11, 2026

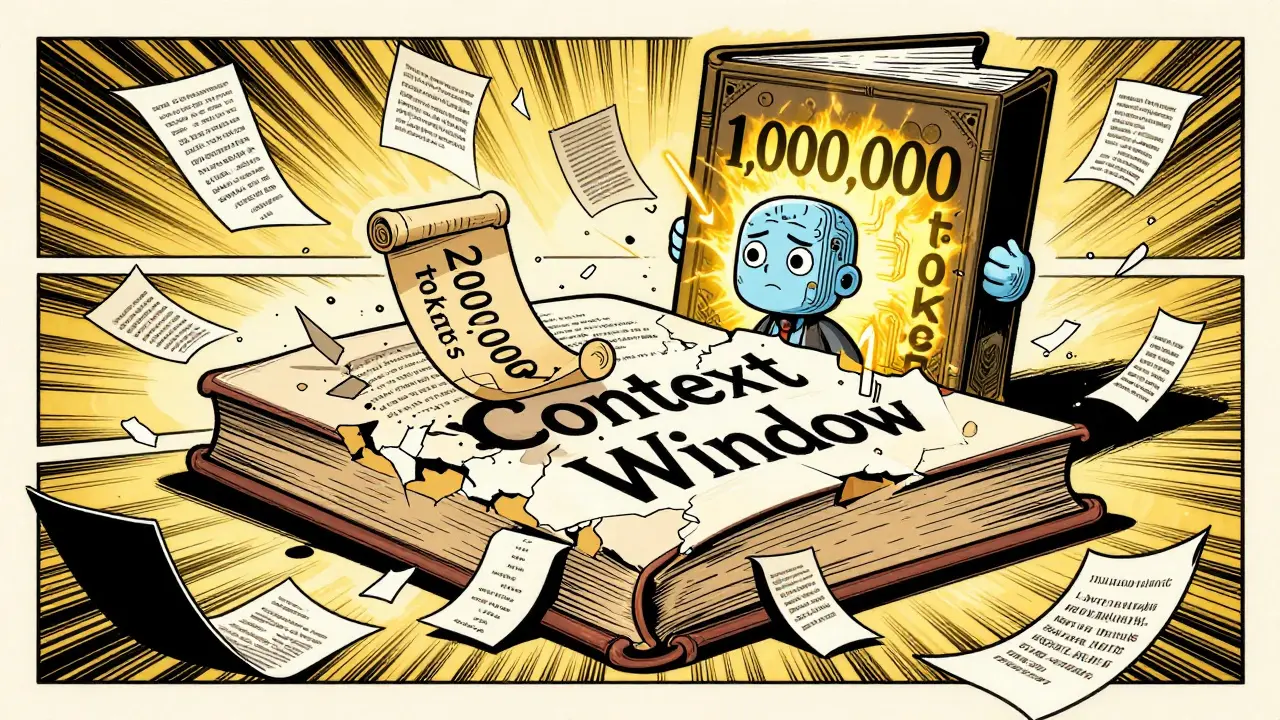

Context windows in large language models define how much text, measured in tokens, a model can process at once. Learn the limits of today’s top models, the trade-offs of longer windows, and practical strategies to use them effectively without wasting time or money.

Tamara Weed, Dec 20, 2025

Parameter count in large language models strongly shapes their reasoning power, knowledge retention, and task performance. But bigger isn't always better: architecture, quantization, and efficiency matter just as much as raw size.

Tamara Weed, Dec 19, 2025

Large language models often answer confidently even when they're wrong. Learn how new methods detect when a model is out of its depth, and how to make models communicate uncertainty honestly to build real trust.

Tamara Weed, Sep 30, 2025

Large language models learn by predicting the next token (roughly, the next word) across trillions of tokens of text, much of it from the internet, using self-supervised training. This method, used by GPT-4, Llama 3, and Claude 3, enables unprecedented language understanding without human labeling, but it comes with major costs and ethical challenges.

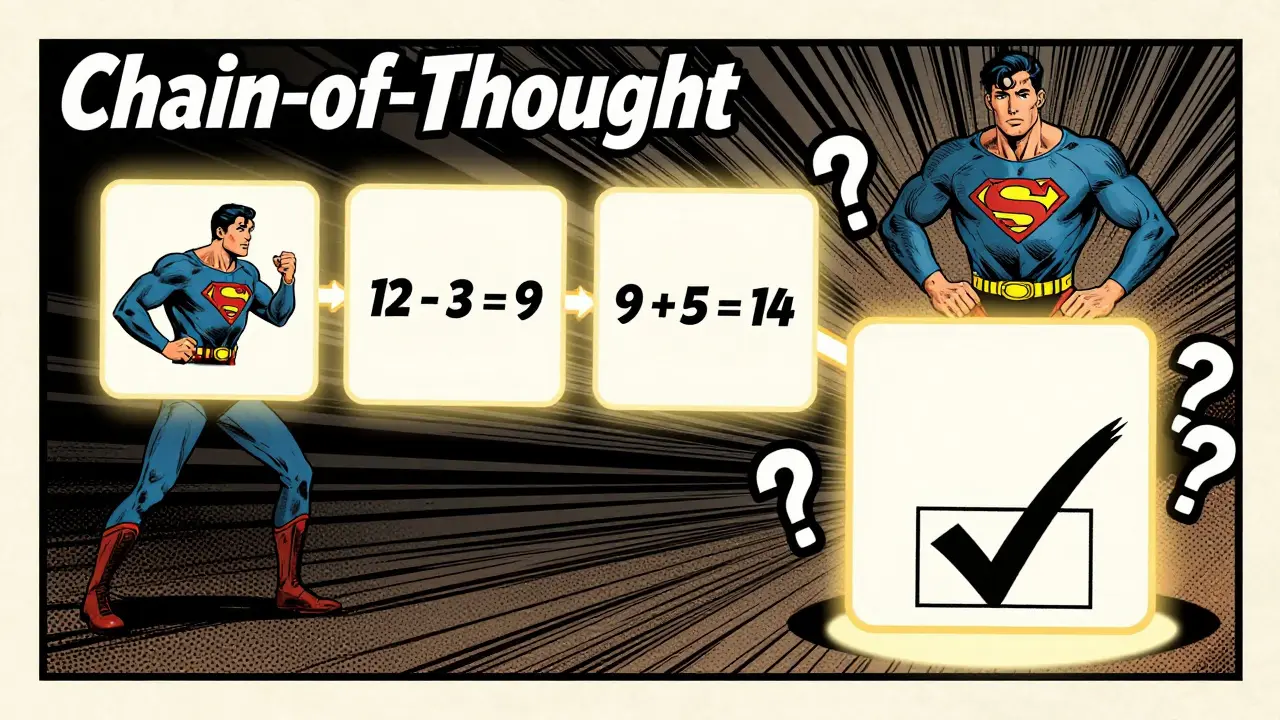

Tamara Weed, Aug 9, 2025

Chain-of-Thought, Self-Consistency, and Debate are transforming how large language models solve complex problems. Learn how these methods work, when to use them, and why they’re becoming essential in AI systems by 2025.